What Are Unikernels?

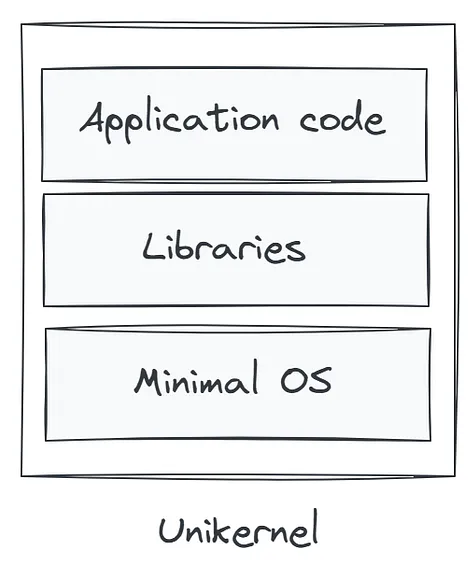

Unikernels represent a revolutionary shift in how we think about operating systems and application deployment. At their core, unikernels are highly specialized, single-purpose machine images that combine application code with only the minimal set of operating system components required to run that specific application. This results in a compact, efficient, and secure runtime environment that is purpose-built for one job and one job only.

Unlike traditional systems, which rely on general-purpose operating systems like Linux or Windows (bringing with them a multitude of features, services, and drivers that a specific application may never use), unikernels strip away all unnecessary components. What remains is a lean, self-contained binary that includes just the essential OS features, application logic, and libraries. This binary can then run directly on a hypervisor or even bare-metal hardware, with no general-purpose guest operating system sitting underneath the application.

This architectural philosophy stands in sharp contrast to virtual machines and containers. VMs typically run full-blown guest operating systems, leading to greater memory consumption, longer boot times, and a larger attack surface. Containers, though more efficient than VMs, still depend on a shared host kernel and usually pull in base OS images that may include unnecessary packages or services.

Unikernels, by design, eliminate these inefficiencies. Their advantages include:

- Minimal resource footprint: Since the image includes only what’s needed, unikernels consume significantly less memory and CPU.

- Faster boot times: Applications can launch in milliseconds, making unikernels ideal for serverless or highly ephemeral workloads.

- Enhanced security: By reducing the codebase and attack surface, unikernels offer fewer vectors for exploitation.

- Improved performance: With no user-kernel boundary to cross, OS services are reached through ordinary function calls rather than system calls, and no unused background services compete for CPU or memory.

This makes unikernels especially attractive for cloud-native workloads, edge computing, IoT, and serverless platforms—use cases where lightweight, fast, and secure execution environments are critical.

In essence, unikernels take the “do one thing and do it well” philosophy of Unix and apply it to the entire application stack, merging the application with its own customized mini-OS for unprecedented efficiency.

How Are Unnecessary OS Components Stripped Out?

The remarkable efficiency of unikernels is made possible by a foundational architectural concept known as the Library Operating System (Library OS). Unlike traditional monolithic or modular operating systems that provide a full suite of services by default—whether needed or not—the Library OS paradigm decomposes operating system functionalities into modular, reusable libraries.

In this approach, essential OS capabilities such as:

- Networking stacks (TCP/IP)

- File I/O

- Memory allocation

- Thread scheduling

- System calls

- Device drivers

…are all refactored into individual libraries. These libraries can be selectively linked with the application code during the compilation process. As a result, the final compiled unikernel image includes only the specific components required for the application to function—nothing more.

This granular selection mechanism eliminates the overhead associated with traditional general-purpose operating systems. By compiling just the bare minimum into a single-purpose binary, unikernels avoid shipping unnecessary code, drivers, or services that might never be invoked, reducing not just runtime footprint but also potential security vulnerabilities.

In essence, a unikernel treats the operating system more like a toolkit than a runtime environment. Developers explicitly choose which “tools” to include, and the rest are simply left out of the build.
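To make the "toolkit" idea concrete, here is a minimal sketch of the selection step, assuming a toy dependency graph. The library names and their dependencies are hypothetical and do not come from any real unikernel framework; actual build systems resolve this at link time, but the principle is the same: start from what the application asks for and pull in only what those libraries transitively require.

```python
# Toy model of library-OS linking. Every library name and dependency edge
# below is hypothetical and exists only for illustration.
LIBRARY_DEPS = {
    "http_server": {"tcp_ip"},
    "tcp_ip": {"net_driver", "memory_allocator"},
    "net_driver": {"memory_allocator"},
    "file_io": {"block_driver", "memory_allocator"},
    "block_driver": {"memory_allocator"},
    "scheduler": {"memory_allocator"},
    "memory_allocator": set(),
}


def select_libraries(app_needs, deps=LIBRARY_DEPS):
    """Return the transitive closure of the libraries the application needs."""
    selected = set()
    pending = list(app_needs)
    while pending:
        lib = pending.pop()
        if lib in selected:
            continue
        if lib not in deps:
            raise KeyError(f"unknown library: {lib}")
        selected.add(lib)
        # Queue this library's own dependencies that are not yet included.
        pending.extend(deps[lib] - selected)
    return selected


if __name__ == "__main__":
    image = select_libraries({"http_server"})
    print("linked into the image:", sorted(image))
    print("left out of the build:", sorted(set(LIBRARY_DEPS) - image))
```

In this toy graph, an application that only serves HTTP ends up with the network stack, its driver, and the allocator, while the file I/O library, block driver, and scheduler never make it into the image.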

Unikernels Are Single-Process Systems

A defining characteristic of unikernels is their single-process architecture. Unlike general-purpose operating systems, which are built to support multiple concurrent processes and multi-user environments, a unikernel is optimized to execute just one application or service, and do so with maximum efficiency.

This design choice brings several key implications:

- No process switching overhead: Traditional OSes use context switching to move the CPU’s attention between multiple processes. Each switch incurs CPU cycles and memory management overhead. Since unikernels run only a single process, this costly context switching is eliminated.

- No distinction between user space and kernel space: General-purpose operating systems maintain strict separation between user space (where application code runs) and kernel space (where core OS logic operates). This separation, while important for security in multi-process environments, adds performance overhead due to mode switching and memory isolation requirements. In unikernels, this separation is unnecessary. Application code and OS libraries run in a unified address space, streamlining execution and improving memory access efficiency.

- Simplicity and performance: Because the unikernel’s runtime model is so simple (just one process, no kernel/user boundary, and minimal dependencies), execution paths are short, memory access is faster, and performance becomes highly deterministic. This makes unikernels especially well-suited for low-latency environments like network appliances, edge computing, and function-as-a-service platforms.

- Smaller attack surface: With no shell, no init system, and no ability to spawn additional processes or users, the potential for external intrusion or privilege escalation is vastly reduced. Security improves not through firewalls and access controls, but through elimination.

While the single-process model imposes some limitations, such as difficulty running multiple independent services within the same unikernel, it aligns well with modern microservices architecture. In such designs, each service is deployed independently, making unikernels a natural fit for cloud-native deployments where minimalism, speed, and isolation are prized.

Running Unikernels on MicroVMs: A Powerful Combination

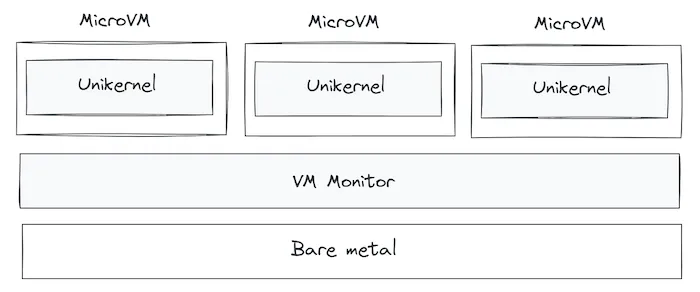

Once a unikernel image is compiled—containing only the essential OS components and application code—it can be executed directly on bare metal hardware or within a virtual machine (VM). However, in practice, the most popular and effective deployment model is to run unikernels inside microVMs, leveraging lightweight virtualization technologies such as Firecracker.

What Are MicroVMs?

MicroVMs are an evolution of traditional virtual machines. Designed with minimalism in mind, they strip away most of the non-essential components found in general-purpose hypervisors and full-featured VMs, such as legacy device emulation, BIOS layers, and rarely used drivers, keeping only a small set of virtual devices. This results in drastically reduced boot time, memory footprint, and CPU overhead, without compromising on strong isolation guarantees.

This makes microVMs an excellent fit for serverless platforms, multi-tenant infrastructure, and edge computing, where resources are constrained, workloads are ephemeral, and isolation between applications is paramount.

One of the most widely adopted technologies in this space is Firecracker, an open-source microVM monitor created by AWS. It was purpose-built to power services like AWS Lambda and AWS Fargate, enabling the secure and efficient execution of thousands of isolated functions or containers on the same physical server.

Why Run Unikernels on MicroVMs?

Running unikernels inside microVMs fuses the efficiency of unikernels with the secure isolation of virtualization, forming a robust foundation for modern cloud-native applications. This synergy delivers several compelling benefits:

Blazing Fast Startup

Unikernels have minimal boot requirements, and a microVM monitor like Firecracker can start a new instance in as little as 125 milliseconds. Together, they enable ultra-low-latency provisioning, ideal for on-demand compute and event-driven workloads.

High Density with Low Overhead

Modern hardware can support thousands of microVMs running unikernels simultaneously. The monitor itself adds only a few megabytes of memory overhead per microVM, on top of whatever the unikernel image needs, and an idle instance uses very little CPU. This enables maximum utilization of server resources, which is critical in high-scale environments.
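A quick back-of-the-envelope calculation shows where a figure like "thousands" can come from. The sketch below is only a memory-bound estimate under stated assumptions: the host size, the reserved amount, the per-guest memory, and the per-microVM monitor overhead are all hypothetical inputs, and CPU, network, and storage limits are ignored.

```python
def max_microvms(host_mem_mib, reserved_mib, guest_mem_mib, vmm_overhead_mib):
    """Estimate how many microVMs fit in host memory (memory-bound only)."""
    per_vm = guest_mem_mib + vmm_overhead_mib
    if per_vm <= 0:
        raise ValueError("per-VM memory must be positive")
    usable = host_mem_mib - reserved_mib
    return max(usable // per_vm, 0)


if __name__ == "__main__":
    # Hypothetical host: 256 GiB of RAM with 16 GiB reserved for the host itself.
    host, reserved = 256 * 1024, 16 * 1024

    # Hypothetical unikernel guest: 32 MiB of guest memory + 5 MiB monitor overhead.
    print(max_microvms(host, reserved, guest_mem_mib=32, vmm_overhead_mib=5))

    # Hypothetical general-purpose guest for comparison: 512 MiB + 5 MiB overhead.
    print(max_microvms(host, reserved, guest_mem_mib=512, vmm_overhead_mib=5))
```

With these assumed numbers, the small guest fits several thousand times into the host while the 512 MiB guest fits a few hundred times, which is the gap the density argument rests on. Real deployments would hit other limits well before the memory ceiling.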

Strong Security Isolation

While unikernels already have a minimal attack surface due to their single-process design and lack of extraneous system calls, wrapping each one in a microVM adds a second layer of hardware-backed isolation. This allows multiple tenants to safely share the same physical machine without risking data leakage or process interference.

Ideal for Edge & Serverless Environments

Edge computing scenarios demand fast-booting, secure, lightweight compute units that can operate close to end users. Likewise, serverless platforms must rapidly spin up and tear down functions on demand. Unikernel-on-microVM architectures shine in both cases—delivering deterministic performance and rock-solid isolation in small packages.

Deploying unikernels on microVMs provides a powerful architectural pattern that meets the needs of modern cloud-native and edge workloads. With minimal resource consumption, strong security boundaries, and lightning-fast startup times, this combination is redefining how developers think about compute in the age of microservices, multi-tenancy, and event-driven architectures.

Handling a Request in a Unikernel-MicroVM Architecture

In traditional serverless environments, one of the core performance bottlenecks is the cold start time—the delay introduced when a new instance must be initialized to handle an incoming request. However, with the combination of unikernels and microVMs, this delay is drastically minimized.

When an incoming request is received, a VM monitor like Firecracker can launch a new microVM in a fraction of a second. This microVM is pre-configured to run a unikernel image, in which the minimal operating system and the application logic are bundled into a single binary, so there is very little to boot. The microVM spins up and begins processing the request almost immediately, enabling a highly responsive and efficient compute model.

However, some unikernels contain complex initialization logic, such as loading machine learning models, configuring network layers, or establishing database connections. In those cases, the system can create a snapshot of the application’s in-memory state ahead of time, once it reaches a “ready-to-serve” state. This snapshot captures the entire memory and execution context of the application at the moment it is ready to accept traffic.

Instead of bootstrapping the unikernel from scratch for each new request, the VM monitor can load the snapshot directly into memory, effectively restoring a pre-warmed instance. This technique reduces latency even further, allowing complex services to offer the same speed and responsiveness as simpler ones.

🔹 Ready-to-serve snapshots preserve the exact memory state of a unikernel after it has finished all initial setup and is ready to accept traffic. They act as time-saving blueprints for new instances.
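The two paths described above (cold boot versus restoring a snapshot) can be sketched as a small planner that lists the calls a controller would send to the VM monitor. This is a sketch under assumptions, not a definitive client: the endpoint and field names follow Firecracker's HTTP API as commonly documented and should be checked against the version in use, the function names and file paths are hypothetical, the unikernel is assumed to be passed as the kernel image, and nothing here actually talks to a Firecracker socket.

```python
def start_plan(kernel_image, snapshot=None, vcpus=1, mem_mib=128):
    """Return ordered (method, path, body) calls that bring an instance up.

    If a (snapshot_path, mem_path) pair is given, restore from it;
    otherwise configure the microVM and cold-boot the unikernel image.
    """
    if snapshot is not None:
        snapshot_path, mem_path = snapshot
        return [
            ("PUT", "/snapshot/load", {
                "snapshot_path": snapshot_path,
                "mem_backend": {"backend_type": "File", "backend_path": mem_path},
                "resume_vm": True,  # resume right after loading
            }),
        ]
    return [
        ("PUT", "/machine-config", {"vcpu_count": vcpus, "mem_size_mib": mem_mib}),
        # Assumption: the unikernel binary is supplied as the kernel image.
        ("PUT", "/boot-source", {"kernel_image_path": kernel_image}),
        ("PUT", "/actions", {"action_type": "InstanceStart"}),
    ]


def snapshot_plan(snapshot_path, mem_path):
    """Return the calls that capture a ready-to-serve instance."""
    return [
        ("PATCH", "/vm", {"state": "Paused"}),  # pause before snapshotting
        ("PUT", "/snapshot/create", {
            "snapshot_type": "Full",
            "snapshot_path": snapshot_path,
            "mem_file_path": mem_path,
        }),
        ("PATCH", "/vm", {"state": "Resumed"}),
    ]


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    for call in start_plan("app.unikernel"):
        print("cold boot:", call)
    for call in snapshot_plan("app.snap", "app.mem"):
        print("snapshot: ", call)
    for call in start_plan("app.unikernel", snapshot=("app.snap", "app.mem")):
        print("restore:  ", call)
```

The restore path is a single call, while the cold-boot path needs three calls plus whatever initialization the application performs afterwards. That difference is why snapshots pay off mainly for services with expensive startup logic.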

Unikernels vs Cloudflare Isolates: A Comparative Lens

Although both unikernels and Cloudflare isolates are designed to deliver efficient, fast, and secure execution environments, they differ significantly in architecture, isolation models, and operational philosophies.

Unikernels provide hardware-level isolation. They run as separate virtual machines (or even directly on bare metal), offering robust boundaries between workloads. Each unikernel encapsulates a single application and only the OS components it needs, resulting in minimal attack surfaces and high levels of security isolation. However, this isolation comes at a higher resource cost due to virtualization overhead and a lack of shared memory across instances.

In contrast, Cloudflare isolates offer a more lightweight and language-level isolation approach. Built on the V8 JavaScript engine (the same engine used in Chrome), isolates allow multiple scripts to run within the same OS process but with logical memory separation enforced by the runtime. This allows isolates to spawn nearly instantly, consume very little memory, and perform fast context switches—ideal for ultra-low-latency use cases like edge computing.

Another distinction lies in language support. Cloudflare isolates primarily support JavaScript and languages that compile to WebAssembly. This makes them perfect for frontend developers or lightweight microservices. Meanwhile, unikernels can be authored in a variety of programming languages (depending on the framework used)—such as C, Rust, Go, and OCaml—offering broader applicability across infrastructure-level applications, databases, and even custom protocols.

So how do you choose between the two?

- If your priority is maximum isolation, near-native performance, and the ability to use low-level systems programming languages, unikernels running on microVMs are ideal, especially for cloud and serverless backend services.

- If you need rapid scale-out, lightweight scripts, and JavaScript/WebAssembly-based runtime, isolates are better suited—especially for edge functions and web-centric compute.

For those interested in the underpinnings of Firecracker and its role in serverless platforms, the Firecracker Research Paper is a must-read.

You can also explore Unikraft Cloud for an accessible platform to build, deploy, and test unikernel-based workloads with minimal friction.

In essence, both unikernels and isolates are reshaping how we think about secure, lightweight, and scalable execution environments. While they tackle the challenge from different architectural angles, each offers a compelling answer to the growing demand for fast, resource-efficient, and isolated compute models in cloud-native and edge-driven applications.

Final Thoughts: The Future of Lightweight, High-Performance Compute

As cloud-native and edge computing continue to evolve, the need for faster, more secure, and resource-efficient compute environments has never been greater. Unikernels and microVMs represent a bold shift toward minimalism in infrastructure design—stripping away everything but what’s essential, and in doing so, maximizing performance, security, and scalability.

Whether it’s through unikernel-based microVMs offering hardware-level isolation with ultra-fast boot times, or language-level isolates like those used by Cloudflare enabling millisecond-scale executions at the edge, the common goal is clear: to make compute faster, leaner, and more adaptive.

In the future, we can expect these technologies to converge even further with advancements in compiler design, orchestration tooling, and observability frameworks. What’s emerging is a new paradigm of computing—where compute is on-demand, composable, and invisible, yet blazingly fast and deeply optimized.

As developers, architects, and infrastructure engineers, embracing these lightweight models means not only future-proofing our applications but also redefining how software is built, deployed, and scaled in the modern era.

⚡ The future isn’t just serverless—it’s kernel-less, container-less, and even OS-less.