How Cloudflare Achieves Blazing-Fast Serverless at the Edge

Cloudflare has fundamentally reimagined how serverless applications can be deployed and executed at the edge. Unlike traditional cloud platforms that rely heavily on containerized deployments or virtual machines managed via Kubernetes, Cloudflare chose a radically different route—leveraging the V8 engine to create a lightweight, lightning-fast execution environment.

This strategic choice enables developers to run serverless code globally, right at the edge, with very low latency and virtually zero cold-start time. The result? Code that starts executing in under 5 milliseconds, giving users a near-instantaneous experience regardless of their location.

Why Not Containers or Virtual Machines?

Containers and VMs are excellent tools for many use cases, offering isolation, scalability, and resource management. However, they come with inherent latency costs. Spinning up a container or VM to serve a request typically incurs a cold start time ranging from 500 milliseconds to as much as 10 seconds. While this is acceptable for some applications, it creates inconsistent and sluggish user experiences in latency-sensitive, real-time edge workloads.

Serverless, by nature, is designed to save cost by shutting down idle instances and spinning them up again on demand. But this spin-up time—the cold start—becomes a bottleneck. For applications like fraud detection, personalization, or API gateways where every millisecond counts, traditional containers just can’t compete.

Cloudflare identified this pain point and optimized its stack accordingly.

Enter V8 Isolates

Cloudflare’s architecture centers around V8 isolates—a feature of the same engine that powers Google Chrome and Node.js. Unlike containers, isolates do not boot up an entire OS or runtime. Instead, they execute scripts within an extremely lightweight, sandboxed environment that can be started in a matter of milliseconds.

This architecture offers several advantages:

- Near-Zero Cold Starts: Functions spin up in under 5 ms, ensuring highly responsive experiences.

- True Multitenancy at Scale: Each isolate is completely sandboxed, meaning thousands of functions from different tenants can run independently on the same machine, without shared memory or state.

- Massive Parallelism: Since isolates are lightweight, they consume fewer system resources, allowing Cloudflare to pack more workloads per server without degrading performance.

This approach allows Cloudflare to support real-time serverless applications at a global scale, delivering code execution closer to the end user than ever before. It flips the script on the traditional latency-cost trade-off—minimizing both cold start delays and the infrastructure footprint.

But as with any architectural decision, this comes with trade-offs, such as restrictions in language support and runtime capabilities. We’ll unpack those in a later section.

Inside Cloudflare’s V8 Isolate Architecture: A New Paradigm for Serverless at the Edge

At the heart of Cloudflare’s blazing-fast edge computing platform lies a revolutionary architectural choice: the use of V8 isolates—a lightweight, secure, and efficient runtime environment powered by Google’s V8 engine.

The V8 engine, originally developed for the Chrome browser, is a high-performance runtime designed to execute JavaScript and WebAssembly code at incredible speed. Cloudflare harnesses this engine to create isolates, which are sandboxed execution environments capable of running code independently, securely, and with minimal resource overhead.

Unlike traditional containers or virtual machines, which each require their own OS or isolated runtime environment, V8 isolates share the same underlying OS process. This shared process model dramatically reduces overhead while still maintaining strict memory and execution isolation between workloads.

In practice, this means a single V8 engine instance running within a single OS process can execute thousands of tenant workloads simultaneously, each within its own sandboxed isolate. These workloads have no access to one another’s memory or resources. Cloudflare enforces these boundaries strictly, ensuring tenant data security without the need for heavyweight isolation mechanisms like hypervisors.

Event-Driven, IO-Optimized Execution

Every isolate operates in an event-driven model, which makes it well suited to IO-bound tasks such as network requests, API handling, and lightweight data transformations. The event loop that drives each isolate ensures that it can respond quickly to input without consuming compute cycles during idle periods.

This model is ideal for serverless workloads that are stateless, quick to execute, and often triggered by external events—like HTTP requests, webhook calls, or message queues. These functions warm up in milliseconds (or less), execute their logic, and spin down just as quickly, all within the shared OS process, without incurring the traditional cold-start delays of containerized environments.
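To make the event-driven model concrete, here is a minimal sketch of a handler in the style of a Cloudflare Worker. It assumes the module-style shape in which an object exposes a `fetch` method that receives a standard `Request` and returns a `Response`; the `/hello` route and the response text are hypothetical and chosen only for illustration.

```typescript
// A minimal sketch of an event-driven handler in the style of a Cloudflare Worker.
// The route and messages are hypothetical; only the standard Request, Response,
// and URL web APIs are used.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const { pathname } = new URL(request.url);

    // The handler runs only when a request event arrives; nothing executes while idle.
    if (request.method === "GET" && pathname === "/hello") {
      return new Response("Hello from the edge", {
        status: 200,
        headers: { "content-type": "text/plain" },
      });
    }
    return new Response("Not found", { status: 404 });
  },
};

// In a deployed Worker this object would be the module's default export:
// export default worker;
```

The handler holds no state between invocations, which matches the stateless, quick-to-execute workloads described above.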

Security Through Memory Isolation

Despite running side by side in the same OS process, and therefore within the same process address space, each isolate maintains its own separate heap. This architecture ensures that:

- Code from one isolate cannot access or interfere with another’s data.

- All memory access is safely contained and strictly validated by the V8 runtime.

- Even malicious or buggy scripts from one tenant are fully contained, unable to compromise the system or neighboring functions.

This granular isolation mechanism is one of the key reasons Cloudflare’s serverless platform can safely serve a large and diverse set of workloads at the edge, without sacrificing security or performance.

Lightweight by Design

The V8 isolate model offers a strong combination of startup speed, resource efficiency, and tenant isolation. By eliminating the need to spin up full OS environments or allocate dedicated resources per function, Cloudflare can run thousands of functions per server, achieving scale and density that is hard to match with containers or VMs.

This is what allows Cloudflare Workers to feel instantaneous: serving dynamic content, executing user logic, or handling API requests within milliseconds, right from the nearest edge location.

The Cold Start Challenge in Traditional Containerized Serverless Deployments

In the realm of traditional serverless architectures—particularly those built on containers or virtual machines—cold start latency presents one of the most persistent performance bottlenecks.

Every time a new request is received for a function that isn’t already “warm” (i.e., preloaded and active), the cloud provider needs to initialize a new container instance. This container must load an entire runtime environment, which includes:

- The operating system (or minimal OS image)

- Language runtimes (e.g., Node.js, Python, Java)

- All required libraries and dependencies

- The actual application or function code

This initialization process introduces latency, known as a “cold start.” Depending on the platform and complexity of the function, cold start delays can range anywhere from a few hundred milliseconds to several seconds. For end-users expecting real-time performance—particularly in interactive applications—this delay can be unacceptable.
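The list above can be read as a simple sum: a cold start costs roughly the time of each initialization phase added together. The sketch below illustrates that arithmetic. All durations are hypothetical placeholders, not measurements from any platform.

```typescript
// Hypothetical phase durations in milliseconds; real values vary widely by platform.
interface ColdStartPhases {
  osImageMs: number;      // boot or unpack the (minimal) OS image
  runtimeMs: number;      // start the language runtime (e.g., Node.js, Python, Java)
  dependenciesMs: number; // load libraries and dependencies
  functionCodeMs: number; // load and initialize the function code itself
}

// Total cold-start latency modeled as the sum of sequential phases.
function coldStartMs(p: ColdStartPhases): number {
  return p.osImageMs + p.runtimeMs + p.dependenciesMs + p.functionCodeMs;
}

// Assumed example values, for illustration only.
const containerExample: ColdStartPhases = {
  osImageMs: 300,
  runtimeMs: 150,
  dependenciesMs: 200,
  functionCodeMs: 50,
};

const totalColdStartMs = coldStartMs(containerExample); // 700
```

Under this model, removing the OS and runtime phases (as an already-running engine does) leaves only the cost of loading the function itself.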

Resource Overhead from Isolation

Beyond latency, another significant drawback of traditional containerized deployments is the resource cost of process-level isolation.

Each function instance typically runs in its own isolated container process. This is critical from a security standpoint, as containers (and VMs) provide strong isolation boundaries between workloads. In a multi-tenant environment, this isolation ensures:

- Data confidentiality: Preventing one tenant’s function from accessing another’s memory or runtime state

- Fault isolation: A crash or failure in one container does not cascade to affect others on the same machine

However, this model introduces a heavy infrastructure footprint. Each container needs CPU, memory, and disk resources, even if it’s handling a relatively lightweight workload. When scaled to thousands or millions of concurrent users, the compute demand grows in proportion to the number of running instances, and the fixed per-container overhead is paid every time.

The result is a system that, while secure and functionally flexible, becomes resource-intensive and less agile. Cold starts add latency at the moment users need responsiveness most, and high isolation costs limit scalability and increase operational costs.

The Cloud Trade-Off

Most cloud platforms try to optimize around this problem using warm pools, pre-warmed containers, or just-in-time provisioning. But these are only partial mitigations.

- They don’t eliminate cold starts; they just reduce the likelihood.

- They consume idle resources to keep warm instances running, which adds cost.

- They struggle to scale instantly during unexpected spikes in traffic.

In short, traditional containerized serverless platforms strike a balance between security, flexibility, and performance, but they do so at the cost of startup latency and operational overhead.
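The trade-off behind warm pools can be sketched with two small formulas: expected latency falls as more requests hit a warm instance, while idle instances held in reserve keep costing resources. The function names and numbers below are assumptions made for illustration.

```typescript
// Expected request latency when only a fraction of requests hit a warm instance.
// warmHitRatio is the share of requests served warm, between 0 and 1.
function expectedLatencyMs(warmHitRatio: number, coldStartMs: number, execMs: number): number {
  if (warmHitRatio < 0 || warmHitRatio > 1) {
    throw new RangeError("warmHitRatio must be in [0, 1]");
  }
  // Every request pays execMs; only cold requests also pay the cold start.
  return execMs + (1 - warmHitRatio) * coldStartMs;
}

// Instances kept warm but not serving traffic still consume resources.
function idleInstances(poolSize: number, busyInstances: number): number {
  return Math.max(0, poolSize - busyInstances);
}

// Assumed example: 75% warm hits, 1000 ms cold start, 20 ms execution.
const mixedLatencyMs = expectedLatencyMs(0.75, 1000, 20); // 270
const wastedInstances = idleInstances(10, 4);             // 6
```

Raising the warm-hit ratio lowers latency only by enlarging the pool, which raises the idle count: the mitigation shifts cost rather than removing it.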

This is the challenge Cloudflare set out to solve with its V8 isolate architecture—by reimagining how lightweight, secure, and instantly-executable environments could be deployed globally at the edge. We’ll now explore how that architecture bypasses the cold start problem almost entirely.

The Power of V8 Isolates: Millisecond-Scale Startup and Massive Multi-Tenancy

Unlike traditional containerized architectures that incur substantial overhead from spinning up operating system processes and containers, Cloudflare’s V8 isolate architecture takes a fundamentally different approach—one that dramatically redefines what’s possible in terms of startup latency, efficiency, and scalability at the edge.

At the core of this innovation is the V8 JavaScript engine, originally built by Google for Chrome and now widely adopted to power high-performance serverless and edge computing platforms. This engine provides a runtime capable of executing lightweight, sandboxed environments known as isolates.

Instant Startup with No Containers

When a request hits Cloudflare’s infrastructure, there’s no need to start a new OS-level process or container. The V8 engine is already running, and instead of spawning a new container, it simply instantiates a new isolate within itself. This takes a few milliseconds at most, orders of magnitude less than a container cold start.

This ultra-fast initialization means that cold starts are virtually eliminated—requests can be served almost instantaneously, without any of the usual performance penalties seen in containerized or VM-based architectures.
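The idea of an already-running engine that creates isolates on demand can be modeled in a few lines. The sketch below is a conceptual toy: `ToyEngine` and `ToyIsolate` are hypothetical names and do not correspond to V8's or Cloudflare's actual APIs. It only shows that serving a new script means creating an in-process object rather than a new OS process.

```typescript
// Conceptual model only: hypothetical classes, not V8's or Cloudflare's API.
type Handler = (input: string) => string;

class ToyIsolate {
  readonly scriptId: string;
  private readonly handler: Handler;

  constructor(scriptId: string, handler: Handler) {
    this.scriptId = scriptId;
    this.handler = handler;
  }

  run(input: string): string {
    return this.handler(input);
  }
}

class ToyEngine {
  private readonly isolates = new Map<string, ToyIsolate>();
  private readonly scripts: Map<string, Handler>;
  created = 0; // how many isolates have been instantiated so far

  constructor(scripts: Map<string, Handler>) {
    this.scripts = scripts;
  }

  // The engine process is already running, so the first request for a script
  // only creates a lightweight in-process object; later requests reuse it.
  dispatch(scriptId: string, input: string): string {
    let isolate = this.isolates.get(scriptId);
    if (!isolate) {
      const handler = this.scripts.get(scriptId);
      if (!handler) throw new Error(`unknown script: ${scriptId}`);
      isolate = new ToyIsolate(scriptId, handler);
      this.isolates.set(scriptId, isolate);
      this.created++;
    }
    return isolate.run(input);
  }
}

const engine = new ToyEngine(
  new Map<string, Handler>([
    ["tenant-a", (s) => s.toUpperCase()],
    ["tenant-b", (s) => s.split("").reverse().join("")],
  ]),
);
engine.dispatch("tenant-a", "hi");  // creates the isolate for tenant-a
engine.dispatch("tenant-a", "yo");  // reuses the existing isolate
engine.dispatch("tenant-b", "abc"); // creates a second isolate in the same process
```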

Lightweight, Scalable Multi-Tenancy

A single V8 engine can run hundreds or even thousands of isolates concurrently, all within the same OS process. Each isolate is fully sandboxed—meaning it has no shared memory and cannot interfere with the execution or data of any other isolate—yet they share the same underlying runtime.

This architectural design brings several major advantages:

- Minimal resource usage: Unlike containers, which require memory, file systems, and sometimes even dedicated CPU cores, isolates consume a fraction of those resources.

- Rapid context switching: Since isolates live within a single process, the costly overhead of inter-process context switching is avoided. This leads to faster execution and lower latency when switching between workloads.

- Massive tenant support: Cloudflare can run thousands of tenant workloads on a single machine at the edge. Each customer’s function spins up and shuts down with virtually no delay or cost, allowing elastic, per-request execution across their global network.
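The density argument in the list above comes down to simple division. The sketch below compares how many instances fit in a machine's memory under two assumed per-instance footprints. The 64 GiB server, 128 MiB container, and 4 MiB isolate figures are illustrative assumptions, not published numbers, and the model ignores the shared runtime's own overhead.

```typescript
// How many instances fit in a machine's memory at a given per-instance footprint.
function instancesPerMachine(machineMemoryMb: number, perInstanceMb: number): number {
  if (perInstanceMb <= 0) throw new RangeError("perInstanceMb must be positive");
  return Math.floor(machineMemoryMb / perInstanceMb);
}

// All figures below are assumptions chosen only to illustrate the arithmetic.
const machineMemoryMb = 64 * 1024;                                   // assumed 64 GiB server
const containerCount = instancesPerMachine(machineMemoryMb, 128);    // 512
const isolateCount = instancesPerMachine(machineMemoryMb, 4);        // 16384
const densityGain = isolateCount / containerCount;                   // 32
```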

Built for Edge Performance at Global Scale

For a company like Cloudflare, which operates across hundreds of edge locations globally and serves millions of requests per second, this kind of performance isn’t just desirable; it’s essential. Running each customer function in a traditional container or VM would be prohibitively resource-intensive and inefficient.

The V8 isolate model was explicitly designed to solve this problem: to enable secure, isolated execution of arbitrary code, at scale, on every machine at the edge, while maintaining blazing-fast startup times and near-zero latency overhead.

This architecture is what enables Cloudflare Workers to support edge-native serverless deployments that feel indistinguishable from code running locally—without the cost, complexity, and delay that comes with containers.

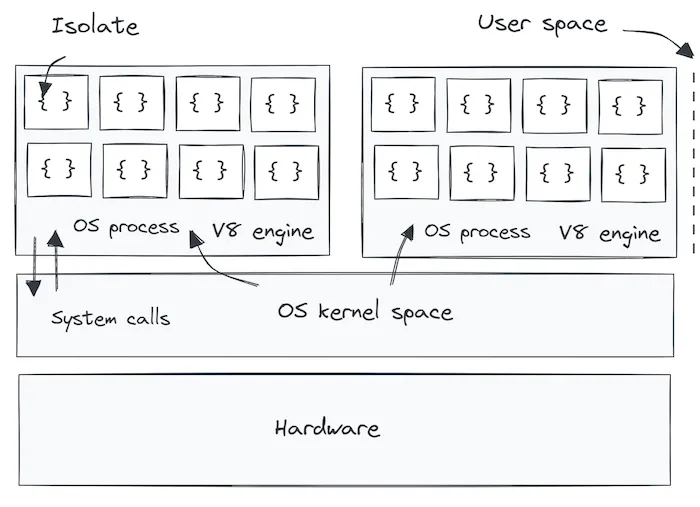

Understanding the Separation: User Space vs. Kernel Space in Operating Systems

To ensure both performance and security, modern operating systems follow a fundamental architectural principle: the separation of user space and kernel space. This distinction lies at the heart of how systems operate safely and efficiently.

At the core of the system lies the kernel space—a privileged area where the operating system’s most critical and sensitive operations take place. This includes managing low-level hardware communication, process scheduling, memory allocation, and handling input/output (I/O) operations. The kernel has full, unrestricted access to the hardware, which is why it must remain protected from external interference.

Surrounding this core is the user space—the environment in which everyday applications like web browsers, code editors, and games operate. These applications do not have direct access to the system’s hardware or kernel-level operations. Instead, they must request services—like reading a file or allocating memory—by invoking system calls, which are carefully mediated by the kernel.

This architectural split serves several vital purposes:

- Security: By isolating user applications from the kernel, the system ensures that even if an application misbehaves or is compromised, it cannot directly corrupt or access critical OS components or hardware.

- Stability: Faults in user space applications are confined to that space. A crashing app won’t take down the entire system.

- Efficiency: The kernel can be finely tuned to manage resources and schedule processes optimally, while user space applications can focus on business logic and user interaction.

For example, when a browser wants to read data from disk, it doesn’t directly interact with the storage hardware. Instead, it sends a system call to the kernel, which performs the I/O operation and returns the result. This controlled flow ensures the system remains secure and well-regulated.
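The same flow can be seen from application code. The sketch below runs under Node.js (not inside a Worker, which has no local file system) and uses only Node's standard `fs`, `os`, and `path` modules. The function name `roundTrip` is an illustrative assumption. Each file operation is handed to the kernel through system calls; the application never touches the storage hardware itself.

```typescript
import { mkdtempSync, readFileSync, rmSync, writeFileSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// User-space code never touches the disk directly. Each call below is passed by
// the runtime to the kernel through system calls (for example open, read, and
// write on Linux), and the kernel performs the actual I/O.
function roundTrip(contents: string): string {
  const dir = mkdtempSync(join(tmpdir(), "syscall-demo-"));
  const file = join(dir, "data.txt");
  try {
    writeFileSync(file, contents, "utf8"); // the kernel writes on our behalf
    return readFileSync(file, "utf8");     // the kernel reads on our behalf
  } finally {
    rmSync(dir, { recursive: true, force: true }); // remove the temporary directory
  }
}
```

On Linux, running such a script under a tracing tool like `strace` makes the individual system calls visible.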

Understanding this division becomes especially important when building or optimizing systems that interact closely with the OS, such as writing performant distributed services, building custom runtimes, or implementing sandboxed environments like Cloudflare’s V8 isolates. In these cases, knowing how and when code transitions from user space to kernel space—and the overhead it can introduce—can significantly influence system performance and architecture design.

Inside the V8 Engine: Isolates, User Space, and OS Interaction

At the heart of Cloudflare’s edge serverless architecture lies the V8 engine, a high-performance JavaScript and WebAssembly runtime originally developed by Google. This engine doesn’t operate in isolation; it sits in the user space of the operating system, managing code execution while interfacing with the underlying kernel space through system calls.

When an application processes a request, the V8 engine creates and manages isolates: lightweight, sandboxed execution contexts. These isolates are designed to run independent workloads without sharing state or memory. While each isolate executes user-defined business logic within the user space, operations that require hardware access, such as network I/O or file access, are passed to the OS kernel via system calls. This structured interaction ensures safety, privilege separation, and efficient execution of asynchronous, I/O-bound tasks through event loops.

Each instance of the V8 engine operates as a user-space process, complete with its own process ID and strict memory boundaries defined by the operating system. This adds an extra layer of security, ensuring that any failures or misbehaviors are contained within that specific process. Within this process, the V8 engine manages and distributes memory to the isolates it hosts.

As more isolates are created—for example, to serve requests from different tenants or applications—the V8 engine may request additional memory from the OS. Despite sharing the same OS process, each isolate is granted a logically distinct memory space. This memory isolation is rigorously enforced by the V8 engine itself, which ensures that one isolate cannot access the data, code, or resources of another.

To maintain this strict separation, each isolate maintains its own heap, a space where its JavaScript objects, functions, closures, and other runtime data are stored. Moreover, garbage collection (GC) is performed independently for each isolate. This localized garbage collection avoids cross-isolate interference and contributes to predictable performance and memory usage.
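A toy model can make the per-isolate heap idea tangible. In the sketch below, `ToyHeap` is a hypothetical class, not part of V8. Each heap has its own size limit and its own collection pass, and object size is approximated by string length purely for illustration.

```typescript
// Conceptual model only: a hypothetical per-isolate heap, not V8's API.
// Object size is approximated by string length for illustration.
class ToyHeap {
  private readonly objects = new Map<string, string>();
  private readonly limit: number;
  private used = 0;

  constructor(limit: number) {
    this.limit = limit;
  }

  // Store a value, refusing the allocation if it would exceed this heap's limit.
  allocate(key: string, value: string): void {
    const previousSize = this.objects.get(key)?.length ?? 0;
    const next = this.used - previousSize + value.length;
    if (next > this.limit) throw new RangeError("heap limit exceeded");
    this.objects.set(key, value);
    this.used = next;
  }

  read(key: string): string | undefined {
    return this.objects.get(key);
  }

  // Collection touches only this heap: everything not in liveKeys is freed.
  collect(liveKeys: Set<string>): number {
    let freed = 0;
    for (const [key, value] of this.objects) {
      if (!liveKeys.has(key)) {
        this.objects.delete(key);
        freed += value.length;
      }
    }
    this.used -= freed;
    return freed;
  }
}

const heapA = new ToyHeap(16);
const heapB = new ToyHeap(16);
heapA.allocate("session", "tenant-a-data");
heapB.allocate("session", "tenant-b-data");
const freedFromA = heapA.collect(new Set<string>()); // frees only heapA's objects
```

Collecting `heapA` leaves `heapB` untouched, which mirrors the independent garbage collection described above.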

This design is not merely a technical elegance; it’s a practical necessity. Cloudflare hosts thousands of tenants across its global edge network, running potentially untrusted code side by side on the same physical machine. Without robust isolate-level sandboxing and memory safety, such multi-tenant environments would be vulnerable to data leaks and performance bottlenecks.

Thus, the V8 engine, its isolates, and their tight coordination with the operating system offer a powerful, scalable, and secure foundation for running serverless workloads, one that starts workloads in a few milliseconds or less and supports massive multi-tenancy at the edge.

Limitations of the V8 Isolate Architecture

While the V8 isolate architecture presents a groundbreaking solution for executing low-latency, multi-tenant workloads at the edge, it’s not without its constraints. The architecture is highly optimized for speed and lightweight execution, but those optimizations come with trade-offs—particularly when it comes to language support, security isolation, and suitability for heavy workloads.

A significant limitation lies in the language compatibility of the V8 engine. The isolate model is built around the V8 runtime, which natively supports JavaScript and WebAssembly (Wasm). This means that only code written in JavaScript or in languages that can be compiled to Wasm—such as Go, Rust, or C++—can be executed within an isolate. In contrast, traditional containerized or VM-based serverless platforms (like AWS Lambda, Azure Functions, or Google Cloud Functions) offer broad language support out of the box, ranging from Python and Java to Ruby and .NET. For many developers or enterprise teams, especially those locked into legacy codebases or language-specific ecosystems, this can be a dealbreaker.
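To illustrate what "compiled to Wasm" means from the JavaScript side, the sketch below loads a tiny hand-assembled WebAssembly module that exports an `add` function and calls it through the standard `WebAssembly` JavaScript API. It is meant to run under Node.js or a browser. The inline byte array stands in for the output of a real compiler, and how a module is bundled and loaded on a particular edge platform is platform-specific and not shown here.

```typescript
// Bytes of a minimal WebAssembly module exporting add(a: i32, b: i32): i32.
// In practice these bytes would be produced by a compiler (e.g., from Rust or C++).
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,                   // magic + version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,             // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00,                                           // one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,             // export "add" = func 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // body: local.get 0, local.get 1, i32.add
]);

// Synchronous compilation is acceptable for a module this small.
const wasmModule = new WebAssembly.Module(wasmBytes);
const wasmInstance = new WebAssembly.Instance(wasmModule, {});

// Exports are untyped at the boundary, so the signature is asserted here.
const add = wasmInstance.exports.add as (a: number, b: number) => number;
const sum = add(2, 3);
```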

Another consideration is isolation and compliance. Containers isolate workloads at the OS process and namespace level, and virtual machines isolate them at the hypervisor level, so each workload runs in its own separate OS process or virtual environment. This matters for industries such as finance, government, and healthcare, where security standards and compliance regimes (e.g., HIPAA, PCI-DSS) are often interpreted as calling for strong workload segregation. The V8 isolate model, while extremely efficient, operates within a single process, relying on the V8 engine to enforce memory and runtime isolation between tenants. Although robust, this form of sandboxing is generally considered a weaker boundary than full OS-level or hypervisor-level isolation, which can make isolates a harder fit for highly regulated environments.

The architecture also falls short when it comes to compute-intensive or long-running tasks. Isolates are intentionally constrained—they are engineered for short-lived, I/O-bound operations where low startup latency is critical. To preserve responsiveness and avoid monopolizing system resources, platforms that use isolates typically impose strict CPU and memory limits. This makes isolates ill-suited for workloads that require prolonged computation, heavy data processing, or persistent memory usage. Applications like machine learning training, batch processing, or video transcoding are better served by more traditional deployment models that offer more generous and configurable resource allocations.
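The effect of a strict CPU limit can be sketched as a budget check between units of work. The function below is a conceptual illustration with hypothetical names. It checks the budget cooperatively between steps, whereas a real runtime can interrupt code while it is running, and the clock is injected so the behavior is deterministic.

```typescript
// Conceptual sketch of a per-request time budget; names are hypothetical.
interface BudgetResult {
  completed: number; // how many steps finished
  aborted: boolean;  // true if the budget ran out before all steps ran
}

// Runs steps in order and stops once elapsed time reaches budgetMs.
// `now` is injected so the example does not depend on wall-clock timing.
function runWithBudget(
  steps: Array<() => void>,
  budgetMs: number,
  now: () => number,
): BudgetResult {
  const start = now();
  let completed = 0;
  for (const step of steps) {
    if (now() - start >= budgetMs) {
      return { completed, aborted: true };
    }
    step();
    completed++;
  }
  return { completed, aborted: false };
}

// Simulated clock: each step "costs" 4 ms against a 10 ms budget.
let fakeTimeMs = 0;
const clock = () => fakeTimeMs;
const work = () => {
  fakeTimeMs += 4;
};
const budgetResult = runWithBudget([work, work, work, work], 10, clock);
// Three steps run (0, 4, and 8 ms elapsed are under budget); the fourth is refused.
```

A short, I/O-bound handler finishes well inside such a budget, while long computations like transcoding would be cut off, which is why those workloads suit other deployment models.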

While V8 isolates shine in scenarios requiring ultra-low-latency execution, rapid scaling, and high multi-tenancy at the edge, they are not a one-size-fits-all solution. Developers and architects must weigh these limitations against their specific workload needs, regulatory requirements, and operational expectations when choosing a deployment strategy.

Final Thoughts: The Right Tool for the Right Workload

The evolution of serverless computing, whether through V8 isolates, microVMs, or traditional containers, marks a pivotal shift in how we design and deploy applications. Each architecture offers distinct advantages, from the lightning-fast startup times of V8 isolates to the strong isolation guarantees of VMs and the flexibility of containers.

But no solution comes without trade-offs.

V8 isolates are a triumph of speed and scalability, enabling near-instantaneous execution of lightweight, event-driven workloads at the edge. They are ideal for modern web applications, API gateways, A/B testing, and edge personalization at scale. Yet, for enterprises needing language flexibility, deep isolation, or the ability to run long-lived and compute-heavy workloads, containers and VMs remain indispensable.

The key lies in understanding the strengths and limitations of each model and architecting solutions accordingly. There is no universal answer—only the best tool for the job.

As you explore serverless strategies, benchmark, test, and iterate. Leverage isolates where millisecond-level latency and cost efficiency matter most. Choose containers or VMs when you need broader language support, enterprise-grade security, or high-throughput processing.

Architecting at the edge is no longer just about speed; it’s about precision.