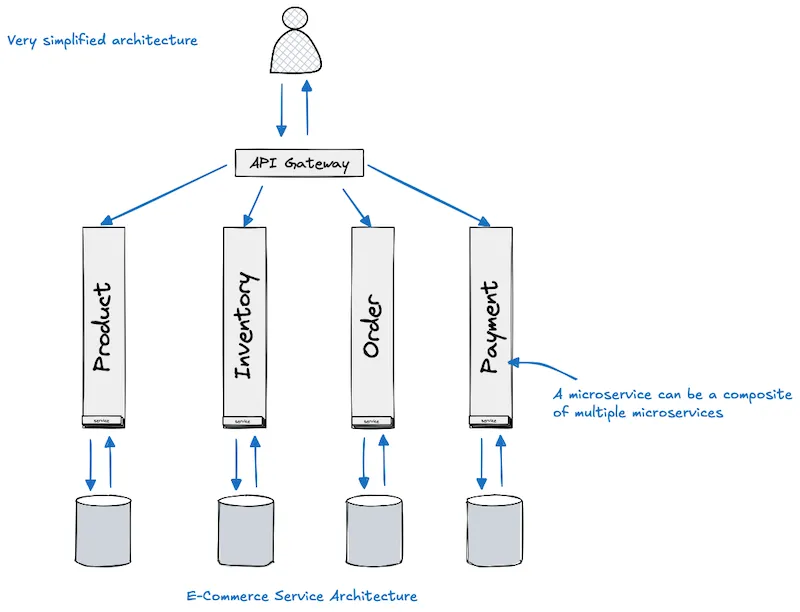

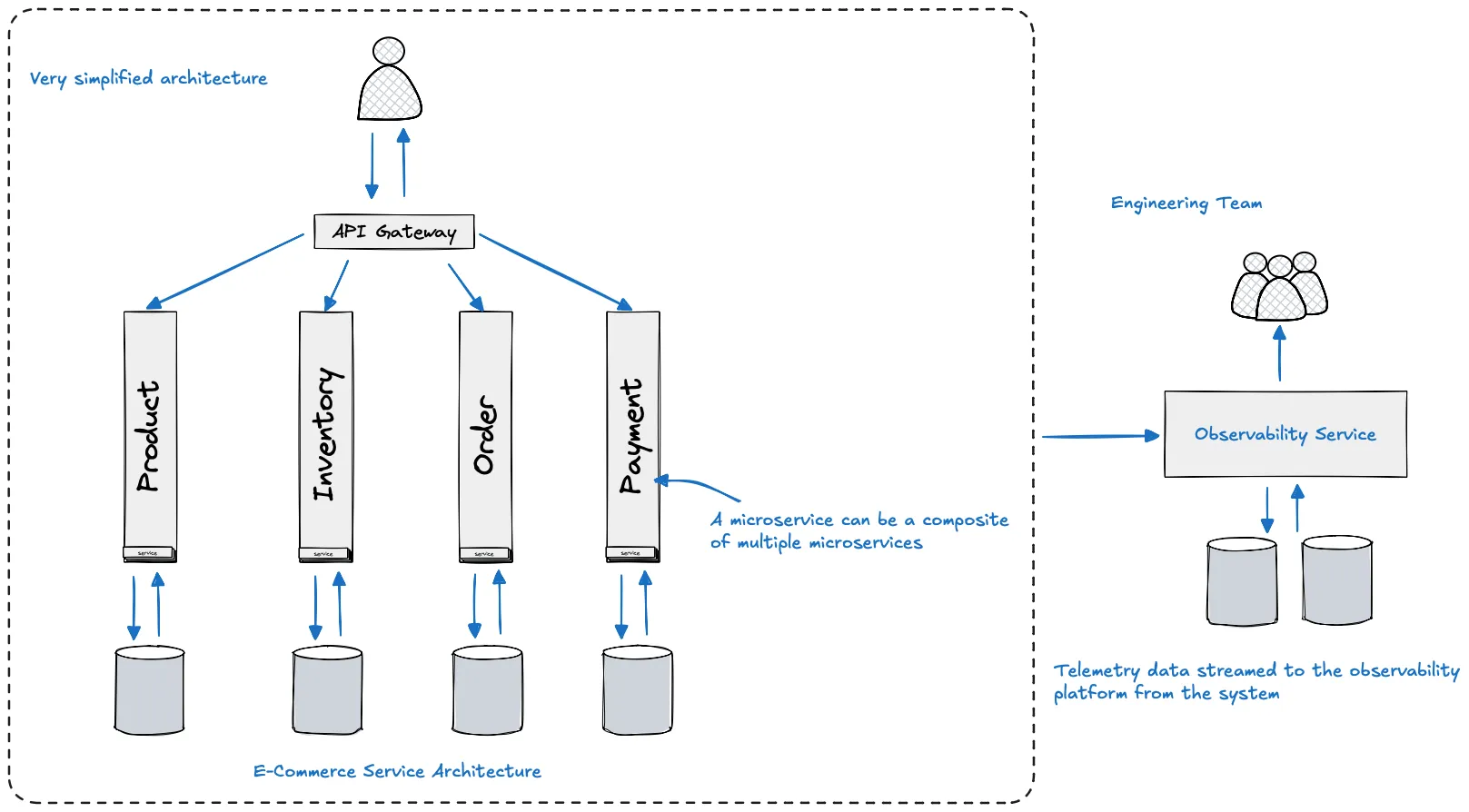

Picture operating a large-scale, globally distributed e-commerce platform—where customer interactions are powered by a dynamic backend of interconnected microservices. These services are modularly designed to encapsulate distinct business capabilities such as the Product Catalog Service, Inventory Service, Order Management Service, and Payment Processing Service. In reality, each of these services might internally consist of even smaller microservices, making the architecture both powerful and inherently complex.

This layered architecture offers flexibility, scalability, and modular ownership—but it also introduces significant operational challenges, especially under peak loads. Imagine a high-traffic scenario—say, during a seasonal sale—where users are adding products to their carts and orders are being created rapidly. Initially, everything seems smooth. The system scales, services communicate, and transactions are processed seamlessly.

Then, something breaks.

Suddenly, a notable percentage of customers start experiencing payment failures. The cart-to-checkout flow begins to degrade. Support tickets spike. Business KPIs like conversion rate and revenue per minute drop.

The challenge? The system isn’t “down”—but it’s not healthy either. Users feel the latency. Transactions silently fail. And with dozens of services involved, diagnosing the root cause is like chasing shadows in a fog.

How do you determine whether it’s the Payment Service? Or perhaps a downstream issue with the Order Service, or an overloaded Inventory check? Could the problem be caused by a network timeout between two microservices? Or maybe a database latency spike affecting a shared data store?

This is the moment when end-to-end observability becomes mission-critical.

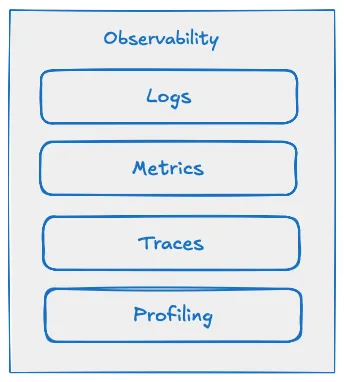

Observability provides deep, real-time visibility into the entire system, empowering teams to detect anomalies, pinpoint bottlenecks, and trace issues across distributed services. It goes beyond traditional monitoring by enabling:

- Correlated logs to diagnose what happened

- Metrics to quantify performance degradation

- Distributed traces to follow the lifecycle of a single request across services

- Profiling and telemetry to identify hidden inefficiencies

In modern systems, observability isn’t a luxury—it’s an essential architecture capability. When implemented well, it doesn’t just help resolve incidents—it builds trust, accelerates recovery, and continuously improves system resilience.

Let’s explore how observability architecture can be designed to serve both engineering teams and the business at scale.

Understanding Observability

Observability is the capability to understand the internal state of a system based solely on the outputs it produces. In the context of distributed systems—where individual components span services, regions, and cloud boundaries—observability becomes essential to ensure system reliability, debuggability, and performance.

At its core, observability enables engineering and operations teams to detect, diagnose, and resolve issues quickly—often before they impact end users. It allows us to move beyond surface-level monitoring and gain deep visibility into the behavior, health, and interactions of system components in real time.

In complex systems like global e-commerce platforms, content streaming services, or social networks, where workloads are deployed across multiple cloud regions and availability zones, having a comprehensive understanding of the system at any moment is non-negotiable. Without observability, we’re effectively operating in the dark—blind to issues until they cascade into user-facing failures.

A highly observable system makes it easy to answer tough questions like:

- What service is causing latency in checkout?

- Why did a specific request fail?

- Where did the system bottleneck originate?

Ultimately, observability is the foundation for delivering on non-functional requirements like:

- Reliability — Can the system consistently meet user expectations?

- Availability — Is the system up and accessible when needed?

- Scalability — Can the system grow and contract based on demand?

- Maintainability — Can teams easily make sense of the system and resolve problems?

To achieve all this, we need real-time insights into production. And that begins with a powerful enabler of observability: telemetry.

What Is Telemetry?

Telemetry is the automated collection, transmission, and aggregation of meaningful data from all parts of a system for analysis and monitoring. It is the lifeblood of observability.

Telemetry provides the signals needed to detect and understand system behavior—especially in distributed environments where a single failure point may affect multiple services. By sending real-time or periodic data from all microservices to a centralized observability platform, teams gain the visibility required to understand and manage complexity at scale.

The purpose of telemetry is to create a feedback loop: systems emit data, platforms collect and analyze it, and humans or machines take action based on those insights.

What Kind of Data Does Telemetry Provide?

Telemetry involves three core signal types, often referred to as the pillars of observability:

- Logs — Textual records of discrete events, system messages, and debug information.

- Metrics — Numeric measurements over time (e.g., CPU usage, request counts, error rates).

- Traces — Representations of the journey a request takes through the system, capturing context and timing across services.

Together, these signals help answer critical questions:

- Logs tell us what happened and when.

- Metrics show us how often and how severe.

- Traces explain where and why something failed or slowed down.

Advanced telemetry may also include:

- Profiles — Snapshots of memory, CPU, and thread usage.

- Events — High-level, time-sensitive state changes in the system.

Telemetry data is foundational for:

- Monitoring system health

- Alerting on anomalies

- Troubleshooting incidents

- Forecasting infrastructure needs

- Auditing and compliance

By instrumenting systems with telemetry at every layer—application, platform, network, and infrastructure—organizations can ensure that when something goes wrong, the system tells them exactly where, why, and how to fix it.

In the next section, we’ll take a closer look at each signal type—logs, metrics, and traces—and how they collectively shape a highly observable system.

Telemetry Data: Logs, Metrics, and Traces

At the heart of observability are three foundational data types: logs, metrics, and traces. These telemetry signals work together to create a real-time narrative of system behavior, enabling teams to identify root causes, assess performance, and continuously improve their services.

Each plays a distinct role in helping teams understand what happened, where it happened, and why.

Logs

Logs are the most granular and narrative-rich form of telemetry data. They capture discrete, timestamped events that occur within an application or system—such as error messages, state changes, input/output operations, and execution paths.

When writing application code, developers intentionally insert log statements to provide visibility into the system’s internal state at key points in the logic. These logs serve two major purposes:

During Development

- Logs provide immediate feedback on the behavior of the codebase.

- They assist in debugging unexpected conditions and understanding application flow.

- Developers use different log levels (e.g.,

INFO,DEBUG,WARN,ERROR,FATAL) to differentiate between routine messages and critical failures.

Example:

[INFO] Order submitted successfully for userId=12345[ERROR] Payment gateway timeout for transactionId=789package main

import ( "errors" "fmt" "log")

type Dish struct { ID int64 Name string}

type Restaurant struct { ID int64 Menu []Dish}

var restaurantStore = map[int64]*Restaurant{}

func deleteDishFromMenu(restaurantID, dishID int64) ([]Dish, error) { restaurant, ok := restaurantStore[restaurantID] if !ok { return nil, fmt.Errorf("Restaurant with id: %d not found", restaurantID) }

var dishToRemove *Dish for i, dish := range restaurant.Menu { if dish.ID == dishID { dishToRemove = &restaurant.Menu[i] // Remove the dish from the menu restaurant.Menu = append(restaurant.Menu[:i], restaurant.Menu[i+1:]...) break } }

if dishToRemove == nil { return nil, fmt.Errorf("Dish with id: %d not found in the menu", dishID) }

log.Printf("Dish to be removed from the menu: %+v\n", *dishToRemove) // In a real application, you might persist the change here return restaurant.Menu, nil}In Production

- Logs offer critical insight into how the system is behaving in real-world conditions.

- They are essential for troubleshooting incidents, auditing workflows, and verifying transactional correctness.

- Centralized logging systems (e.g., ELK Stack, Loki, Splunk) ingest and index logs, enabling teams to search, correlate, and visualize events across services and systems.

Key Characteristics of Effective Logs

- Structured: Logs should use a consistent, machine-readable format (e.g., JSON) to support easy parsing and analysis.

- Contextual: They should include metadata such as request IDs, user IDs, service names, and environment details to support traceability.

- Actionable: Logs should focus on events that provide value—e.g., state transitions, warnings, failures, or notable performance changes.

Why Logs Matter in Observability

- They provide the narrative thread behind metrics and traces.

- They are indispensable for root cause analysis when automated systems detect an anomaly.

- When correlated with other telemetry data, logs complete the picture of what happened and why.

In short, logs are the storyline of your system. Without them, you’re flying blind. With them, you gain precision, accountability, and the ability to diagnose complex failures quickly.

Metrics

Metrics are numerical representations of data points collected over intervals of time. They help us quantitatively assess the health, performance, and efficiency of a system. In the realm of observability, metrics act as the high-level indicators that help teams detect trends, anomalies, and bottlenecks across distributed systems.

Metrics are especially useful for time-series analysis, enabling you to visualize how system behavior evolves. They provide the “pulse” of a system—aggregating values like CPU usage, request rates, and error counts to inform engineers about what’s happening at scale, often in near real-time.

Common Types of Metrics Tracked in Distributed Systems

- Latency / Response Times: Measures the time it takes to complete a request.

- Throughput: Indicates how many requests are handled per second or minute.

- Error Rates: Tracks the percentage of failed requests (e.g., 5xx errors).

- Resource Utilization:

- CPU usage

- Memory consumption

- Disk I/O

- Network bandwidth

- Availability: Often represented as a percentage of uptime over a given period.

- User Events: Application-specific metrics such as purchases completed, files uploaded, or items added to cart.

Why Metrics Matter

Imagine you’ve just deployed a new application module or feature into production.

- Logs may tell you how the internal code behaves during execution.

- Metrics, however, tell you how your system behaves as a whole under real-world usage—particularly from a resource and performance standpoint.

With system metrics, you can:

- Understand how resource-intensive a feature is

- Identify performance regressions after a deployment

- Gauge capacity limits across environments (on-prem, VM, cloud-native)

- Detect early warning signs of infrastructure stress

- Inform scaling decisions and autoscaling policies

Operational and Business Insights from Metrics

Beyond technical health, metrics also provide insights into:

- User engagement trends (e.g., spike in signups or conversions)

- Business SLAs and SLOs (e.g., 99.9% availability targets)

- Regression impact analysis following code changes

- Seasonal or hourly usage patterns that inform business decisions

Above: A Grafana dashboard visualizing production metrics from multiple services.

Flexible and Extensible by Design

There are no hard rules about what constitutes a metric. Any time-series data point that helps you observe, alert, or decide can be captured as a metric. These might include:

- Queue sizes

- API call counts

- Cache hit ratios

- Revenue per minute

- Latency percentiles (p50, p95, p99)

Modern telemetry tools like Prometheus, Grafana, Datadog, and New Relic allow engineers to capture, store, and query metrics at scale, and to visualize them in real-time dashboards that support proactive incident response and data-driven engineering.

Metrics enables the observation of behavior over time, providing a foundation for dashboards, alerts, SLAs, and performance optimization. They’re fast, lightweight, and highly effective for understanding systemic behavior at both macro and micro levels.

In the next section, we’ll explore traces, which help connect the dots between systems and provide the context behind metrics.

Traces

Traces are a cornerstone of distributed system observability. They offer a visual and contextual narrative of how a single request flows through various components of a system—from entry to exit—enabling teams to pinpoint exactly where latency, failures, or bottlenecks occur.

In modern, service-oriented architectures—especially those built on microservices—traces provide end-to-end visibility across numerous interdependent components. Each user request might traverse multiple services: starting at the API Gateway, passing through load balancers, invoking several backend services, querying databases, interacting with caches, and even calling external APIs. Without tracing, it’s nearly impossible to reconstruct the path of a single request across these moving parts.

What Is a Trace?

A trace is a collection of spans—each span representing a unit of work performed in a component or service. These spans are stitched together with metadata that shows the sequence, duration, and hierarchical relationship between operations.

For example, when a user attempts to purchase a product:

- The request might begin at the

ProductCatalogService. - It proceeds to

InventoryServiceto validate stock. - It then calls

PricingServiceto confirm pricing rules. - Finally, it reaches

PaymentServiceto complete the transaction.

Each of these services may be running in different containers, availability zones, or even regions. A trace captures this entire flow, making it easy to:

- Visualize the end-to-end path of a request.

- Identify where time is being spent across services.

- Detect service latencies, failures, and retries.

- Correlate performance issues with specific components.

Why Traces Matter

- Diagnose cross-service latency: Understand where response time is being consumed—whether it’s in database queries, third-party API calls, or synchronous service calls.

- Identify failures and retries: Spot patterns like cascading failures or retry storms that can be difficult to observe through logs alone.

- Reveal architecture insights: See how services are actually connected in runtime—not just how they’re documented.

Observability Without Blind Spots

In a truly observable system, there should be no blind spots. Traces fill in the gaps that logs and metrics alone cannot address. They complement:

- Logs, which help diagnose what happened within a service.

- Metrics, which summarize behavior across time and across systems.

- Traces, which illuminate the journey and context of individual transactions.

This trifecta—logs, metrics, and traces—creates a comprehensive view of system health, behavior, and performance.

Real-World Tools for Tracing

Several open-source and commercial platforms support distributed tracing:

- OpenTelemetry (an emerging open standard for observability)

- Jaeger

- Zipkin

- Honeycomb

- Datadog APM

- AWS X-Ray

- New Relic

These tools provide span visualization, heatmaps, and correlation engines that help teams track down outliers and optimize service interactions.

What Comes Next: Continuous Profiling

While logs, metrics, and traces provide critical operational insights, there’s another key component in the observability stack: Continuous Profiling. This gives teams low-overhead, always-on insight into how code is executing inside processes—unlocking visibility into CPU usage, memory consumption, and runtime hotspots without waiting for incidents.

Let’s explore how profiling completes the observability picture.

Continuous Profiling

Continuous Profiling is the fourth pillar of modern observability. While logs, metrics, and traces provide rich telemetry at the application and system level, they often fall short of revealing the underlying code-level performance characteristics that drive resource usage and efficiency in production. That’s where continuous profiling steps in.

What Is Continuous Profiling?

Continuous profiling is the ongoing, low-overhead collection of fine-grained performance data from running applications in production environments. It enables teams to observe how CPU, memory, disk I/O, and other system resources are being consumed—down to the function or line of code.

It brings the depth and precision of traditional developer tools like profilers and benchmarks into live, distributed systems—without the high costs or risks typically associated with production-level introspection.

How It Relates to Developer Profiling Tools

To better understand continuous profiling, it helps to compare it to familiar concepts:

Code profiling is a local, developer-side practice used to measure how long parts of your application take to execute, how much memory they use, and which functions consume the most resources. This is typically done before committing changes, during testing or pre-production optimization.

Microbenchmarking, on the other hand, focuses even more narrowly—targeting specific functions or blocks of logic in isolation, often to verify performance in controlled environments.

While both are essential during development, they offer only a snapshot in time—often disconnected from the real-world workloads your application will experience in production.

Continuous profiling extends these practices into runtime operations. It collects detailed telemetry directly from live systems, under real load, and over time. This creates a real-time performance map of your entire codebase across environments, services, and infrastructure layers.

What Continuous Profiling Measures

Continuous profilers run in the background, typically with negligible overhead, and collect data such as:

- CPU consumption per function, thread, or service

- Memory allocation patterns (including leaks or excessive churn)

- I/O behavior (e.g., disk latency, buffer flushing)

- Call durations and function execution times

- Kernel vs. user-space activity

- Thread contention or deadlocks

- Garbage collection frequency and impact

This enables teams to answer questions like:

- What part of the codebase is consuming the most CPU over time?

- Are memory leaks emerging under specific workloads?

- Why is this service behaving differently in production than in staging?

- How does the performance of this function scale under varying loads?

Why It Matters for Observability

The power of continuous profiling lies in its depth and continuity:

- Depth: Unlike metrics or traces, which offer summary views or sampling, continuous profiling drills into the internal runtime behavior of applications.

- Continuity: Instead of one-time snapshots, it captures data continuously over time, enabling historical comparisons, regression detection, and long-tail problem analysis.

This makes it ideal for identifying performance regressions, hot paths, and subtle inefficiencies that only emerge under real production traffic.

Complementing Telemetry Data

To put this in context:

- Logs show what happened in the code.

- Metrics show how often and how much.

- Traces show where requests went and how long they took.

- Continuous Profiling shows why resource usage behaves the way it does—at the code level.

When integrated with your telemetry stack, continuous profiling completes the observability picture. It enables teams to not only detect and diagnose issues faster, but also to proactively optimize code for cost, efficiency, and scalability.

Back To Our E-commerce Distributed Service Use Case

Let’s revisit our earlier scenario: a global-scale e-commerce platform architected as a suite of distributed microservices—each responsible for a critical piece of business functionality like product browsing, cart management, payment processing, order fulfillment, and notifications.

Now imagine a situation during a peak shopping event. Customers begin to experience delays or failures during checkout, particularly during the payment step. Revenue is impacted, user satisfaction drops, and the business needs answers—fast.

This is where a well-instrumented observability stack becomes mission-critical.

Step 1: Leverage Logs to Understand Code Behavior

The first step is to inspect logs generated by the PaymentService and any other services that touch the checkout flow. Are there any exceptions? Are third-party payment APIs returning errors or timing out?

Structured, context-rich logs will show us:

- If the payment requests are reaching the service at all

- Whether any recent deployments introduced anomalies

- How errors are being handled or retried

Logs answer the critical question: What happened at the code level?

Step 2: Use Metrics to Analyze System Health

Next, we turn to metrics to assess the system’s overall performance and health. Are we seeing:

- Elevated CPU or memory usage on payment-related services?

- Increased error rates or request latency?

- A drop in orders per minute or transaction success rates?

Resource metrics help us identify if the system is under stress and whether horizontal scaling (e.g., adding more instances) is required. Business-level metrics provide the high-level signal that something is wrong—like a sudden drop in purchases or a rise in abandoned carts.

Metrics answer the question: How is the system performing?

Step 3: Trace the Entire Flow with Distributed Traces

To understand where the breakdown is occurring within the entire flow—from clicking “Place Order” to receiving confirmation—we rely on traces.

Traces reveal:

- The sequence of service calls and how long each took

- Where latency or timeouts occurred

- Which service or component introduced a bottleneck

In our case, a trace might show that requests are stalling between the OrderService and PaymentService, or that the InventoryService is taking too long to respond—causing downstream queuing and retry storms.

Traces answer the question: Where is the latency or failure happening?

Step 4: Zoom In with Continuous Profiling

Once we’ve narrowed down the issue to a specific service or code path, continuous profiling gives us the deepest insight.

Profiling will tell us:

- Which function or method is consuming excess CPU or memory

- Whether a memory leak is degrading performance over time

- If a library dependency is behaving unpredictably under load

Unlike logs, metrics, or traces, continuous profiling gives us the code-level precision needed to make informed optimization decisions. For example, you may discover that a poorly written loop in the PaymentService is causing an exponential increase in CPU utilization under high concurrency.

Continuous profiling answers: Why is this code path inefficient or misbehaving?

Above: Visualizing observability across the request lifecycle, from the moment a user places an order to the successful (or failed) confirmation.

Why Observability is Non-Negotiable

Without observability, we’re left guessing.

- Logs give us the what

- Metrics give us the how much

- Traces give us the where

- Continuous profiling gives us the why

Together, these signals provide a multi-dimensional view of system behavior—bridging the gap between infrastructure, application, and code. This enables faster incident response, improved reliability, and a shared understanding across engineering, operations, and product teams.

Modern distributed systems are too complex and dynamic to rely on traditional monitoring alone. Observability isn’t a luxury—it’s an operational necessity.

In fast-paced environments where user trust and revenue are on the line, observability ensures you can detect, diagnose, and resolve issues before they impact customers.

This is how we build and operate resilient systems in the real world.

Observability-Driven Development

In today’s world of complex, distributed, cloud-native systems, observability is not an afterthought—it is a foundational design principle. Just as test-driven development (TDD) reimagined how we write code with testing top-of-mind, Observability-Driven Development (ODD) is emerging as a mindset that embeds observability considerations into the entire software development lifecycle—from design to deployment and beyond.

To ensure our systems remain understandable, operable, and reliable at scale, we must build observability in, not bolt it on.

The Modern Observability Stack

A robust observability approach requires multiple layers of tooling to capture logs, metrics, traces, and profiling data. These signals provide different but complementary perspectives on system behavior. Here are some widely adopted solutions:

- ELK Stack (Elasticsearch, Logstash, Kibana): A popular open-source solution for centralized log aggregation, search, and visualization.

- Prometheus: A time-series database designed for collecting and querying metrics with powerful alerting capabilities.

- Grafana: A flexible visualization tool used alongside Prometheus to build dashboards for real-time observability.

- Datadog, New Relic, Dynatrace: Commercial SaaS solutions offering end-to-end observability (logs, metrics, traces, dashboards, alerts, etc.).

- OpenTelemetry: An emerging open standard for collecting, processing, and exporting telemetry data from applications and infrastructure.

- Google Cloud Profiler, AWS X-Ray, Azure Monitor: Cloud-native profiling and tracing tools tailored for their respective platforms.

Each of these tools serves a distinct purpose, and together they form an observability fabric that supports developers, SREs, and DevOps teams in maintaining system health, understanding user impact, and continuously improving performance.

Embedding Observability Into the Software Development Lifecycle

Let’s explore how modern distributed services are built with observability as a first-class concern.

1. Designing with Observability in Mind

- Define what success and failure look like in terms of user experience and system behavior.

- Identify critical user journeys (e.g., product purchase, payment, file upload) and plan to trace them end-to-end.

- Instrument services with unique identifiers (e.g., request IDs, trace IDs) for correlation across layers.

At this stage, teams determine the signals they want to capture and what questions their telemetry data should be able to answer.

2. Instrumenting Early and Often

- Add structured logging to critical operations using consistent formats (e.g., JSON) and include contextual metadata (e.g., user ID, session ID, trace ID).

- Use metrics libraries to track business and operational KPIs (e.g., API latency, error rates, signups per minute).

- Leverage OpenTelemetry or other libraries to auto-instrument HTTP clients, gRPC calls, database queries, etc.

This proactive instrumentation ensures visibility is built-in, not added after something breaks.

3. Observability in CI/CD Pipelines

- Validate that logging, metrics, and tracing are working in staging environments before production rollout.

- Include telemetry smoke tests as part of integration testing.

- Monitor deployment health in real time (e.g., with deployment dashboards or canary metrics).

This ensures that observability is not fragile or forgotten during continuous integration and deployment.

4. Operationalizing Observability

- Define SLIs, SLOs, and SLAs that reflect what matters to the business and users.

- Set up dashboards and alerts to detect deviation from baseline performance or behavior.

- Use observability data to inform on-call responses, post-mortems, and capacity planning.

Observability isn’t just for debugging—it’s for driving better operational outcomes, informed product decisions, and organizational learning.

5. Feedback Loops for Continuous Improvement

- Use profiling insights to optimize hot code paths and reduce cloud costs.

- Analyze traces to simplify inter-service communication or identify redundant steps.

- Use metrics and logs to assess the impact of new features or deployments.

With robust observability in place, teams can evolve systems confidently, validate hypotheses quickly, and continuously raise the bar on performance and reliability.

Observability as a Cultural Shift

Observability-Driven Development goes beyond tooling—it’s a cultural and architectural shift:

- Developers write code with traceability in mind.

- Product managers define KPIs that engineering can measure directly.

- SREs and DevOps teams collaborate with developers to close the feedback loop from incident to insight.

In environments where software and systems never stand still, observability isn’t just a feature—it’s a capability. It empowers teams to move fast without sacrificing reliability, to resolve issues before they escalate, and to continuously improve how software behaves in the real world.

How Distributed Services Are Built With Observability at the forefront

In the development of modern distributed systems, observability is no longer an optional afterthought—it is a core design principle embedded from the very beginning of the software lifecycle. When we write code today, we do more than just implement business logic. We simultaneously incorporate observability instrumentation—signals that will help us understand, debug, and optimize the system once it is running in production.

Instrumentation involves embedding telemetry directly into the application through structured logs, metrics, trace context propagation, and sometimes even profiling hooks. A classic example is logging: developers strategically place logs to capture key events, error states, and context identifiers like request IDs. These logs are critical not just for debugging during development, but for gaining insights into application behavior in production environments.

As part of the development phase, it’s equally important to identify performance bottlenecks early. Developers often use micro-benchmarking to test the efficiency of specific functions and leverage profilers to understand CPU or memory consumption. This helps validate that the code not only works, but does so efficiently under typical loads. Beyond that, unit tests and integration tests ensure functional correctness, while static code analysis tools check for vulnerabilities, code duplication, and adherence to style guides. These practices create a stable, predictable foundation for writing robust and observable code.

When the code is pushed to a remote repository, a continuous integration (CI) pipeline is triggered. Here, the same checks executed locally are repeated, often enhanced with additional test suites and validations. CI systems validate builds, re-run static analysis, and execute security scans to maintain the health of the codebase. At this stage, observability hooks—such as metrics exporters or tracing libraries—should be in place and functional, ready for staging deployments.

Once the build passes CI, the code is deployed into a staging or pre-production environment. This is where performance testing begins in earnest. Load testing and stress simulations are applied to mimic peak production traffic. Engineers monitor system behavior using metrics dashboards—tracking latency, throughput, resource utilization, and error rates. These metrics help teams anticipate and resolve issues before they impact real users. Observability isn’t just about reacting to problems—it’s about predicting and preventing them.

Finally, the code is promoted to production. In this environment, real-time monitoring takes center stage. Logs, metrics, and traces are continuously streamed to centralized platforms like Grafana, Kibana, or Datadog. Tools like Sentry or Rollbar monitor for application errors, while alerting platforms notify teams if anomalies are detected—such as increased error rates or infrastructure saturation. At this level, continuous profiling tools add a deeper layer of insight, exposing inefficiencies or memory issues that may not be visible through higher-level telemetry alone.

Throughout this process, observability is not an isolated task—it’s a continuous, lifecycle-wide discipline. From code commit to production deployment, developers and operations teams work in unison to ensure that the system is not just available, but understandable, measurable, and debuggable. Observability becomes the lens through which reliability, performance, and customer experience are monitored and refined.

Building distributed services with observability in mind allows teams to move fast without losing visibility. It enables rapid root cause analysis, proactive performance tuning, and ultimately, greater confidence in delivering high-quality software at scale. In the world of microservices and cloud-native architectures, this level of transparency is not just beneficial—it’s essential.

The Last Mile of Reliability

In an era defined by complexity, velocity, and constant change, observability is no longer a luxury—it’s a necessity. It transforms the way we build, operate, and understand distributed systems. With the right observability practices in place, teams gain not just visibility into their infrastructure, but the ability to act with confidence, diagnose issues faster, and deliver more resilient software. It’s the foundation that empowers engineers to move quickly without sacrificing reliability.

Ultimately, observability is not just a toolset—it’s a mindset. It’s about designing systems that are transparent by default, building feedback loops that drive continuous improvement, and fostering a culture where insight fuels innovation. As systems grow more dynamic and interconnected, only those who can see clearly will lead boldly. So don’t just monitor. Don’t just react. Build with observability at the core—and lead with clarity, not chaos.