Introduction

In the world of distributed systems, communication is everything. As applications grow more modular and decentralized—spanning microservices, agents, and edge devices—the need for reliable, structured, and extensible messaging protocols becomes critical. While established protocols like HTTP, gRPC, and AMQP serve broad purposes, they often come with heavy abstractions or require tightly coupled infrastructure. In contrast, lightweight messaging layers designed for clarity and control are gaining traction in scenarios where simplicity and agility are key.

Enter the Model Context Protocol (MCP). MCP is a pragmatic and minimal protocol for orchestrating communication between agents, services, and systems. Rather than attempting to solve every aspect of distributed messaging, MCP focuses on a clear, purpose-driven format for expressing intent, routing control, and payload delivery. It is particularly useful in AI agent frameworks, command dispatch systems, and environments where messages must be structured, traceable, and independent of heavy transport dependencies.

This article serves as a foundational guide to MCP. We’ll begin by breaking down what MCP is, where it fits in the broader protocol ecosystem, and the fundamental components that define its structure. You’ll learn how MCP messages are formed, transmitted, and interpreted, and we’ll look at a basic MCP message and follow a request from a user’s prompt to an external tool and back. Whether you’re building a distributed AI system, a lightweight orchestrator, or exploring better ways to design command-driven interactions, this introduction will provide the tools to get started with MCP confidently.

What is MCP?

Model Context Protocol (MCP) is a lightweight, structured protocol designed to facilitate the exchange of control messages between systems, services, or agents. Unlike traditional protocols that focus heavily on data transport, MCP emphasizes intent-driven communication, where each message conveys a clear action, target, and contextual payload. Its goal is to decouple systems through a standardized control language that is easy to parse, route, and act upon, regardless of the underlying transport mechanism.

At its core, MCP defines a consistent schema for expressing commands, events, and responses in distributed environments. Each message typically includes a unique identifier, a message type (such as command, event, or response), a target address or logical endpoint, an action or intent, and an optional payload that carries the relevant data. This structure ensures that both senders and receivers understand the message’s purpose without requiring tightly bound logic or shared memory.

MCP is particularly effective in scenarios involving multi-agent systems, microservices orchestration, remote task execution, and low-latency coordination across distributed nodes. For example, in an AI agent framework, MCP can serve as the lingua franca for agents to issue tasks, request data, or signal state changes. Similarly, in a command routing system, MCP provides a neutral interface through which commands can be forwarded, queued, or transformed based on routing logic or authorization rules.

By adopting MCP, architects and developers gain a protocol that is simple enough to implement quickly, yet flexible enough to extend with custom headers, middleware logic, or even embedded policies for retries, tracing, or access control.

LLM Isolation and the NxM Problem

What is the NxM Problem?

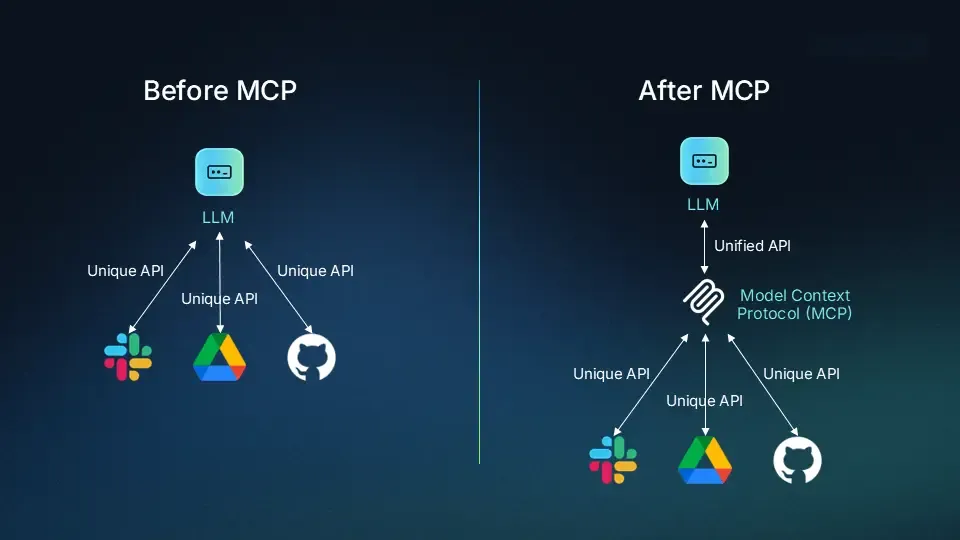

The NxM Problem is a term used to describe the combinatorial explosion of integration complexity that occurs when multiple AI models (N) need to interact with multiple tools, APIs, or services (M).

In a world where each language model (such as GPT-4, Claude, Gemini, or Mistral) has its own unique way of invoking functions or accessing external tools, integrating each model with each tool creates a multiplicative burden on developers. You don’t just have to build one integration; you have to build and maintain N × M integrations.

For example:

- If you have 5 different LLMs (N) and 10 tools (M), you may face up to 50 separate integrations to support all possible combinations.

- Each integration may require a custom schema, handler logic, and error handling code.

This leads to:

- Duplicated effort across teams

- Inconsistent behavior across tools and models

- High maintenance overhead as tools and models change

The NxM Problem illustrates why standardization—like what MCP offers—is critical. By introducing a common protocol, you can shift from N×M individual connections to N+M unified connections, where any model can talk to any tool using the same message format.
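The arithmetic behind that shift is easy to check. Below is a minimal Python sketch that reuses the 5-model, 10-tool example above, assuming each model (or the host application driving it) needs exactly one MCP client adapter and each tool exactly one MCP server. The function names are hypothetical.

```python
def integrations_without_protocol(models: int, tools: int) -> int:
    """Every model needs its own integration with every tool: N x M."""
    return models * tools


def integrations_with_protocol(models: int, tools: int) -> int:
    """Each model gets one MCP client adapter and each tool one MCP server: N + M."""
    return models + tools


if __name__ == "__main__":
    n, m = 5, 10
    print(f"Point-to-point: {integrations_without_protocol(n, m)}")  # Point-to-point: 50
    print(f"Via MCP:        {integrations_with_protocol(n, m)}")     # Via MCP:        15
```

Scaling to 20 models and 30 tools, the point-to-point count grows to 600 while the protocol count reaches only 50.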

LLM Isolation

Large Language Models (LLMs) are incredibly powerful, but they typically operate in a vacuum—disconnected from the real-time data, tools, and systems that drive decision-making in modern applications. This isolation creates friction not only for casual users seeking contextual answers but also for developers and enterprises trying to build meaningful, scalable integrations.

For end users, this often results in what we might call the “copy-and-paste tango”—manually collecting information from disparate sources, feeding it into an LLM’s interface, and then applying the results in another system. While some LLMs have made strides toward real-world integration—like Anthropic’s Sonnet 3.x with “Computer Use” or GPT-4 with web browsing—these solutions remain limited. They often lack native support for structured tools, data sources, or APIs, forcing users to work around the model’s limitations rather than through them.

For developers and enterprise architects, the problem is even more pronounced: the NxM problem. Here, N represents the growing number of LLMs, and M represents the expanding set of tools, APIs, and services that businesses rely on. Each LLM provider tends to offer its own syntax and methods for integrating tools. Multiply that across several models and several systems, and you’re quickly facing an unmanageable web of one-off connections.

This NxM complexity manifests in several key ways:

- Redundant development effort: Teams must repeatedly solve the same integration challenges every time they add a new model or connect to a different service. Each LLM has its own quirks, requiring custom code, schemas, and logic.

- Excessive maintenance overhead: As models and tools evolve, previously working integrations can break, especially in the absence of a consistent protocol. Updating, debugging, and maintaining these connections becomes a never-ending task.

- Fragmented behavior: Without a common standard, different tools may behave inconsistently across platforms, leading to unpredictable user experiences and increased support burden.

How MCP Helps: Standardizing the Interface Between LLMs and Tools

MCP doesn’t aim to eliminate existing integration methods; instead, it builds upon them—especially function calling, which is the emerging standard for enabling LLMs to invoke tools. MCP formalizes and standardizes how function calls are defined, discovered, and executed, creating a reliable, interoperable contract between models and external capabilities.

Relationship Between Function Calling and MCP

Function calling allows LLMs to invoke tools or operations by generating structured outputs—typically JSON objects—that describe which function to call and with what parameters. While function calling has proven useful, it’s also tightly coupled to specific model APIs. This limits reusability and adds friction for developers supporting multiple models.

With traditional function calling, developers must:

- Define model-specific schemas for each function, including its parameters and expected output.

- Implement handler logic for those functions and bind it to the model’s runtime environment.

- Reimplement or duplicate logic for each model (e.g., GPT, Claude, Gemini), even if the underlying functionality is the same.

MCP standardizes and simplifies this process by:

- Providing a universal schema for defining tools and their capabilities, independent of any specific LLM.

- Supporting dynamic discovery and execution of tools at runtime, allowing agents or systems to introspect what’s available and act accordingly.

- Offering a consistent, model-agnostic message format that makes tools and actions portable across AI apps without reimplementation.

In essence, MCP acts as a control layer that abstracts tool execution away from the model itself. This makes it dramatically easier to scale integrations, reuse capabilities, and future-proof your architecture as both LLMs and tools continue to evolve.
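To make the contrast concrete, here is a minimal Python sketch of defining a tool once, in the shape MCP servers use when they list tools (a `name`, a `description`, and a JSON Schema `inputSchema`), and letting the host translate it into each provider’s function-calling format. The `get_weather` tool and both adapter functions are hypothetical. The target shapes follow the OpenAI Chat Completions `tools` parameter and the Anthropic Messages API `tools` parameter as documented at the time of writing, so check current provider documentation before relying on them.

```python
from typing import Any

# One tool definition, written once, in the shape an MCP server returns from tools/list.
GET_WEATHER_TOOL: dict[str, Any] = {
    "name": "get_weather",  # hypothetical tool used for illustration
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}


def to_openai_tool(tool: dict[str, Any]) -> dict[str, Any]:
    """Wrap an MCP-style tool in OpenAI's function-calling envelope (hypothetical helper)."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic_tool(tool: dict[str, Any]) -> dict[str, Any]:
    """Rename inputSchema to input_schema for Anthropic's Messages API (hypothetical helper)."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }
```

The schema itself is never duplicated. Only the thin per-provider envelope differs, and that translation lives in the host rather than in every tool.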

Fundamental Concepts

To effectively leverage MCP (Model Context Protocol), it’s important to understand the building blocks that define how messages are structured, categorized, and interpreted. MCP was designed for clarity, extensibility, and minimal coupling, making it ideal for systems that need predictable message handling and tool interoperability. This section explores the key fundamentals that form the foundation of MCP.

Message Structure: Headers, Body, and Metadata

An MCP message is composed of three primary components: headers, body, and metadata.

- Headers carry essential routing and control information. This includes fields like `id` (a unique identifier), `type` (the nature of the message), `target` (the intended recipient or system endpoint), `source`, and optionally `reply_to` for asynchronous response handling.

- Body represents the actionable payload of the message. For commands, this is typically the set of parameters required to invoke a function or perform an operation. For responses, it includes results or error details.

- Metadata is optional but powerful. It may include contextual elements such as timestamps, correlation IDs for tracing, priority levels, or versioning tags. This allows for rich observability and enhanced middleware handling (e.g., logging, retries, authentication).

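A basic example of such a message might look like this. (On the wire, MCP carries its messages as JSON-RPC 2.0, as described in the transport section below; this envelope illustrates the conceptual fields rather than the exact wire format.)

```json
{
  "id": "e8f13bfc-2357-4be2-8f14-d9b0029f95b3",
  "type": "command",
  "target": "agent://task-executor",
  "action": "run_task",
  "payload": { "taskId": "daily_backup" },
  "timestamp": "2025-06-21T12:30:00Z"
}
```

This structure is readable by both humans and machines, and it gives routers and executors a consistent set of fields to act on across diverse systems.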
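A minimal Python sketch for producing messages in the shape of the example above, assuming its field names (`id`, `type`, `target`, `action`, `payload`, `timestamp`). `make_message` is a hypothetical helper. It generates a random UUID and an ISO 8601 UTC timestamp so every message is uniquely identifiable and consistently time-stamped.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Optional

VALID_TYPES = {"command", "event", "response"}


def make_message(msg_type: str, target: str, action: str,
                 payload: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a message dict with a fresh UUID and a UTC timestamp (hypothetical helper)."""
    if msg_type not in VALID_TYPES:
        raise ValueError(f"unknown message type: {msg_type!r}")
    return {
        "id": str(uuid.uuid4()),
        "type": msg_type,
        "target": target,
        "action": action,
        "payload": payload or {},
        # Seconds precision with a trailing 'Z', matching the example's format.
        "timestamp": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
    }


msg = make_message("command", "agent://task-executor", "run_task", {"taskId": "daily_backup"})
print(json.dumps(msg, indent=2))
```

Generating identifiers and timestamps in one place keeps their format consistent across every sender.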

Types of Messages: Command, Event, Response

MCP supports a small set of message types, each serving a distinct purpose in system coordination:

- Command: Directives sent to an agent or service to perform a specific task. Commands are typically actionable and expect either an acknowledgment or a response.

- Event: Notifications or broadcasts that describe something that has happened, often without requiring a response. Events are used for logging, system-wide triggers, or pub/sub-style updates.

- Response: Replies to commands or queries, carrying the outcome, status, or data resulting from execution. These can be synchronous or asynchronous, and often contain error handling or success confirmation.

By clearly distinguishing between message types, MCP ensures that systems can handle incoming messages in a structured, deterministic way.
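One way to get that deterministic handling is to route on the `type` field before looking at anything else. Below is a minimal Python sketch, assuming messages shaped like the JSON example earlier in this section. The handler functions and their return strings are hypothetical placeholders for real work.

```python
from typing import Any, Callable

Message = dict[str, Any]


def handle_command(msg: Message) -> str:
    # Commands are actionable: run the requested action (placeholder here).
    return f"executing {msg['action']}"


def handle_event(msg: Message) -> str:
    # Events describe something that already happened; no reply is expected.
    return f"recorded event {msg['action']}"


def handle_response(msg: Message) -> str:
    # Responses carry the outcome of an earlier command.
    return f"received response {msg['id']}"


HANDLERS: dict[str, Callable[[Message], str]] = {
    "command": handle_command,
    "event": handle_event,
    "response": handle_response,
}


def dispatch(msg: Message) -> str:
    """Route a message by its type; reject unknown types instead of guessing."""
    handler = HANDLERS.get(msg.get("type", ""))
    if handler is None:
        raise ValueError(f"unsupported message type: {msg.get('type')!r}")
    return handler(msg)
```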

Role of MCP in Orchestrating Agent or System Behavior

MCP’s purpose is to coordinate behavior across agents or subsystems without enforcing tight coupling. In agent-based environments, messages are the lingua franca—tools by which agents express intent, share outcomes, and request action from others.

For example:

- A task planner agent might send a `command` to a task runner agent to execute a script.

- Upon completion, the runner sends a `response` with the results.

- Simultaneously, the system might emit an `event` to indicate job completion, triggering a downstream logging or analytics function.

This pattern enables highly flexible and modular architectures where responsibility is distributed, yet behavior remains synchronized through structured messaging.

Stateless vs. Stateful Messaging in MCP

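Individual MCP messages are designed to be self-contained: each one carries the identifiers and parameters needed to interpret and execute it. This makes messages easy to route, inspect, log, and retry without depending on shared memory or hidden session state. The connection between a client and a server does have a lifecycle of its own, starting with the handshake described in How MCP Works, but the messages exchanged over it still spell out what they ask for.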

However, MCP does not prohibit stateful behavior. By using message identifiers, correlation IDs, or custom headers, developers can implement patterns like:

- Request/response pairs using `reply_to` headers

- Conversations or transactional workflows via correlation tokens

- Session-based interactions where state is externally managed but referenced through message fields

This dual support for statelessness and externally managed state makes MCP well-suited for both ephemeral workloads and long-running processes.
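The request/response pattern above comes down to bookkeeping: remember each outgoing command by its identifier, and when a response arrives, match it back using the identifier it carries. Below is a minimal Python sketch using asyncio futures. The `correlation_id` field name and the `PendingRequests` class are hypothetical, and an in-memory dict stands in for whatever external store a real system would use. JSON-RPC, which MCP uses on the wire, does the same matching with its `id` member.

```python
import asyncio
import uuid
from typing import Any


class PendingRequests:
    """Tracks in-flight commands and resolves them when matching responses arrive."""

    def __init__(self) -> None:
        self._pending: dict[str, asyncio.Future] = {}

    def register(self) -> tuple[str, asyncio.Future]:
        # Create a fresh id for the outgoing command and a future for its result.
        request_id = str(uuid.uuid4())
        future = asyncio.get_running_loop().create_future()
        self._pending[request_id] = future
        return request_id, future

    def resolve(self, response: dict[str, Any]) -> bool:
        # Match the response to its command; unknown ids are ignored (e.g. late replies).
        future = self._pending.pop(response.get("correlation_id", ""), None)
        if future is None or future.done():
            return False
        future.set_result(response.get("payload"))
        return True


async def demo() -> Any:
    pending = PendingRequests()
    request_id, future = pending.register()
    # Simulate a remote agent replying a moment later.
    asyncio.get_running_loop().call_later(
        0.01, pending.resolve, {"correlation_id": request_id, "payload": {"status": "ok"}}
    )
    return await asyncio.wait_for(future, timeout=1.0)


print(asyncio.run(demo()))  # {'status': 'ok'}
```

The timeout matters as much as the matching: without it, a lost response would leave the caller waiting forever.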

Core Components and Architecture of MCP

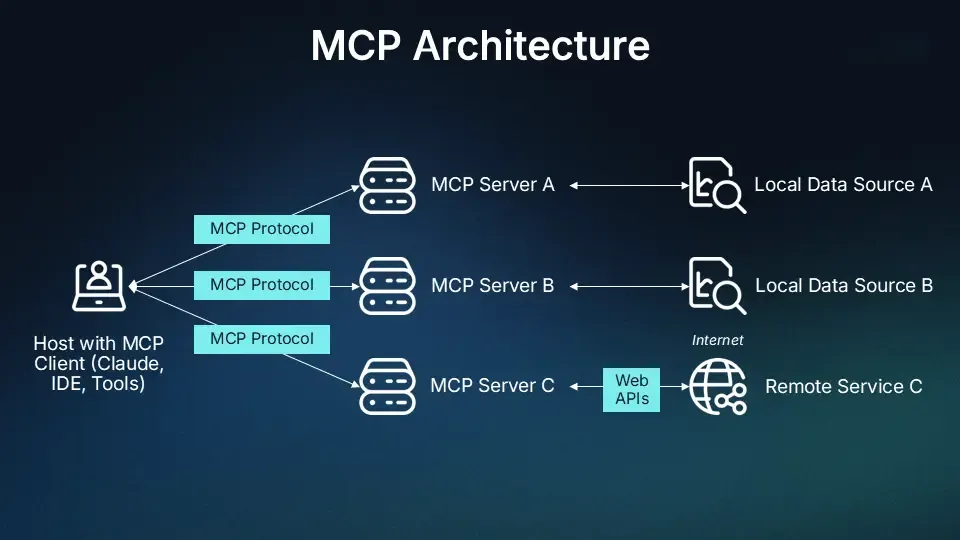

The Model Context Protocol (MCP) is designed to connect Large Language Models (LLMs) with external tools, services, and systems through a consistent and structured interface. At the heart of this architecture are four primary components: the host application, the MCP client, the MCP server, and the transport layer. Together, they create a dynamic environment where AI applications can perform meaningful, real-world tasks beyond simple conversation.

Host Application

The host application is the interface through which users interact with an LLM. This can be a desktop app like Claude Desktop, an AI-enhanced development environment such as Cursor, or a browser-based LLM chat interface. The host is responsible for initiating connections and relaying user interactions to the MCP layer.

MCP Client

The MCP client resides within the host application and acts as a bridge between the LLM and an MCP server. It handles message formatting, transport communication, and capability registration. The specification pairs each client with exactly one server, so a host connected to several servers runs one client per connection. The MCP client translates the model’s intent into structured MCP requests, sends them to its server, and returns responses to the host application for display or further processing.

For example, in Claude Desktop, MCP clients are embedded within the application, silently managing a connection to each configured local or remote MCP server without requiring user intervention.

MCP Server

An MCP server provides context and capabilities to AI applications. It exposes specific tools or integrations—such as access to a GitHub repository, PostgreSQL database, file system, or internal API—by registering functions that the LLM can invoke. Each MCP server typically serves a focused role, offering domain-specific functions or access to a particular external resource.

You might run multiple MCP servers in parallel, each representing a unique service or integration layer. This modular approach ensures flexibility and scalability without overwhelming the host application with tightly coupled dependencies.

Transport Layer

The transport layer defines how communication flows between the MCP client and the MCP server. MCP defines two primary transport methods:

- STDIO (Standard Input/Output): This is used for local communication. The host launches the MCP server as a subprocess and exchanges messages with it over the server’s standard input and output streams. It’s fast, simple, and ideal for integrations that do not require network communication.

- HTTP + SSE (Server-Sent Events): This approach is used for remote integrations. HTTP is used to send requests, while SSE enables the server to push real-time responses or streaming data back to the client. The 2025-03-26 revision of the specification replaces this transport with Streamable HTTP, which serves the same purpose through a single HTTP endpoint that can optionally stream responses over SSE.

Both transport types use JSON-RPC 2.0 as the underlying message format. JSON-RPC provides a standardized protocol for issuing method calls, handling parameters, and processing responses or notifications. It ensures consistency across different implementations and simplifies debugging and interoperability.
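For reference, JSON-RPC 2.0 distinguishes three kinds of message by which members are present: a request has a `method` and an `id`, a notification has a `method` but no `id`, and a response has an `id` plus exactly one of `result` or `error`. Below is a minimal Python sketch of that classification. `classify` is a hypothetical helper and `get_weather` a hypothetical tool; `tools/call` and `notifications/initialized` are method names MCP defines.

```python
from typing import Any


def classify(message: dict[str, Any]) -> str:
    """Classify a JSON-RPC 2.0 message as request, notification, or response."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests expect a reply (they carry an id); notifications do not.
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message) != ("error" in message):
        # A response carries exactly one of result or error.
        return "response"
    raise ValueError("malformed JSON-RPC 2.0 message")


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "San Francisco"}}}
note = {"jsonrpc": "2.0", "method": "notifications/initialized"}
reply = {"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "Sunny"}]}}

for msg in (call, note, reply):
    print(classify(msg))  # request, notification, response
```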

How MCP Works

When a user interacts with an AI-powered host application that supports MCP, a coordinated set of actions happens beneath the surface to enable real-time, intelligent interactions with tools and services. Here’s how the protocol enables smooth operation between components.

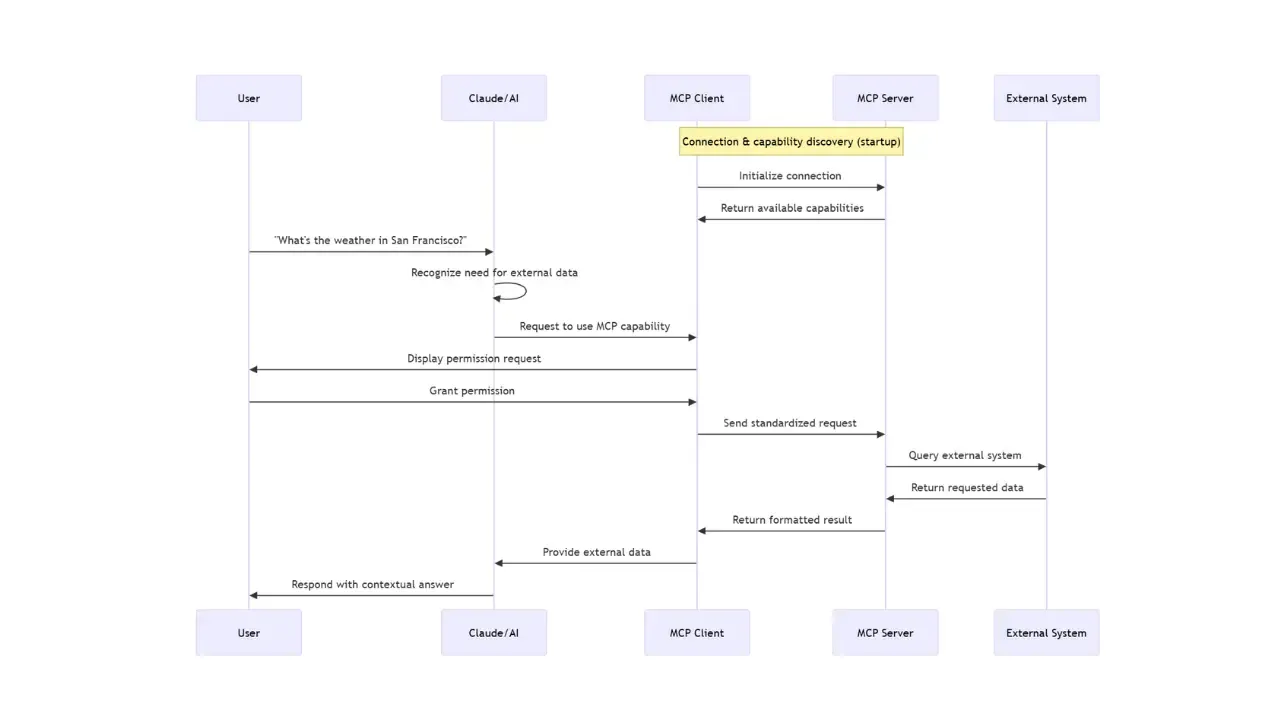

Protocol Handshake

The initial connection process is essential for establishing trust and capability between client and server:

- Initial Connection: Upon startup, the MCP client embedded in the host application (e.g., Claude Desktop) connects to each configured MCP server, local or remote. The client opens with an `initialize` request announcing its protocol version and capabilities, the server answers with its own, and the client confirms with an `initialized` notification.

- Capability Discovery: The client then asks each server, “What tools or functions do you offer?” (for tools, this is the `tools/list` request). In response, each server returns a list of capabilities, including metadata such as function names, descriptions, and input parameter schemas.

- Registration: The MCP client registers all discovered capabilities and makes them available to the LLM. These capabilities are then dynamically exposed during the user’s session, allowing the LLM to invoke real-world tools when relevant.

This handshake process ensures that the LLM is not only aware of the tools available in its environment but also able to use them intelligently, securely, and contextually.
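Over the STDIO transport, this handshake is a short exchange of JSON-RPC messages, one per line, on the server process’s standard input and output. The Python sketch below builds and frames the client’s side of that sequence. It assumes protocol version `2024-11-05` and a hypothetical client name, and it leaves out the actual subprocess plumbing and the server’s replies.

```python
import json
from typing import Any

PROTOCOL_VERSION = "2024-11-05"  # assumption: use the revision your server supports


def frame(message: dict[str, Any]) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line (STDIO framing)."""
    # json.dumps escapes newlines inside strings, so the only raw newline is the delimiter.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def handshake_messages() -> list[dict[str, Any]]:
    """The client's side of the MCP handshake, in the order it is sent."""
    return [
        # 1. Initial connection: announce protocol version, capabilities, and identity.
        {"jsonrpc": "2.0", "id": 1, "method": "initialize",
         "params": {"protocolVersion": PROTOCOL_VERSION,
                    "capabilities": {},
                    "clientInfo": {"name": "example-host", "version": "0.1.0"}}},
        # 2. After the server's initialize result arrives: confirm; no reply expected.
        {"jsonrpc": "2.0", "method": "notifications/initialized"},
        # 3. Capability discovery: ask which tools the server exposes.
        {"jsonrpc": "2.0", "id": 2, "method": "tools/list"},
    ]


stream = b"".join(frame(m) for m in handshake_messages())
print(stream.decode("utf-8"))
```

A server’s reply to `tools/list` carries a `tools` array whose entries have a `name`, `description`, and `inputSchema`; those entries are what the client registers and exposes to the model.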

From User Request to External Data: How MCP Enables Real-Time Intelligence

When an AI model is asked a question that requires current, external data—such as weather, stock prices, or internal enterprise metrics—it must go beyond its training data. The Model Context Protocol (MCP) enables this seamless connection between a language model and real-world information by orchestrating a structured, permissioned, and real-time data flow between user input and external systems.

Here’s a breakdown of what happens under the hood when a user asks a question like, “What’s the weather like in San Francisco today?”

Need Recognition

The host application receives the user’s request and passes it to the LLM. The model analyzes the prompt and determines that the question cannot be answered using static knowledge alone. It identifies the need for external, up-to-date information—in this case, a live weather report.

Tool or Resource Selection

Based on the capabilities registered during the MCP handshake, the model can see that an appropriate tool exists, such as a weather service integration exposed by an MCP server, and it requests a call to that tool. The MCP client identifies which server provides the capability and prepares to invoke it.
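Routing a chosen tool to the right server needs a lookup built during discovery. Below is a minimal Python sketch of a registry a host might assemble from each server’s `tools/list` result. The `ToolRegistry` class, the server names, and the tool names are all hypothetical.

```python
from typing import Any


class ToolRegistry:
    """Maps each discovered tool name to the server that exposes it."""

    def __init__(self) -> None:
        self._owner: dict[str, str] = {}
        self._schemas: dict[str, dict[str, Any]] = {}

    def register_server(self, server: str, tools: list[dict[str, Any]]) -> None:
        # 'tools' is the list a server returned from tools/list.
        for tool in tools:
            name = tool["name"]
            if name in self._owner and self._owner[name] != server:
                # Refuse silent shadowing; a real host might namespace names instead.
                raise ValueError(f"tool {name!r} already provided by {self._owner[name]!r}")
            self._owner[name] = server
            self._schemas[name] = tool

    def available_tools(self) -> list[dict[str, Any]]:
        # What the host exposes to the model.
        return list(self._schemas.values())

    def server_for(self, tool_name: str) -> str:
        try:
            return self._owner[tool_name]
        except KeyError:
            raise LookupError(f"no server provides {tool_name!r}") from None


registry = ToolRegistry()
registry.register_server("weather-server", [
    {"name": "get_forecast", "description": "Weather", "inputSchema": {"type": "object"}}])
registry.register_server("github-server", [
    {"name": "list_issues", "description": "Issues", "inputSchema": {"type": "object"}}])
print(registry.server_for("get_forecast"))  # weather-server
```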

Permission Request

To ensure transparency and control, the host application may prompt the user for permission. This prompt clarifies what tool or resource is being accessed, providing the user with a choice to proceed. This step can be customized or omitted based on system policies or trust configurations.
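The permission step can be modeled as a small policy check that runs before any request leaves the client. Below is a minimal Python sketch. The `PermissionPolicy` class, its pre-approved set, and the `ask_user` callback are hypothetical, since each host application decides how it prompts users and stores trust settings.

```python
from typing import Callable


class PermissionPolicy:
    """Decides whether a tool call may proceed, asking the user when required."""

    def __init__(self, always_allow: set[str], ask_user: Callable[[str, str], bool]) -> None:
        self.always_allow = always_allow  # tools the user or an admin has pre-approved
        self.ask_user = ask_user          # UI callback: (server, tool) -> approved?

    def check(self, server: str, tool: str) -> bool:
        if tool in self.always_allow:
            return True
        return self.ask_user(server, tool)


def fake_prompt(server: str, tool: str) -> bool:
    # Stand-in for a UI dialog; this one approves only read-style tools.
    print(f"Allow {server} to run {tool}?")
    return tool.startswith("get_")


policy = PermissionPolicy(always_allow={"get_forecast"}, ask_user=fake_prompt)
```

Keeping the decision in one function makes the trust configuration mentioned above a matter of changing the pre-approved set or the callback, not the call path.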

Information Exchange

With permission granted, the MCP client constructs a standardized request in JSON-RPC 2.0 format and sends it to the appropriate MCP server via the configured transport (STDIO or HTTP+SSE). This request includes any necessary parameters, such as the city name and date for the weather query.
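Below is a minimal Python sketch of that request, using the `tools/call` method MCP defines: the tool’s name goes in `params.name` and its arguments in `params.arguments`. The `get_forecast` tool and its `city` and `date` arguments are hypothetical; a real weather server defines its own names in its `tools/list` output.

```python
import itertools
import json
from typing import Any

_next_id = itertools.count(1)  # JSON-RPC ids must not repeat within a session; a counter suffices


def tool_call_request(tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request that asks an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


request = tool_call_request("get_forecast", {"city": "San Francisco", "date": "today"})
print(json.dumps(request))
```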

External Processing

The MCP server receives the request and executes the appropriate function—whether that means calling an API, querying a database, reading a file, or any other domain-specific task. The server then packages the results in a structured response message, ensuring compatibility and interpretability by the client.
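On the server side, executing the function and packaging the result might look like the minimal Python sketch below. It follows the result shape MCP uses for tool calls (a `content` list of typed items, plus an `isError` flag for tool-level failures) and reports an unknown tool as a JSON-RPC error with the standard `-32602` (Invalid params) code. The weather lookup itself is a hypothetical stub returning canned data rather than a real API call.

```python
from typing import Any, Callable


def get_forecast(city: str, date: str = "today") -> str:
    # Hypothetical stub: a real server would call a weather API here.
    canned = {"San Francisco": "68°F and sunny"}
    if city not in canned:
        raise KeyError(city)
    return f"{date.capitalize()} in {city}: {canned[city]}"


TOOLS: dict[str, Callable[..., str]] = {"get_forecast": get_forecast}


def handle_tools_call(request: dict[str, Any]) -> dict[str, Any]:
    """Execute a tools/call request and wrap the outcome as a JSON-RPC response."""
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # Protocol-level problem: report it as a JSON-RPC error object.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
    try:
        text, is_error = tool(**params.get("arguments", {})), False
    except Exception as exc:  # tool-level failure: tell the model inside the result
        text, is_error = f"Tool failed: {exc!r}", True
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}
```

Separating the two error paths lets the model see and react to a failed lookup, while malformed or unknown requests are surfaced to the client as protocol errors.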

Result Return

The structured response is transmitted back to the MCP client. Thanks to MCP’s consistent message format, the client can reliably extract the information and present it to the host application or model runtime.

Context Integration

The response data is integrated into the LLM’s active context window. This gives the model access to real-time data without retraining or fine-tuning. The model interprets the response, blends it with the user’s original prompt, and constructs a meaningful reply.
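How the result enters the model’s context is up to the host application. Below is a minimal Python sketch of one plausible approach: pull the text items out of the `content` list in a tool result and append them to the conversation as a tool message. The `role`/`content` message format and both helpers are hypothetical and not tied to any particular model API.

```python
from typing import Any


def extract_text(result: dict[str, Any]) -> str:
    """Join the text items of an MCP tool result; non-text items are skipped here."""
    parts = [item["text"] for item in result.get("content", []) if item.get("type") == "text"]
    return "\n".join(parts)


def add_tool_result(history: list[dict[str, str]], tool: str, result: dict[str, Any]) -> None:
    # Flag failures so the model can explain or retry instead of trusting bad data.
    prefix = "[tool error] " if result.get("isError") else ""
    history.append({"role": "tool", "name": tool, "content": prefix + extract_text(result)})


history = [{"role": "user", "content": "What's the weather like in San Francisco today?"}]
add_tool_result(history, "get_forecast",
                {"content": [{"type": "text", "text": "68°F and sunny"}], "isError": False})
```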

Response Generation

Finally, the model generates a human-readable answer—e.g., “It’s currently 68°F and sunny in San Francisco”—as if it had the knowledge all along. The user receives a seamless, accurate, and contextually aware reply.

This entire process, from intent detection to response delivery, typically happens in seconds. MCP enables LLMs to behave more intelligently and responsively without compromising modularity or requiring hardcoded logic. It gives AI applications the illusion of omniscience—while keeping the underlying architecture transparent, extensible, and grounded in real-time system capabilities.

Reference MCP Servers: Extending AI with Real-World Capabilities

A key strength of the Model Context Protocol (MCP) is its modular design, allowing developers to expose powerful capabilities to LLMs without deeply integrating those capabilities into the AI model or host application itself. This is achieved through MCP servers—standalone processes that act as bridges between AI applications and external tools, systems, or APIs.

Whether you’re building internal tools, connecting to public APIs, or integrating with system resources, MCP servers provide a plug-and-play interface for expanding what AI can do. Each server adheres to the MCP standard and exposes clearly defined functions that can be discovered, queried, and invoked in real time.

The MCP community maintains a growing set of reference servers designed to help developers get started quickly. These servers demonstrate practical use cases and offer templates for building your own.

Below are some of the key reference servers available:

Filesystem Server

- Description: Exposes the local file system to AI applications, allowing models to read, write, and list files within defined boundaries.

- Use Case: Useful for enabling document summarization, log file analysis, or AI-assisted file generation.

- Link: mcp-filesystem-server
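“Within defined boundaries” is the important part of that description. The sketch below is not taken from the reference server. It is a minimal Python illustration, assuming a single allowed root directory (`/tmp/mcp-demo-root` is a hypothetical path), of the containment check such a server needs: resolve the requested path, symlinks included, and refuse anything that lands outside the root.

```python
from pathlib import Path


def resolve_inside(root: str, requested: str) -> Path:
    """Resolve 'requested' relative to 'root' and reject paths that escape it."""
    base = Path(root).resolve()
    candidate = (base / requested).resolve()  # follows symlinks and collapses '..'
    if not candidate.is_relative_to(base):  # Path.is_relative_to needs Python 3.9+
        raise PermissionError(f"{requested!r} is outside {base}")
    return candidate


print(resolve_inside("/tmp/mcp-demo-root", "notes/today.txt"))
```

Resolving before comparing is what defeats `..` segments and symlinks pointing outside the root; a plain string-prefix check on the raw path would not.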

GitHub Server

- Description: Connects to the GitHub API, enabling actions like listing repositories, fetching README files, or querying issues.

- Use Case: Great for AI developer assistants, code review bots, or documentation helpers.

- Link: mcp-github-server

PostgreSQL Server

- Description: Provides access to PostgreSQL databases, allowing read-only or controlled query execution.

- Use Case: Ideal for querying analytics databases, generating reports, or building AI dashboards.

- Link: mcp-postgresql-server

These reference servers are open source, modular, and ready to use or customize. You can browse all available servers, review their capabilities, and even contribute your own by visiting the official MCP GitHub repository:

👉 Explore the full list of MCP servers: https://github.com/modelcontextprotocol/servers

Common Pitfalls and Best Practices

As with any protocol-driven architecture, implementing MCP (Model Context Protocol) effectively requires more than just sending and receiving messages. While MCP is designed to be lightweight and straightforward, developers can still encounter pitfalls that compromise stability, flexibility, or maintainability. The good news is that with a few best practices in mind, these issues can be avoided early in the design process.

Ensuring Message Integrity

Pitfall: Messages that are malformed, missing required fields, or corrupted in transit can cause silent failures or unpredictable behavior—especially in distributed environments where retries or error handling may mask the root issue.

Best Practice: Always validate MCP messages against a consistent schema before processing. This includes checking for required fields such as id, type, target, action, and payload. Use JSON schema validation libraries in your chosen language to enforce structure and detect missing or invalid values early.

Additionally, ensure that timestamps, correlation IDs, and UUIDs are properly generated and consistently formatted. This improves not only message traceability but also resilience in systems with high message throughput or complex routing paths.
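Below is a minimal Python sketch of that validation without third-party dependencies, assuming the required fields listed above (`id`, `type`, `target`, `action`, `payload`) and the three message types described in Fundamental Concepts. A JSON Schema library such as `jsonschema` would express the same rules declaratively; this hand-rolled `validate_message` helper is only an illustration.

```python
import uuid
from datetime import datetime
from typing import Any

REQUIRED = {"id": str, "type": str, "target": str, "action": str, "payload": dict}
VALID_TYPES = {"command", "event", "response"}


def validate_message(msg: Any) -> list[str]:
    """Return a list of problems; an empty list means the message passed."""
    if not isinstance(msg, dict):
        return ["message must be a JSON object"]
    errors = []
    for field, expected in REQUIRED.items():
        if field not in msg:
            errors.append(f"missing field: {field}")
        elif not isinstance(msg[field], expected):
            errors.append(f"{field} must be {expected.__name__}")
    if isinstance(msg.get("type"), str) and msg["type"] not in VALID_TYPES:
        errors.append(f"unknown type: {msg['type']}")
    if isinstance(msg.get("id"), str):
        try:
            uuid.UUID(msg["id"])
        except ValueError:
            errors.append("id is not a valid UUID")
    if "timestamp" in msg:
        try:
            # fromisoformat() accepts a trailing 'Z' only from Python 3.11, so normalize it.
            datetime.fromisoformat(str(msg["timestamp"]).replace("Z", "+00:00"))
        except ValueError:
            errors.append("timestamp is not ISO 8601")
    return errors
```

Returning every problem at once, rather than failing on the first, makes rejected messages much easier to debug from logs.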

Avoiding Tight Coupling with Payload Logic

Pitfall: When the message payload becomes tightly coupled to the business logic or the model’s internal assumptions, flexibility and reuse are compromised. This often results in brittle systems that are difficult to refactor or extend.

Best Practice: Keep the payload structure decoupled from domain-specific implementations. Think of MCP messages as contracts, not function calls. Design payloads to be declarative and self-descriptive, rather than encoded with rigid logic or assumptions about the receiving system.

This design approach makes it easier to:

- Swap out or upgrade MCP servers without changing client logic

- Route the same message to different handlers for testing or fallback

- Support multiple versions or message schemas through backward compatibility

Focus on defining clear interfaces between systems, with loosely coupled message contracts that can evolve independently.

Logging and Tracing for Observability

Pitfall: In distributed AI systems powered by MCP, it’s easy to lose visibility into what messages were sent, which servers responded, or where failures occurred. Without proper observability, debugging becomes guesswork, and system trust deteriorates.

Best Practice: Implement comprehensive logging and distributed tracing for all MCP message flows. This includes:

- Logging message IDs, timestamps, and action types on send and receive

- Capturing server responses, including errors and durations

- Tagging logs with correlation IDs to trace entire request lifecycles

Use structured logging formats (e.g., JSON logs) so the data is easy to analyze in observability tools such as the ELK stack or Grafana; OpenTelemetry provides a vendor-neutral way to collect and export those logs and traces.

If your MCP client or server interacts with multiple downstream systems, tracing spans should be propagated through headers or metadata. This allows you to visualize entire message journeys across components—from user request to external action and back—helping pinpoint latency, errors, or bottlenecks.
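Below is a minimal Python sketch of structured, correlation-tagged logging around a single call, using only the standard `logging` and `json` modules. The `logged_call` wrapper, its `sink` parameter, and the record field names are hypothetical; in production you would more likely emit spans through an OpenTelemetry SDK and let it propagate trace context.

```python
import json
import logging
import time
import uuid
from typing import Any, Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("mcp.client")  # hypothetical logger name


def logged_call(server: str, method: str, send: Callable[[], Any],
                correlation_id: Optional[str] = None,
                sink: Callable[[str], None] = log.info) -> Any:
    """Run send() and emit one JSON log line with its outcome and duration."""
    record: dict[str, Any] = {
        # Reuse the caller's id when tracing a multi-step request; otherwise mint one.
        "correlation_id": correlation_id or str(uuid.uuid4()),
        "server": server,
        "method": method,
    }
    start = time.perf_counter()
    try:
        result = send()
        record["status"] = "ok"
        return result
    except Exception as exc:
        record["status"] = "error"
        record["error"] = repr(exc)
        raise  # logging must not swallow the failure
    finally:
        record["duration_ms"] = round((time.perf_counter() - start) * 1000, 2)
        sink(json.dumps(record))


logged_call("weather-server", "tools/call", lambda: {"isError": False}, correlation_id="req-42")
```

Passing the same `correlation_id` into every call made on behalf of one user request is what lets a log search reconstruct the whole journey.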

When (and When Not) to Use MCP

Model Context Protocol (MCP) provides a powerful and flexible way to connect LLMs to external tools, systems, and services. Its standardized message structure, lightweight transport options, and decoupled architecture make it ideal for a wide range of use cases. However, MCP isn’t a silver bullet. Like any tool, it excels in specific contexts and may not be the best fit for others. Understanding when to use MCP—and when not to—will help you make smarter architectural decisions.

Ideal Scenarios for MCP

MCP shines in environments that require tool orchestration, extensibility, and low friction integration between AI applications and external systems. Some of the best-fit scenarios include:

- Multi-Agent Systems: When multiple AI agents or services need to communicate through a shared protocol to coordinate actions, MCP provides a standardized, composable interface. Agents can publish capabilities, send commands, and return results with minimal coupling.

- LLM Tooling and Contextual Plugins: If you want to give an LLM access to real-world capabilities like querying databases, fetching documents, or invoking business workflows, MCP provides a clean interface for exposing these tools in a way the model can understand and reason about.

- Decoupled Command Dispatching: MCP works well for systems where commands need to be routed to independent services that evolve on their own schedules. Its message-based nature makes it easy to introduce retries, fallbacks, or parallel processing without tightly coupling logic.

- Secure, Auditable Extensions for AI Apps: Since MCP uses structured messaging and supports transport-agnostic communication, it’s easy to monitor, trace, and audit interactions. This makes it valuable for enterprise environments with compliance or observability requirements.

- Prototype and Plug-and-Play Integration: MCP servers can be quickly developed and deployed, making it ideal for prototyping integrations or experimenting with new capabilities without needing to recompile models or redesign core applications.

When MCP May Not Be the Right Fit

While MCP offers tremendous flexibility, it’s not always the best option—especially when other protocols or architectures provide better performance or simpler semantics for the task at hand.

- High-Performance Low-Latency Services: If your use case demands ultra-low latency, real-time streaming, or binary efficiency (e.g., video/audio pipelines, IoT control systems), gRPC or WebSockets may be better suited due to their lower overhead and binary protocol formats.

- Traditional API Consumption: When an AI application just needs to call a well-documented, RESTful web service, wrapping that service in an MCP server might introduce unnecessary complexity. In these cases, direct HTTP integration (perhaps via the model’s built-in function calling) may be simpler and more efficient.

- Event-Driven Streaming Architectures: For systems that are fundamentally driven by publish/subscribe semantics (real-time dashboards, event logs, or Kafka-based messaging), AMQP, NATS, or Kafka provide more native support for high-throughput event streaming.

- Tightly Coupled Internal Services: When you’re working within a monolithic application or a tightly coupled microservice architecture, MCP’s abstraction layer may be overkill. Direct method calls, service contracts, or shared memory models can be more appropriate in such tightly controlled environments.

Conclusion

As AI systems become more powerful and embedded in everyday workflows, the ability to bridge the gap between language models and real-world tools is no longer optional—it’s essential. The Model Context Protocol (MCP) offers a lightweight, standardized approach to enable that bridge, empowering developers to expose functions, services, and capabilities to LLMs through a consistent, extensible message format.

In this article, I introduced MCP’s core concepts, including its message structure, architectural components, ideal use cases, and operational flow. We explored how MCP empowers agents and AI applications to move beyond static text generation into actionable, integrated intelligence—all while preserving modularity, transparency, and developer control.

If you’re just starting out, I encourage you to experiment with one of the reference MCP servers, explore the protocol’s JSON-RPC format, and see how easily you can connect a simple function to a language model through MCP. Whether you’re building a custom integration for internal tools or prototyping the next generation of AI assistants, MCP offers a solid foundation to build upon.

This is just the beginning. In future posts, I’ll go deeper into building your own MCP server, adding custom capabilities, and implementing advanced features like streaming responses, access control, and multi-agent orchestration.