Toward a Unified API Lifecycle Model: A Foundation for Enterprise Maturity

In today’s increasingly interconnected and digitally enabled business landscape, APIs serve as the connective tissue of modern enterprise systems. They expose capabilities, orchestrate services, enable interoperability, and are fundamental to innovation. However, as organizations expand their API footprint—spanning internal applications, partner integrations, and public platforms—the complexity of managing these interfaces at scale becomes evident.

A shared API lifecycle model and vocabulary offers a structured foundation to mitigate this complexity. By establishing common terms, phases, and expectations across the organization, it becomes easier to govern APIs effectively, streamline collaboration across teams, and promote best practices from design through to deprecation. Developers gain clarity, product owners make more informed decisions, and governance bodies can enforce standards without stifling innovation.

Importantly, this article does not aim to prescribe a rigid or overly formalized methodology. The API lifecycle model presented here is intentionally adaptable—intended to catalyze alignment, spark discussion, and ultimately guide the creation of a lifecycle framework tailored to the unique needs of your enterprise. Every organization operates within different regulatory environments, technological stacks, maturity levels, and product cultures. Therefore, the real value of this model lies in its ability to be contextualized and adopted pragmatically, rather than as a one-size-fits-all template.

This article explores the key dimensions that contribute to holistic API lifecycle management, including:

- API Lifecycle Phases: The progressive journey from initial discovery and design to testing, release, and retirement.

- API Lifecycle States: Defined states that reflect an API’s maturity, stability, and availability to consumers.

- API Version Management: Best practices for maintaining compatibility, evolution, and communication across releases.

- Design Tooling and Documentation Conventions: Foundational patterns for consistency, usability, and maintainability.

- Automation and CI/CD: How to integrate lifecycle governance directly into delivery pipelines to achieve velocity and control.

Together, these components form a comprehensive blueprint for scalable, secure, and high-impact API governance. Whether you’re modernizing legacy systems or launching greenfield digital platforms, a thoughtful and transparent API lifecycle model empowers teams to move faster, make better decisions, and build APIs that last.

API Lifecycle Phases

Understanding and managing the full lifecycle of an API is essential for building sustainable, secure, and consumer-centric API ecosystems. While it’s common to describe API lifecycles strictly in terms of production readiness (e.g., development, staging, and deployment), this approach is often too narrow for modern enterprise environments.

In reality, an effective API lifecycle model must bridge the gap between engineering processes and business objectives, aligning technical readiness with user-facing value. This includes consideration for iterative feedback loops, stakeholder collaboration, compliance checkpoints, and the operational maturity of the environments where APIs live (development, integration, testing, and production) until they are retired.

In the enterprise context, APIs don’t just transition from development to production—they evolve in a multi-dimensional space that includes:

- Business validation (Is this API solving the right problem?),

- Design maturity (Does this API conform to organizational patterns, semantics, and interoperability requirements?),

- Technical readiness (Is the API performant, secure, and scalable?),

- Consumer readiness (Are developers equipped with documentation, SDKs, and credentials to successfully consume the API?),

- Governance compliance (Does the API meet security, privacy, and regulatory standards?).

This complexity necessitates a structured view of the API lifecycle that incorporates both phases and gates, ensuring smooth progression from ideation to retirement while promoting visibility and quality assurance across teams.

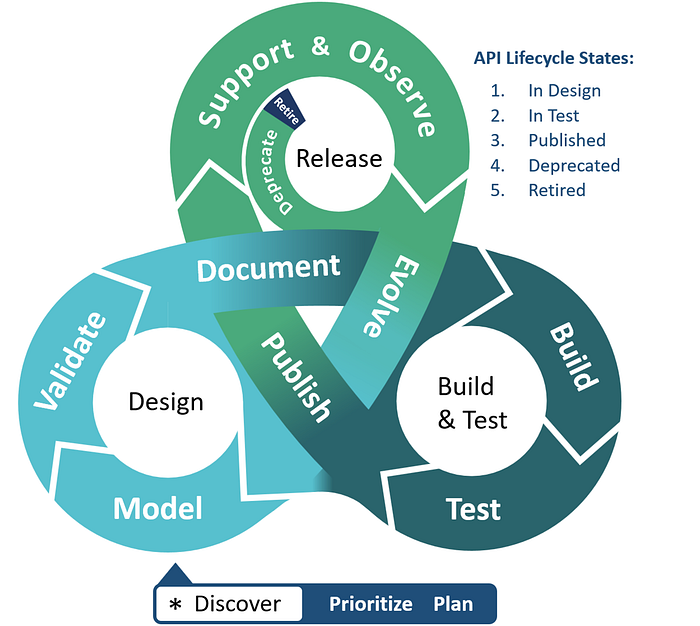

Above: A diagrammatic description of the phases of an API lifecycle.

The diagram illustrates the end-to-end journey of an API through key phases:

- Discovery – Capturing internal and external demand for new API capabilities and framing them into a prioritized design backlog.

- Design – Collaboratively modeling the API using domain-driven practices and generating OpenAPI specifications that serve as the source of truth.

- Build & Test – Creating API implementations based on the design spec, integrating continuous testing and security validation.

- Release – Publishing the API to catalogs, monitoring usage, handling client support, and managing feedback-driven iterations.

- Evolution – Incorporating learnings, usage trends, and consumer input to refine or extend the API.

- Deprecation & Retirement – Phasing out old versions in a controlled and consumer-aware manner.

Each phase is deeply interwoven with the others, and the lifecycle is rarely linear—APIs frequently loop back to earlier stages for rework or enhancement. This agile, feedback-driven approach is what distinguishes modern API lifecycle thinking from static software delivery models.

Starting Point

Before any lines of OpenAPI are written or endpoints deployed, the API lifecycle begins with a crucial but often overlooked phase: the starting point. This encompasses the preparatory work that ensures the API effort is not just technically sound, but strategically aligned with business needs and consumer expectations.

Discovery

Discovery is technically outside the formal lifecycle, but functionally it is the true origin of API development. This is where the demand is identified—either internally (via product teams, engineering roadmaps, or operational gaps) or externally (via customer feedback, partner integrations, or market analysis).

Discovery includes several key activities:

- Conducting capability mapping to determine where APIs can expose valuable business services.

- Stakeholder interviews to align on high-level use cases, pain points, and user journeys.

- Backlog creation and prioritization, where features and endpoints are scoped and sequenced based on business impact, feasibility, and urgency.

- Performing a feasibility and readiness check, asking: Do we have the necessary domain models? Do we understand the data contracts? Is the owning team ready?

The goal of discovery is not to design the API, but to establish a clear, validated problem statement and a roadmap that sets the stage for design with confidence.

Evolution

At the opposite end of the lifecycle, evolution refers to the continuous refinement and learning that occurs after an API is released—often as a result of real-world use. Once an API is live, it becomes a dynamic interface, constantly interacting with clients, generating telemetry, surfacing edge cases, and revealing unmet needs.

Evolution is driven by:

- Usage analytics – Is the API being used as expected? Which endpoints are most/least popular?

- Consumer feedback – Are developers struggling with onboarding, misunderstandings, or bugs?

- Change impact analysis – Are current clients resilient to schema extensions, or is the model brittle?

- Governance reviews – Are policy or security audits triggering the need for remediation or structural change?

The outcome of this observation phase may be non-breaking improvements, such as adding optional fields or extending functionality. However, in some cases, it may require breaking changes, prompting a version bump or new iteration of the design phase.
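The distinction between non-breaking and breaking changes can often be checked mechanically. The following is a minimal sketch, assuming payloads are described by flat JSON Schema objects; the function name `classify_change` and its deliberately conservative rules are illustrative assumptions, not a complete compatibility checker.

```python
def classify_change(old_schema: dict, new_schema: dict) -> str:
    """Classify a payload-schema change as 'breaking' or 'non-breaking'.

    Conservative rules assumed for this sketch:
    - removing a property or changing its type is breaking
    - adding a newly required property is breaking
    - adding an optional property is non-breaking
    """
    old_props = old_schema.get("properties", {})
    new_props = new_schema.get("properties", {})

    for name, definition in old_props.items():
        if name not in new_props:
            return "breaking"  # consumers may still read or send this field
        if definition.get("type") != new_props[name].get("type"):
            return "breaking"  # a type change invalidates existing parsers

    old_required = set(old_schema.get("required", []))
    new_required = set(new_schema.get("required", []))
    if new_required - old_required:
        return "breaking"  # existing payloads would now fail validation

    return "non-breaking"
```

A result of "breaking" would correspond to the version bump and renewed design iteration described above, while "non-breaking" changes can ship within the current major version.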

Evolution is not optional—it is the lifeblood of healthy APIs. APIs that don’t evolve stagnate, lose relevance, and ultimately degrade the developer experience.

Taken together, discovery and evolution are the outer bounds of the API lifecycle. They remind us that APIs are not just build-and-release assets, but living products with strategic inputs and post-launch responsibilities. A thoughtful starting point sets the tone for success, and a commitment to evolution ensures APIs remain valuable over time.

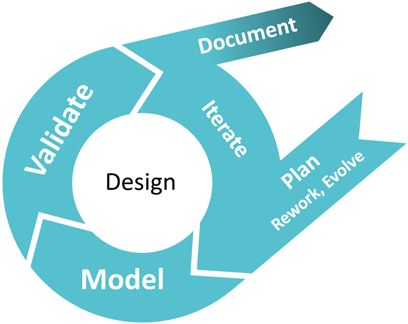

Design

The design phase is the intellectual heart of the API lifecycle. It is where ideas and business needs are transformed into concrete, testable, and reusable specifications. Far from being a purely technical process, API design is a collaborative and iterative act that draws from domain expertise, user feedback, security policies, and system constraints. A well-structured design phase reduces downstream rework, improves the developer experience, and aligns business capabilities with digital products.

1. Model

The first step is domain modeling, which establishes the foundation for everything that follows. This is not merely about drawing boxes and arrows—it is about capturing business concepts and workflows in a structured form that both humans and machines can interpret.

Domain modeling should be:

- Collaborative, involving product managers, domain experts, developers, and architects.

- Version-controlled, allowing changes to be tracked, discussed, and reverted as needed.

- Tool-supported, using platforms that encourage visual modeling, JSON schema generation, and alignment with standards like REST and OpenAPI.

This model represents the real-world entities, behaviors, and constraints of the business domain. It informs not only the API structure, but also the data models, validation logic, and overall interaction patterns. Critically, it ensures that the API speaks the same language as its consumers.

📖 More: Where Do Business Resource APIs Come From?

2. Validate

Once the model is in place, it must be validated through feedback. This is where the domain model becomes a Ubiquitous Language—a shared vocabulary that bridges the gap between technical and non-technical stakeholders.

Validation involves:

- Walkthroughs with domain experts, to confirm the model reflects business reality.

- Review sessions with architects, to ensure structural integrity and alignment with platform standards.

- Stakeholder sign-off, capturing agreements on field names, data types, constraints, and relationships.

The goal here is not perfection, but alignment and clarity. Early validation shortens feedback loops, prevents rework during implementation, and helps teams identify risky or ambiguous design choices before they become technical debt.

🧠 Reference: Ubiquitous Language – Martin Fowler

3. Document

Once the model is validated, it is time to generate specifications and artifacts. This includes:

- JSON Schema, which defines the structure, constraints, and validation rules for payloads.

- OpenAPI Specification (OAS), which formalizes the operations, paths, parameters, request/response structures, and security schemes of the API.

These specifications serve as the source of truth for both implementation and consumer onboarding. At this point, decisions should also be made about:

- Naming conventions

- Error codes and messages

- Versioning schemes

- Security requirements

Documentation must align with internal API standards, comply with governance policies, and be sufficiently descriptive to enable self-service use by client developers. This is the phase where abstract models become real interfaces—design contracts that development teams must fulfill and consumers will depend on.
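To make the two artifacts concrete, here is a small sketch of a JSON Schema embedded in an OpenAPI 3.0 document, built as a Python dictionary and serialized to JSON. The API title, the `Applicant` fields, the scope name, and the token URL are all hypothetical values chosen for illustration.

```python
import json

# JSON Schema for a hypothetical Applicant resource.
applicant_schema = {
    "type": "object",
    "required": ["id", "fullName"],
    "properties": {
        "id": {"type": "string"},
        "fullName": {"type": "string", "maxLength": 200},
    },
}

# OpenAPI document: paths, operations, responses, and security schemes.
openapi_document = {
    "openapi": "3.0.3",
    "info": {"title": "Membership API", "version": "1.0.0"},
    "paths": {
        "/membership/v1/applicants": {
            "get": {
                "operationId": "listApplicants",
                "security": [{"oauth2": ["applicants:read"]}],
                "responses": {
                    "200": {
                        "description": "A list of applicants",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "array",
                                    "items": {"$ref": "#/components/schemas/Applicant"},
                                }
                            }
                        },
                    }
                },
            }
        }
    },
    "components": {
        "schemas": {"Applicant": applicant_schema},
        "securitySchemes": {
            "oauth2": {
                "type": "oauth2",
                "flows": {
                    "clientCredentials": {
                        "tokenUrl": "https://auth.example.com/token",  # placeholder
                        "scopes": {"applicants:read": "Read applicants"},
                    }
                },
            }
        },
    },
}

# Serialized form that would be committed to version control.
spec_json = json.dumps(openapi_document, indent=2)
```

Keeping the schema under `components.schemas` and referencing it with `$ref` lets the same payload definition serve validation, documentation, and code generation.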

The design phase is not just about aesthetics or structure—it is about establishing a shared understanding of what the API does, why it matters, and how it should behave. Skipping or rushing this step leads to inconsistent APIs, increased rework, and diminished consumer trust. When done well, it becomes the cornerstone of scalability, reuse, and developer delight.

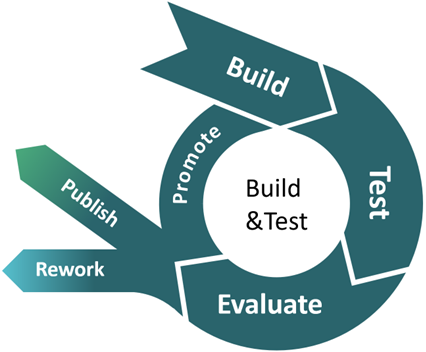

Build & Test

The Build & Test phase transitions the API from a conceptual contract into a working, testable, and secure implementation. This stage is where design specifications meet executable code, and operational integrity is validated against real-world conditions. It is one of the most critical phases in the API lifecycle, where rigorous quality assurance practices are employed to ensure that the API is functional, secure, performant, and adheres to enterprise standards.

1. Build

The starting point of the build phase is the OpenAPI specification and JSON Schema generated during the design process. These artifacts serve as the authoritative contracts that guide implementation.

The build activities include:

- Scaffolding the API implementation from the OpenAPI spec using tools that generate boilerplate code and client/server stubs.

- Applying security patterns and design standards, such as authentication mechanisms (OAuth2, JWT), input validation, and rate limiting.

- Writing business logic that fulfills the defined operations, while respecting bounded context responsibilities.

- Deploying to early-stage environments such as sandbox, developer, or integration test environments. These environments simulate production but often rely on stubbed or mock backends.

Early builds are often integrated with mock services that allow teams to validate endpoint behavior, parameter expectations, and response payloads before backend systems are available. This encourages parallel development, reducing overall cycle time.
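As an illustration of spec-driven mocking, the sketch below answers requests using only the examples embedded in an OpenAPI document. The function `mock_response` and the sample specification are assumptions made for this example; real mock servers offer far more (parameter matching, schema-based generation, and so on).

```python
def mock_response(spec: dict, method: str, path: str) -> tuple[int, object]:
    """Return (status, body) for a request, using only examples in the spec."""
    operation = spec.get("paths", {}).get(path, {}).get(method.lower())
    if operation is None:
        return 404, {"error": "no such operation in the specification"}

    # Pick the first documented success response, lowest status code first.
    for status in sorted(operation.get("responses", {})):
        if status.isdigit() and status.startswith("2"):
            content = operation["responses"][status].get("content", {})
            example = content.get("application/json", {}).get("example")
            return int(status), example
    return 501, {"error": "no success response documented"}


# Hypothetical specification fragment with an inline example payload.
sample_spec = {
    "paths": {
        "/membership/v1/applicants": {
            "get": {
                "responses": {
                    "200": {
                        "description": "A list of applicants",
                        "content": {
                            "application/json": {
                                "example": [{"id": "a-1", "fullName": "Ada Example"}]
                            }
                        },
                    }
                }
            }
        }
    }
}
```

Because the mock is derived from the contract rather than from the implementation, client teams can start integrating as soon as the specification is reviewed.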

Throughout the build, engineering teams should treat the specification as a non-negotiable contract. Any deviation must result in a specification update followed by stakeholder review to maintain integrity across teams.

2. Test

Testing transforms a functional build into a production-grade service. This phase involves a combination of automated and manual tests that verify not only correctness but also compliance, security, and performance.

Key aspects of this phase include:

- Continuous Integration (CI) pipelines that automatically run unit, integration, and regression tests upon each commit.

- Policy-as-Code validation, where CI tools inspect the OpenAPI spec for violations of corporate policies—such as naming conventions, security headers, and data classifications.

- Security validation, including OAuth2 flow correctness, token expiration behavior, input sanitization, and adherence to OWASP Top 10 guidelines.

- Performance testing, which assesses throughput, latency, and error handling under load using synthetic traffic.

- Linting and specification checks, to ensure the API contract adheres to OpenAPI standards and enterprise governance.

Many organizations employ test doubles such as mocks, fakes, and simulators to replicate upstream or downstream dependencies. This decouples testing from system dependencies and accelerates test execution.
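The policy-as-code validation listed above can be sketched as a small function that a CI job runs against the specification. The two rules used here (kebab-case path segments and a mandatory major version segment) are hypothetical corporate policies chosen for illustration; teams typically express such rules in a dedicated linter rather than hand-written code.

```python
import re

# Hypothetical rules: lowercase kebab-case segments or {pathParameters},
# plus a v{MAJOR} segment somewhere in every path.
SEGMENT = re.compile(r"^(?:[a-z0-9]+(?:-[a-z0-9]+)*|\{[A-Za-z0-9_]+\})$")
VERSION = re.compile(r"^v[1-9][0-9]*$")


def check_paths(spec: dict) -> list[str]:
    """Return human-readable policy violations for the paths in a spec."""
    violations = []
    for path in spec.get("paths", {}):
        segments = [s for s in path.split("/") if s]
        if not any(VERSION.match(s) for s in segments):
            violations.append(f"{path}: missing major version segment")
        for segment in segments:
            if not SEGMENT.match(segment):
                violations.append(f"{path}: segment '{segment}' is not kebab-case")
    return violations
```

A pipeline step could fail the build whenever the returned list is non-empty, which keeps policy feedback in the same place as test feedback.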

3. Evaluate

Once tests are complete, the API enters an evaluation checkpoint—an inflection point to determine whether it is ready to progress toward production or requires rework.

Evaluation criteria may include:

- Test coverage thresholds and pass/fail status from automated test suites.

- Client developer feedback, gathered via internal alpha testing or partner previews.

- Analytics and usage telemetry, collected from test environments to assess endpoint performance and call patterns.

- Security audit reports, particularly if the API handles regulated or sensitive data.

Based on the evaluation, one of two actions is taken:

- Promote – The API is deemed ready to progress and is published to a higher environment (e.g., UAT, staging, or production).

- Rework – Defects, feedback, or architectural issues require the team to return to design or build activities before retesting.

In mature organizations, this entire evaluation and promotion process may be fully automated via CI/CD pipelines. Approvals may be based on passing test thresholds, governance validations, and code review signoffs. This level of automation supports continuous delivery while maintaining compliance and quality.
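An automated promotion gate of this kind might look like the following sketch. The `EvaluationResult` fields and the 80% coverage threshold are assumptions for illustration; each organization would substitute its own criteria.

```python
from dataclasses import dataclass


@dataclass
class EvaluationResult:
    tests_passed: bool
    coverage: float              # fraction between 0.0 and 1.0
    open_security_findings: int
    policy_violations: int


def decide(result: EvaluationResult, min_coverage: float = 0.8) -> tuple[str, list[str]]:
    """Return ('promote' or 'rework', reasons for rework)."""
    reasons = []
    if not result.tests_passed:
        reasons.append("automated tests failed")
    if result.coverage < min_coverage:
        reasons.append(f"coverage {result.coverage:.0%} below {min_coverage:.0%}")
    if result.open_security_findings:
        reasons.append("unresolved security findings")
    if result.policy_violations:
        reasons.append("governance policy violations")
    return ("rework" if reasons else "promote", reasons)
```

Returning the reasons alongside the decision gives the team a concrete starting point when the outcome is rework.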

📖 More: Managing API Lifecycles with Model Driven, Integrated DevOps

The Build & Test phase is not just a technical milestone—it’s the bridge between concept and customer. It ensures the API is more than just syntactically correct; it must be reliable, secure, performant, and usable. The practices established here determine whether the API earns the trust of internal developers, partners, and external consumers in production environments.

Release

The Release phase is where an API transitions from internal development and testing into full-scale operation. It is the stage that marks an API’s entrance into the hands of real consumers—whether internal teams, external partners, or public developers. This phase ensures operational readiness, manages communication and change, and sets the groundwork for long-term support.

1. Publish

The first step in this phase is the official publication of the API. After passing rigorous testing, security assessments, and governance gates, the API is deployed to the production environment and made available to consumers.

Publishing may involve:

- Making the API available in one or more catalogs: internal, partner-only, or public.

- Registering the API with API Gateway and management platforms, including metadata such as version, description, and access policies.

- Ensuring discoverability by listing it in a developer portal with full documentation, usage examples, SDKs, and onboarding instructions.

- Applying rate limiting, security enforcement, and throttling policies at the edge.

If the newly published API supersedes a prior version, that version is now deprecated (see below). This allows consumers to begin migrating to the newer interface while maintaining continuity.

Publishing is more than deployment—it is about making an API consumable. A production-grade API must be understandable, accessible, and trustworthy.

2. Support & Observe

Once in production, the API enters an operational phase where monitoring, support, and feedback collection are vital.

Key practices include:

- Real-time observability and telemetry: latency, error rates, usage patterns, request volumes.

- Integration with incident management and alerting systems, including automated rollback triggers for critical failures.

- Ongoing support via API portals, with channels for feedback, support tickets, issue reporting, and community engagement.

- Periodic performance and security audits to ensure the API remains performant and secure under load.

This is also the phase where client engagement becomes essential. Usage analytics and direct feedback inform future enhancements or bug fixes. Observability data can highlight endpoints that are underperforming or unused—helping guide product decisions and retirement planning.
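As a rough sketch of how raw request telemetry becomes the signals listed above, the code below aggregates per-endpoint request counts, error rates, and mean latency, and evaluates a simple rollback trigger. The record shape, the 5% error-rate threshold, and the function names are hypothetical; production systems would rely on their observability platform for this.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    endpoint: str
    status: int
    latency_ms: float


def summarize(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    """Aggregate request count, 5xx error rate, and mean latency per endpoint."""
    grouped: dict[str, list[RequestRecord]] = {}
    for record in records:
        grouped.setdefault(record.endpoint, []).append(record)

    summary = {}
    for endpoint, items in grouped.items():
        errors = sum(1 for r in items if r.status >= 500)
        summary[endpoint] = {
            "requests": len(items),
            "error_rate": errors / len(items),
            "mean_latency_ms": sum(r.latency_ms for r in items) / len(items),
        }
    return summary


def should_roll_back(summary: dict[str, dict[str, float]], max_error_rate: float = 0.05) -> bool:
    """Hypothetical trigger: any endpoint exceeding the error-rate threshold."""
    return any(s["error_rate"] > max_error_rate for s in summary.values())
```

The same summary also reveals endpoints with very low request counts, which feeds the retirement planning mentioned above.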

3. Deprecate

Over time, APIs evolve. When a new major version is published with breaking changes, the older version enters the deprecated state.

Deprecation is not an abrupt shutdown—it is a planned transition period that ensures consumers have time to migrate. During this period:

- The deprecated version remains available and supported, but no longer receives feature updates.

- New consumers are blocked from subscribing to the deprecated API.

- A retirement date is clearly communicated in documentation and via platform notifications.

- Consumers are encouraged to cut over to the new version through migration guides, SDK updates, and testing environments.

Properly managing deprecation helps maintain API integrity without consumer disruption. It allows teams to iterate and modernize their APIs while honoring past commitments.
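One way to communicate the retirement date in-band, in addition to documentation and notifications, is the `Sunset` response header defined in RFC 8594. The sketch below builds that header for a deprecated version; attaching it at the gateway, the helper name `deprecation_headers`, and the migration guide URL are assumptions made for illustration.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def deprecation_headers(retirement: datetime, migration_guide_url: str) -> dict[str, str]:
    """Build response headers announcing the retirement date of a deprecated version.

    `retirement` should be timezone-aware; it is converted to UTC.
    """
    return {
        # RFC 8594 Sunset header: an HTTP-date after which the resource may go away.
        "Sunset": format_datetime(retirement.astimezone(timezone.utc), usegmt=True),
        # Link relation "sunset" (also RFC 8594) pointing at further information.
        "Link": f'<{migration_guide_url}>; rel="sunset"',
    }
```

Clients and monitoring tools can then detect the upcoming retirement programmatically instead of relying on someone reading a changelog.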

4. Retire

After all consumers have migrated—or when the announced retirement date is reached—the API is formally retired.

Retirement actions include:

- Disabling or unpublishing the API from the catalog.

- Shutting down endpoints and removing configuration from gateways and routers.

- Updating documentation and changelogs to indicate removal.

- Returning a clear error code (e.g., HTTP 410 Gone) for any calls to the retired API.

- Archiving logs and analytics related to the version, for historical traceability and compliance.

While deprecated APIs are still active, retired APIs are completely inaccessible. The system must ensure there are no accidental consumers still relying on the retired interface.
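The retirement behavior can be sketched as a routing rule that answers every call to a retired version with HTTP 410 Gone. The `RETIRED` registry, the `/membership/v2/` successor, and the response body shape are hypothetical; in practice this rule would usually live in gateway configuration rather than application code.

```python
# Hypothetical registry of retired path prefixes and their successors.
RETIRED = {"/membership/v1/": "/membership/v2/"}


def route(path: str) -> tuple[int, dict]:
    """Return (status, body); retired versions always answer 410 Gone."""
    for prefix, successor in RETIRED.items():
        if path.startswith(prefix):
            return 410, {
                "error": "This API version has been retired.",
                "successor": successor + path[len(prefix):],
            }
    # Placeholder for normal routing to a backend service.
    return 200, {"message": "forwarded to backend"}
```

Logging each 410 response with the caller's identity is a simple way to find the accidental consumers mentioned above.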

In some cases, API retirement is temporary and reversible (e.g., for internal interfaces), but in regulated environments or customer-facing platforms, it may be final and subject to audit. Therefore, change control and communication must be airtight.

The Release phase is where the rubber meets the road. It requires discipline in operations, empathy for consumers, and precision in execution. A well-governed release process balances innovation with stability—allowing APIs to evolve confidently without jeopardizing the user experience or system integrity.

Security is Embedded — Not a Step

It is critical to understand that securing APIs is not a discrete or isolated activity—it is an integrated and continuous discipline embedded throughout the entire API lifecycle. Unlike traditional approaches where security is often treated as a final gate before production, modern API security is proactive, layered, and woven into every phase of design, development, deployment, and retirement.

A secure API lifecycle begins at inception and is reinforced at every decision point—from modeling the domain and defining schemas to deployment and observability. Security is not something to be “checked off” at the end; it is an ongoing commitment to protecting data, enforcing policies, and preserving trust.

Here’s how security manifests across the lifecycle:

Modeling Phase: Classify Data and Identify Controls

During domain modeling, it’s essential to understand what kind of data the API will expose or process. This includes identifying Personally Identifiable Information (PII), payment information, regulated content, or intellectual property.

Once data types are classified, the appropriate regulatory controls (GDPR, HIPAA, PCI DSS, etc.) must be identified. These decisions shape everything from access policies to encryption requirements.
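One possible way to record classifications is to annotate the schema itself so that later pipeline stages can act on them. The sketch below assumes a hypothetical extension keyword, `x-data-classification`, and collects every annotated field; neither the keyword nor the labels are part of JSON Schema or any standard.

```python
def classified_fields(schema: dict, prefix: str = "") -> dict[str, str]:
    """Map dotted field paths to their declared classification.

    'x-data-classification' is a hypothetical extension keyword used
    only for this sketch.
    """
    found = {}
    for name, definition in schema.get("properties", {}).items():
        path = f"{prefix}{name}"
        label = definition.get("x-data-classification")
        if label:
            found[path] = label
        if definition.get("type") == "object":
            # Recurse into nested objects so deep fields are not missed.
            found.update(classified_fields(definition, prefix=f"{path}."))
    return found
```

The resulting map can drive decisions such as which fields require encryption or masking in logs.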

Development Phase: Apply Secure Design Patterns

Secure development is guided by well-established API design standards and security patterns. These include:

- Proper authentication and authorization (OAuth 2.0, OpenID Connect)

- Input validation and sanitization to prevent injection attacks

- Least privilege access principles

- Rate limiting and quota enforcement

The goal is to build security into the design, not bolt it on later.

Source Control Integration: Specification Linting and Policy Enforcement

As OpenAPI specifications are created or updated, linting tools are applied automatically through CI workflows to catch violations of security policies or inconsistent schema definitions.

Tools like Spectral or custom rule sets ensure that required security definitions (e.g., securitySchemes) are present and conform to standards.

This guards against weak configurations or accidental omissions before they ever reach test environments.
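To show the kind of rule such a linter enforces, here is a plain-Python stand-in (not Spectral itself) that checks an OpenAPI document for declared security schemes and for a security requirement on every operation. The function name `lint_security` and the message wording are assumptions for this sketch.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def lint_security(spec: dict) -> list[str]:
    """Report operations without security and references to undeclared schemes."""
    schemes = spec.get("components", {}).get("securitySchemes", {})
    problems = []
    if not schemes:
        problems.append("components.securitySchemes is missing or empty")

    default_security = spec.get("security")  # document-level default, if any
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as 'parameters'
            security = operation.get("security", default_security)
            if not security:
                problems.append(f"{method.upper()} {path}: no security requirement")
                continue
            for requirement in security:
                for name in requirement:
                    if name not in schemes:
                        problems.append(f"{method.upper()} {path}: unknown scheme '{name}'")
    return problems
```

Running a check like this on every commit surfaces a missing `securitySchemes` entry as a failed build rather than as a finding in a later audit.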

Gateway-Level Enforcement: Runtime Security Policies

Once APIs are deployed to test and production environments, they pass through managed API gateways that enforce runtime security controls.

This includes:

- Token validation

- IP filtering and geo-blocking

- Payload inspection

- DDoS mitigation

- SSL/TLS enforcement

Gateways act as the first line of defense—ensuring that only well-formed, authenticated, and authorized traffic reaches backend services.

Testing Phase: Automated and Targeted Security Validation

Security testing goes beyond functional validation. Modern pipelines integrate checks for OWASP API Security risks, including:

- Broken object level authorization (BOLA)

- Excessive data exposure

- Lack of rate limiting

- Broken function level authorization

In addition to automated scanners, targeted penetration testing against high-risk endpoints ensures that emerging threats are considered.

Integration with runtime security analytics platforms also provides feedback loops into test coverage and blind spots.

Ultimately, API security must be treated as a first-class citizen in the lifecycle. The complexity and exposure surface of modern APIs—especially in open, partner, or public-facing systems—demands a continuous security mindset.

By embedding security at each stage, from design to retirement, organizations not only reduce risk, but also increase confidence—among developers, stakeholders, and external consumers alike.

More on embedded API security → API Bites – Tactics to Secure Sensitive APIs

API Lifecycle States

Understanding API lifecycle states is crucial for both providers and consumers, as these states signal the stability, availability, and long-term support expectations of an API. Lifecycle states communicate the readiness of an API to be consumed, whether it is evolving, stable, or at the end of its usable life. By clearly defining and communicating these states, organizations foster transparency, predictability, and trust across internal and external consumers.

Each state represents a specific stage in the evolution of an API and helps guide consumer behavior accordingly.

In Design

APIs in the In Design state are actively being modeled and discussed. They are usually not implemented yet or only exist as a prototype. This phase is exploratory, collaborative, and flexible:

- Feedback is actively encouraged from stakeholders, product teams, and early adopters.

- APIs may change significantly and without notice, including structure, semantics, or behavior.

- Consumer expectations should be limited to understanding direction and intent, not building stable integrations.

This is often when OpenAPI specifications are first drafted, and collaborative design platforms are leveraged to align developers, architects, and business domain experts.

In Test

APIs that have moved to the In Test state are implemented and deployed to lower environments such as development, sandbox, or staging.

- These APIs are considered functionally complete but may still undergo changes.

- Testing focuses on functionality, performance, security, and integration stability.

- Breaking changes are still possible, especially based on consumer feedback.

- Select consumers may use the API in pre-production use cases to validate integration paths or prototypes.

This phase is ideal for partner early access, UX feedback, and preparing documentation for broader release.

Published

The Published state signifies that the API has passed all required reviews, testing, and stakeholder approvals and is now officially released to production.

- It is considered stable, actively maintained, and safe for use in production environments.

- All consumers—internal teams, partners, or public developers—can confidently build and deploy services that rely on it.

- Any changes will be made in a backward-compatible manner, unless a new major version is introduced.

Published APIs are typically listed in an API portal or catalog, complete with documentation, SDKs, and onboarding workflows.

Deprecated

An API enters the Deprecated state when it is being phased out in favor of a newer version or better alternative.

- Existing consumers can still use it, but it is no longer being enhanced.

- New subscriptions are typically blocked to prevent additional adoption.

- Consumers are notified of deprecation and advised to migrate to newer APIs.

- A retirement schedule is communicated to ensure time for transition.

Deprecation is a critical signal that client teams should plan ahead, as continued reliance on a deprecated API may introduce long-term risk.

Retired

The Retired state means the API has been fully removed from service:

- All traffic to this API results in an error response from the gateway (e.g., HTTP 410 Gone).

- The API is no longer documented, available in portals, or supported by the platform team.

- Any system still attempting to call this API is likely misconfigured or out-of-date.

APIs in this state should be removed from developer portals, SDKs, and monitoring dashboards, and all internal systems should be validated to ensure they’ve migrated off.

Why Lifecycle States Matter

Lifecycle states serve as a contract between providers and consumers. They help prevent miscommunication, manage expectations, and reduce operational risk:

- Developers know which APIs are ready for production.

- Product managers can plan migrations and roadmap dependencies.

- Security teams can focus controls on actively used APIs.

- Governance teams can ensure deprecation and retirement are executed with discipline.

Communicating lifecycle states clearly—and enforcing them programmatically through API management platforms—is essential for scaling modern API ecosystems.
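Programmatic enforcement can be as simple as a small state machine. The sketch below encodes the five states described above; the allowed transitions (including the rework loop from In Test back to In Design, and Retired as a terminal state) and the subscription rule are assumptions inferred from the descriptions, not a definitive policy.

```python
from enum import Enum


class State(Enum):
    IN_DESIGN = "In Design"
    IN_TEST = "In Test"
    PUBLISHED = "Published"
    DEPRECATED = "Deprecated"
    RETIRED = "Retired"


# Assumed transitions; adjust to local governance rules.
TRANSITIONS = {
    State.IN_DESIGN: {State.IN_TEST},
    State.IN_TEST: {State.IN_DESIGN, State.PUBLISHED},
    State.PUBLISHED: {State.DEPRECATED},
    State.DEPRECATED: {State.RETIRED},
    State.RETIRED: set(),
}


def transition(current: State, target: State) -> State:
    """Return the target state, or raise if the move is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


def accepts_new_subscriptions(state: State) -> bool:
    """Only Published APIs are open to new consumers in this sketch."""
    return state is State.PUBLISHED
```

An API management platform could call `transition` before any state change and `accepts_new_subscriptions` before approving a subscription request, so the rules are applied consistently rather than by convention.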

Managing API Versions

Managing API versions is a cornerstone of effective API lifecycle governance, especially in enterprise environments where consistency, stability, and backward compatibility are paramount. As business needs evolve, so do the capabilities and designs of APIs that power critical digital services. However, these improvements must be made in a way that minimizes disruption to existing consumers and provides a clear path forward.

While the ideal state is often to maintain a single version of an API to reduce complexity, the reality of continuous delivery and iterative improvement necessitates version management. Even the most carefully designed APIs will eventually encounter the need for breaking changes—whether to introduce a more expressive data model, enhance performance, correct a design flaw, or realign with shifting business requirements.

To manage this, API versioning strategies provide the mechanism to:

- Introduce new capabilities or behavior without breaking existing integrations.

- Offer parallel support for multiple consumer groups, each at different stages of adoption.

- Allow for structured deprecation and retirement, giving clients time to adapt.

- Signal semantic meaning and change scope through consistent versioning rules.

Supporting multiple API versions can add operational and maintenance overhead, but this is often a necessary tradeoff to avoid a ‘big-bang’ migration scenario where all consumers must update at once. Instead, organizations should strive for a versioning strategy that is predictable, easy to understand, and enforced via automation where possible.

An effective versioning model acts as a bridge between innovation and stability, allowing teams to evolve APIs at their own pace while maintaining strong backward compatibility guarantees. This ensures that API providers can keep innovating and iterating, while consumers maintain confidence in their integrations and system behavior.

In the sections that follow, we’ll explore recommended versioning mechanisms, semantic versioning conventions, and best practices for managing major version upgrades and deprecations in a seamless, consumer-friendly manner.

Versioning APIs

Versioning is a critical practice in API lifecycle management, serving as a contract between API providers and consumers. It enables developers to introduce changes and improvements without breaking existing integrations. Among the most common strategies for API versioning—particularly in RESTful APIs—is the use of versioned URLs, which encode the version number directly into the URI path. This approach is simple, intuitive, and consumer-friendly, making it a preferred default in many specification-first, enterprise-scale API platforms.

A versioned URL typically includes only the MAJOR version number in the form v{MAJOR}, which communicates the scope of compatibility. For example:

/membership/v1/applicants

This structure provides immediate visibility to consumers regarding the API version they are interacting with, ensuring clarity when documenting or debugging integration points. Importantly, the version number is placed before the resource name (never after it or within a resource identifier), promoting consistency in design and routing logic.

Why Limit the Versioning to the MAJOR Number?

Following Semantic Versioning (SemVer) principles, minor and patch changes should be backwards-compatible—meaning that client applications should not break when these changes are introduced. As such, exposing them in the URI creates unnecessary complexity and increases the likelihood of tight coupling between client logic and API infrastructure. By restricting visible version changes in the URL to only breaking (MAJOR) updates, the API design preserves compatibility while enabling seamless client upgrades.
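As a minimal sketch of the MAJOR-only rule, the following Python snippet parses a versioned path and rejects anything that carries a minor or patch number. The path layout /{api-name}/v{MAJOR}/{resource} and the function name parse_versioned_path are assumptions made for illustration; a real gateway would apply its own routing rules.

```python
import re

# Hypothetical path layout: /{api-name}/v{MAJOR}/{resource...}
VERSIONED_PATH = re.compile(
    r"^/(?P<api>[a-z][a-z0-9-]*)/v(?P<major>\d+)/(?P<resource>.+)$"
)


def parse_versioned_path(path: str) -> tuple[str, int, str]:
    """Split a path into (api name, MAJOR version, resource path)."""
    match = VERSIONED_PATH.match(path)
    if match is None:
        # A path such as /membership/v1.2/applicants ends up here, because
        # only the MAJOR number is allowed in the URI.
        raise ValueError(f"not a MAJOR-versioned path: {path!r}")
    return match["api"], int(match["major"]), match["resource"]


print(parse_versioned_path("/membership/v1/applicants"))
# ('membership', 1, 'applicants')
```

Because the minor and patch numbers never appear in the path, a client that targets v1 keeps working across every 1.x.y release.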

Advantages of Versioned URLs

- Simplicity for clients: Easy to identify and switch between major versions.

- Server-side routing clarity: Gateways and routing middleware can quickly determine the correct backend handler.

- Static contracts: Changes to minor functionality can happen behind the scenes without client disruption.

Limitations and Trade-offs

While versioned URLs are straightforward, they are not the only option. Header-based versioning or content negotiation can provide greater flexibility but often at the cost of discoverability and tooling support. Thus, the versioned URL remains the most practical and least controversial approach in environments where clarity, tooling compatibility, and consumer trust are paramount.

Ultimately, the decision to use versioned URLs should be based on organizational context, consumer expectations, and the API management ecosystem in place. But for most specification-first strategies, embedding the major version in the URI continues to be a safe, effective, and widely adopted practice.

Versioning options and more advanced considerations are discussed in greater depth in the article: Versioning Managed APIs.

Semantic Versioning

Semantic Versioning (or SemVer) provides a clear and predictable framework for managing API versions, especially in large-scale enterprise ecosystems where stability, clarity, and backward compatibility are crucial. Following the Semantic Versioning specification, each API release is identified by a three-part version number structured as:

{MAJOR}.{MINOR}.{PATCH}

This format conveys precise information about the nature of changes introduced with each release, enabling API consumers to make informed decisions about when and how to upgrade their integrations.

Why Semantic Versioning Matters

In managed API environments—particularly those built on domain-driven and specification-first principles—semantic versioning serves not only as a version label, but also as a communication mechanism. It reflects intentional governance, allowing teams to align on release practices, minimize integration risks, and promote agility through automation.

Semantic versioning also facilitates intelligent tooling. Ideally, your domain modeling platform or API management solution should support automated versioning control across:

- Domain models

- OpenAPI specifications

- Mock servers

- Documentation

- CI/CD pipelines

Such integrated versioning helps maintain traceability across artifacts, reduces manual errors, and streamlines change approval workflows.

Versioning Rules Explained

The decision to increment a specific component of the version string—MAJOR, MINOR, or PATCH—should follow these rules:

- MAJOR version is incremented when breaking changes are introduced. These are changes that will disrupt existing consumer integrations, such as removing fields, renaming operations, or altering response formats. A new MAJOR version implies a contract change and often requires consumer re-subscription and re-certification.

- MINOR version is used when new features are added in a backward-compatible way. This could include adding optional fields, new operations, or support for new media types. MINOR version upgrades should not disrupt existing consumers but may offer them enhanced capabilities.

- PATCH version is for backward-compatible bug fixes. These are changes that address defects without altering the API’s behavior or interface. PATCH-level changes are typically safe and require minimal consumer intervention.

Example

If an API evolves as follows:

- Initial release: 1.0.0

- Adds a new optional query parameter: 1.1.0

- Fixes a documentation typo: 1.1.1

- Removes an existing endpoint (breaking change): 2.0.0

By following this convention, both API providers and consumers share a common understanding of impact and can develop upgrade strategies accordingly.
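The increment rules can be sketched in a few lines of Python. The change labels "breaking", "feature", and "fix" and the function name bump are hypothetical, chosen only to mirror the MAJOR, MINOR, and PATCH rules described in this section.

```python
def bump(version: str, change: str) -> str:
    """Return the next semantic version for a given kind of change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "breaking":   # contract change: new MAJOR, reset the rest
        return f"{major + 1}.0.0"
    if change == "feature":    # backward-compatible addition: new MINOR
        return f"{major}.{minor + 1}.0"
    if change == "fix":        # backward-compatible bug fix: new PATCH
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


# Replay the example: optional parameter, typo fix, endpoint removal.
version = "1.0.0"
history = [version]
for change in ("feature", "fix", "breaking"):
    version = bump(version, change)
    history.append(version)

print(history)  # ['1.0.0', '1.1.0', '1.1.1', '2.0.0']
```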

Semantic versioning is more than a versioning system—it’s a governance framework embedded in every aspect of modern API lifecycle management. Embracing it ensures clarity, confidence, and consistency across your API ecosystem.

Backwards Compatibility

Backwards compatibility refers to the ability of an API to evolve without breaking existing client integrations. It is a critical principle in API lifecycle management, especially in environments where APIs are consumed by multiple external systems, partners, or teams. Maintaining backward compatibility ensures that existing consumers can continue to rely on their current integrations while the API evolves to support new use cases or features.

Examples of Backwards-Compatible Changes

These changes can safely be introduced under a MINOR or PATCH version increment:

- Addition of a new field (non-mandatory): Introducing a new optional field to a response payload does not impact existing consumers, as their applications will simply ignore unrecognized fields.

- Addition of a new operation: Adding a new endpoint or method expands the API’s capabilities without altering current functionality. Consumers who do not use the new operation are unaffected.

- Addition or removal of HATEOAS links: Hypermedia links, which guide clients through the application via links in the response, are non-intrusive additions. Clients not utilizing HATEOAS links remain unaffected by these changes.

- Support for a new media type: Providing responses in an additional format (e.g., supporting both application/json and application/pdf) enables broader use cases without disrupting existing integrations.

These changes enable you to iterate and add value while preserving confidence and minimizing the need for coordinated releases or consumer migrations.

Examples of Breaking (Non-Backwards-Compatible) Changes

The following changes require a new MAJOR version because they alter the contract between the API and its consumers, introducing risk of failure:

- Removal of fields from a response: Clients relying on these fields will break when the data disappears.

- Change of field data types: Modifying a field from string to boolean, for example, can cause type validation errors in consumer applications expecting a different format.

- Removal of an operation: Deleting an endpoint eliminates functionality relied on by consumers, rendering integrations unusable.

- Removal of a media type: Dropping support for a response format (e.g., no longer returning application/xml) affects clients expecting that format.

- New or tightened API security definitions: Introducing stricter authentication or authorization policies, such as requiring new headers or scopes, may prevent previously authorized requests from being accepted, effectively breaking consumer access.
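The field-level rules from the two lists above can be approximated with a small Python check. This is a simplified sketch: it represents a response schema as a flat mapping of field name to type name, and it assumes that every added field is optional. Real schema comparison (nested objects, operations, media types, security definitions) needs considerably more than this.

```python
def classify_change(old: dict[str, str], new: dict[str, str]) -> str:
    """Compare two response schemas given as {field name: type name}."""
    removed = old.keys() - new.keys()
    retyped = {name for name in old.keys() & new.keys() if old[name] != new[name]}
    added = new.keys() - old.keys()
    if removed or retyped:
        return "MAJOR"  # removed field or changed type: breaking
    if added:
        return "MINOR"  # new field, assumed optional: backward-compatible
    return "PATCH"      # no change to the response shape


v1 = {"id": "string", "active": "string"}

print(classify_change(v1, {**v1, "nickname": "string"}))            # MINOR
print(classify_change(v1, {"id": "string", "active": "boolean"}))   # MAJOR
print(classify_change(v1, {"id": "string"}))                        # MAJOR
```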

Why This Matters

Understanding the boundaries between compatible and incompatible changes allows teams to:

- Protect consumers from unexpected disruptions

- Communicate versioning implications clearly

- Apply consistent versioning rules across development teams

- Maintain confidence in automated deployment and integration pipelines

Backwards compatibility is the cornerstone of stable API evolution. When in doubt, treat the consumer experience as sacrosanct and err on the side of non-breaking enhancements. This philosophy enables agile development without sacrificing trust.

Test Environment Break-Fixes

In a well-governed API lifecycle, major version increments are reserved for production-deployed APIs that introduce breaking changes — such as changes to payload structure, endpoint removal, or stricter security requirements. However, real-world development often requires nuanced handling of pre-production APIs that may still be undergoing iterative feedback and validation. This is especially true in integrated test environments, where APIs are actively consumed by internal teams, partner applications, or staging integrations.

When a breaking change is identified in a pre-release API — for example, to fix a logic bug, refine a data model, or realign behavior with business rules — introducing a major version bump may be premature or even counterproductive. Why? Because the API hasn’t yet reached a state of general availability, and a major version increment could create unnecessary complexity in version management, consumer onboarding, and test data synchronization.

Instead, the recommended approach is to:

- Increment the MINOR version number while maintaining the same MAJOR version (e.g., from v1.2 to v1.3).

- Clearly document the nature of the change, especially if it introduces breaking behavior within the test environment.

- Use API analytics and telemetry tools to identify active consumers of the current version (e.g., test teams, QA suites, automated testers).

- Notify those consumers proactively, explaining the nature of the change, its implications, and the expected migration path or update window.
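One way to encode this environment-specific rule is sketched below in Python. The function name and the generally_available flag are assumptions made for illustration; how an organization records that an API has reached general availability will vary.

```python
def bump_for_breaking_change(version: str, generally_available: bool) -> str:
    """Pick the next version for a breaking change.

    Before general availability the break is absorbed into a MINOR bump;
    once the API is generally available it requires a new MAJOR version.
    """
    major, minor, _patch = (int(part) for part in version.split("."))
    if generally_available:
        return f"{major + 1}.0.0"
    return f"{major}.{minor + 1}.0"


print(bump_for_breaking_change("1.2.0", generally_available=False))  # 1.3.0
print(bump_for_breaking_change("1.2.0", generally_available=True))   # 2.0.0
```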

This strategy strikes a balance between honoring versioning discipline and enabling agile iteration. It acknowledges that early-stage APIs are more fluid and allows development teams to remain responsive to test feedback without causing downstream confusion with premature versioning semantics.

Moreover, this approach reinforces the importance of having environment-specific versioning policies and robust observability across environments. By treating staging and testing environments as first-class citizens in your lifecycle, you improve the overall maturity and reliability of your release management process.

Replacing a Major API Version

Managing the transition to a new major API version is one of the most complex and sensitive operations in the API lifecycle. It has far-reaching implications for consumers, developers, platform teams, and business stakeholders. To ensure success, the process must be deliberate, transparent, and well-communicated. Below are the key principles and activities to consider when introducing a new major version of an API.

Justify, Document, and Socialize

Before deciding to introduce a major version, strong justification is essential. Major version changes indicate breaking changes—modifications that are incompatible with existing consumers. These could include changes to request/response schemas, removal of operations, security policy tightening, or business logic rewrites. Given the potential disruption, API owners must first explore all alternatives, such as backward-compatible extensions, additive changes, or version-less enhancements.

If breaking changes are deemed necessary, the decision must be documented formally as part of an Architecture Decision Record (ADR). This ensures traceability and alignment with enterprise standards. API owners should socialize the decision in agile planning forums, architecture boards, or stakeholder syncs. The goal is to drive early awareness, reduce surprises, and ensure support teams are ready for the operational impact.

Decouple Cut-over

Every major version of an API is effectively a new contract. It must be published as a distinct entity in your API gateway or catalog. Existing consumers do not automatically migrate—they must opt-in by subscribing to the new version and updating their integrations. This “opt-in” behavior supports decoupled cut-over, allowing each consumer to migrate on their own schedule.

During this transition, both versions must be maintained:

- The previous version should be deprecated (no new subscriptions allowed).

- Existing consumers must be monitored for migration progress.

- Platform teams should prepare to manage multiple active versions simultaneously, which can increase operational overhead and complexity.

Clear documentation, version tagging, and consistent developer portal communication are essential to prevent confusion.

Communicate a Retirement Schedule

The success of a major version cut-over depends heavily on effective communication. Once a new version is published and the previous version is deprecated, API owners must:

- Identify active consumers of the deprecated version using analytics and subscription data.

- Send targeted notifications outlining the upcoming retirement.

- Provide documentation, migration guides, code samples, and timelines.

Ideally, the retirement schedule is published in advance (e.g., “v1 will be retired on YYYY-MM-DD”), with reminders issued periodically. Most API management platforms support automated deprecation notices via portal notifications or webhook alerts.
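The notification step can be sketched as follows. The Subscription record, its calls_last_30_days field, and the message wording are hypothetical; in practice this data would come from the analytics and subscription records of the API management platform in use.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Subscription:
    consumer: str
    api: str
    major: int
    calls_last_30_days: int  # hypothetical usage metric from analytics


def retirement_notices(
    subscriptions: list[Subscription], api: str, major: int, retire_on: date
) -> list[str]:
    """Build one notice per consumer still calling the deprecated version."""
    notices = []
    for sub in subscriptions:
        if sub.api == api and sub.major == major and sub.calls_last_30_days > 0:
            notices.append(
                f"{sub.consumer}: {api} v{major} will be retired on "
                f"{retire_on.isoformat()}; please migrate to v{major + 1}."
            )
    return notices


subscriptions = [
    Subscription("billing-app", "membership", 1, 1200),
    Subscription("legacy-report", "membership", 1, 0),   # inactive, skipped
    Subscription("mobile-app", "membership", 2, 5400),   # already migrated
]
for notice in retirement_notices(subscriptions, "membership", 1, date(2030, 1, 31)):
    print(notice)
```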

Retire and Remove the API

Once all consumers have migrated — or the retirement deadline is reached — the API can be retired. In retirement:

- All subscription access is revoked.

- Calls to the retired endpoint return a 4xx or 5xx error (e.g., HTTP 410 Gone).

- The API is removed from catalogs or flagged as inactive.

Importantly, retirement is reversible. If unexpected fallout occurs, access can be re-enabled. In contrast, removal is a permanent deletion of the specification, deployment artifacts, and gateway policies. Removal should only occur after retirement has been stable and uneventful, and is typically subject to a formal change control process.
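The difference between reversible retirement and permanent removal can be expressed as a small state machine. The sketch below is in Python; the state names follow the terms used in this section, while the rule that a retired API returns to the deprecated state when access is re-enabled is an assumption.

```python
from enum import Enum


class State(Enum):
    PUBLISHED = "published"
    DEPRECATED = "deprecated"
    RETIRED = "retired"
    REMOVED = "removed"


# Allowed moves between states. RETIRED -> DEPRECATED models re-enabling
# access after unexpected fallout (an assumption); REMOVED has no way back.
TRANSITIONS = {
    State.PUBLISHED: {State.DEPRECATED},
    State.DEPRECATED: {State.RETIRED},
    State.RETIRED: {State.DEPRECATED, State.REMOVED},
    State.REMOVED: set(),
}


def transition(current: State, target: State) -> State:
    """Return the new state, or raise if the move is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


def accepts_new_subscriptions(state: State) -> bool:
    return state is State.PUBLISHED


def serves_traffic(state: State) -> bool:
    return state in (State.PUBLISHED, State.DEPRECATED)


state = transition(State.DEPRECATED, State.RETIRED)
state = transition(state, State.DEPRECATED)  # retirement rolled back
print(state.value, serves_traffic(state), accepts_new_subscriptions(state))
# deprecated True False
```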

By treating major version replacements as strategic lifecycle events—rather than simple code deployments—organizations can minimize disruption, build consumer trust, and scale their API programs responsibly. Major version management is not just a technical task; it’s a disciplined, cross-functional process of change leadership.

Design Tooling and Documentation

API Documentation

High-quality API documentation is a foundational element of any successful API program. It serves as the contract between the API provider and its consumers, enabling developers to understand how to integrate, test, and use APIs effectively and with minimal friction. Poor documentation leads to failed integrations, increased support overhead, and reduced adoption. Comprehensive, consistent, and accessible documentation, on the other hand, accelerates development and fosters a better developer experience.

The OpenAPI Specification (OAS) has become the de facto industry standard for describing RESTful APIs. It is a language-agnostic, machine-readable format that enables seamless integration with a wide array of tools—including API gateways, developer portals, automated testing suites, and client code generators. Its broad ecosystem support means that developers can visualize, validate, simulate, and generate API interfaces without ever writing a line of backend code.

Best Practices for OpenAPI Adoption

To fully leverage OpenAPI’s capabilities, organizations should:

- Adopt the latest stable version of OAS (e.g., 3.1.x) to benefit from enhancements in schema modeling, content negotiation, and security definitions.

- Ensure compatibility with your chosen API Management platform. Some commercial gateways offer extended support for certain OAS features, while others may lag behind—so test compatibility with both gateway policies and developer portal renderers.

- Maintain versioning best practices. The info.version field must include the full semantic version number to support automation and traceability.

    info:
      title: Membership API
      version: 1.1.2

Enhance Developer Experience with Examples

Every operation in the OpenAPI specification should include rich examples for both requests and responses. These examples:

- Act as live documentation for developers who want to experiment quickly.

- Reduce the trial-and-error learning curve for new API consumers.

- Ensure consistent expectations across teams, especially when multiple consumers are integrating with the same endpoints.

Enforce Linting Rules for Quality and Consistency

To maintain consistency across teams and reduce ambiguity, organizations should adopt linting rules for OpenAPI documents. These rules enforce style and governance policies on aspects such as:

- Naming conventions for endpoints and parameters

- Required fields and response structures

- Use of standardized error formats

- Security scheme requirements

Spectral is a powerful tool for defining and enforcing OpenAPI linting rules. Organizations can start with community rule sets and evolve them based on internal standards and domain-specific requirements.

Linting can be integrated directly into CI pipelines, enabling automated feedback during pull requests. This ensures that only conformant API specifications are allowed to progress through the lifecycle, reducing downstream quality issues and promoting maintainability.
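To illustrate the kind of rule a linter enforces, here is a minimal Python sketch that checks two of the practices described above: a full semantic version in info.version, and an example on every response body. It is a stand-in written for illustration and does not use Spectral or its ruleset format; the function name lint and the message wording are hypothetical.

```python
import re

SEMVER = re.compile(r"\d+\.\d+\.\d+")
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def lint(spec: dict) -> list[str]:
    """Return a list of problems found in a parsed OpenAPI document."""
    problems = []
    version = str(spec.get("info", {}).get("version", ""))
    if not SEMVER.fullmatch(version):
        problems.append(f"info.version {version!r} is not MAJOR.MINOR.PATCH")
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            for status, response in operation.get("responses", {}).items():
                for media_type, media in response.get("content", {}).items():
                    if "example" not in media and "examples" not in media:
                        problems.append(
                            f"{method.upper()} {path} {status} {media_type}: "
                            "response has no example"
                        )
    return problems


spec = {
    "info": {"title": "Membership API", "version": "1.1"},
    "paths": {
        "/applicants": {
            "get": {
                "responses": {
                    "200": {"content": {"application/json": {"schema": {"type": "object"}}}}
                }
            }
        }
    },
}
for problem in lint(spec):
    print(problem)
```

In a CI job, a non-empty result would fail the pull request check.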

API documentation is not an afterthought—it’s a strategic asset. The use of OpenAPI Specification empowers organizations to:

- Automate parts of the development lifecycle

- Improve collaboration between developers and consumers

- Standardize how APIs are described, versioned, and consumed

A strong documentation foundation built on OAS, coupled with automated linting, delivers on the promise of developer self-service, consistency, and scalability—all essential qualities for modern API ecosystems.

More on OpenAPI documentation and best practices can be found here:

API Documentation Rules — Improving the Quality and Consistency of APIs with OpenAPI Documentation and Linting Rules

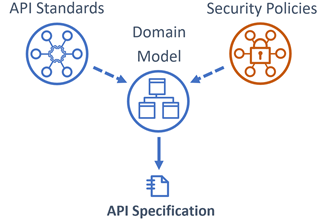

Domain-Driven API Design Practice

A robust domain-driven API design practice is foundational for creating scalable, maintainable, and business-aligned APIs. This practice ensures that APIs are not only technically sound but also deeply aligned with the core concepts of the business domain they represent.

At the heart of domain-driven design is the domain model—a conceptual representation of the core business logic, processes, and rules. The model functions as a Ubiquitous Language, a term coined by Eric Evans and emphasized by Martin Fowler in his description of Bounded Contexts. This language bridges communication between developers, product managers, and domain experts. When everyone speaks the same language around business entities and actions, alignment improves, feedback loops shorten, and misunderstandings decrease.

Once the domain model is established, it becomes the source of truth from which API specifications are derived. These specifications must conform to organizational API design standards and security policies. Depending on organizational maturity, the specifications may be:

- Tool-generated, using model-driven platforms that enforce consistency and versioning

- Template-based, using standard scaffolds approved by the API Center of Excellence

- Hand-crafted, for edge cases requiring bespoke definitions

Regardless of the method, peer review is essential. It ensures correctness, completeness, and adherence to design guidelines.

Example: API specifications are generated directly from the domain model using design tools.

A mature domain-driven API design practice also supports source control integration, enabling traceability of model changes, API updates, and consumer impacts. These tools should ideally handle semantic versioning of models and derived artifacts, streamlining the governance and change management processes.

Incorporating model-driven development and design discipline reduces rework, enforces architectural alignment, and facilitates automation. This leads to high-quality, coherent, composable APIs that evolve gracefully with the business.

For deeper guidance, see:

Managing API Lifecycles with Model Driven, Integrated DevOps

API Design Practice: A practical guide to API QA and the design of stable, coherent and composable business resource APIs

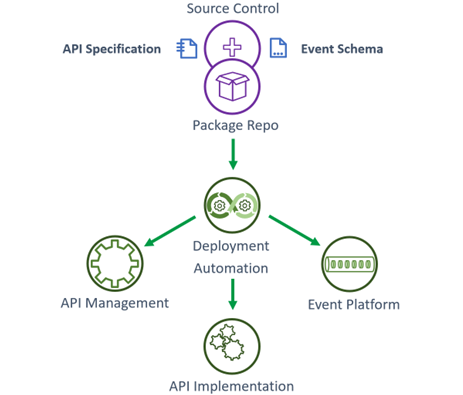

Vertically Integrated DevOps

In modern API-first and microservices-based environments, delivering business functionality quickly and reliably depends on seamless coordination between development, operations, and architecture teams. However, many enterprises still face significant friction when integrating updated APIs into production systems. This friction often stems from disconnected tooling, manual handoffs, siloed governance processes, and a lack of automation.

Vertically Integrated DevOps is an architectural and operational approach that eliminates these pain points by tightly coupling domain modeling, API design, implementation, testing, and deployment through a unified toolchain and automated pipelines. Rather than treating APIs as artifacts tacked onto software delivery at the end, this method treats them as first-class citizens, integrated from the earliest stages of design.

The approach begins with model-driven development—using collaborative tools to define domain concepts, data schemas, and interactions. These models are not static diagrams; they serve as living assets that generate API specifications, security policies, and configuration templates. These assets are committed to source control and form the blueprint for everything downstream.

From there, CI/CD pipelines automatically generate test scaffolds, validate OpenAPI definitions via linting tools like Spectral, enforce security and compliance policies, and trigger gateway deployments. Deployment artifacts (e.g., API proxies, gateway configs) are built and versioned alongside the core service code, ensuring alignment.

Benefits of this vertical integration include:

- Consistency: APIs deployed to gateways exactly match the design approved and tested in lower environments.

- Speed: Frictionless propagation of changes from domain model to production accelerates delivery.

- Traceability: Every API change can be traced back to a domain model version, supporting audit and rollback.

- Autonomy: Product teams can deploy, test, and evolve their APIs without waiting for centralized governance reviews.

By collapsing the traditional separation between architecture, development, and DevOps, Vertically Integrated DevOps empowers organizations to deliver high-quality, secure, and business-aligned APIs with confidence and speed. It is a cornerstone capability for enterprises pursuing API economy participation, microservices at scale, and platform thinking.

A Single Repository

One of the most effective ways to reduce complexity, maintain consistency, and streamline automation in API lifecycle management is to co-locate API specifications and configuration files with the service implementation in a single, unified repository. This repository becomes the source of truth not only for the business logic, but also for the interface definition, access policies, deployment scripts, and observability instrumentation.

By housing everything in a single repository, developers, testers, security engineers, and DevOps teams can collaborate around the same versioned artifacts. This setup enables clear traceability from domain model to code, from API spec to deployment configuration. It ensures that updates to the API interface are synchronized with corresponding changes in service logic, policy enforcement, and documentation.

A unified repository also enables automated CI/CD pipelines to trigger from a single source of change. When a developer pushes a change to the API specification or service code, the pipeline can automatically:

- Lint and validate the OpenAPI document

- Generate SDKs or client libraries

- Build and test the service

- Deploy the API gateway proxy configuration

- Register the updated API with an API portal or catalog

- Trigger end-to-end integration and security tests

This level of automation reduces manual overhead and eliminates the risk of API drift—where what’s implemented in production diverges from what’s documented or intended.
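The ordering and fail-fast behavior of such a pipeline can be sketched in Python as follows. The step names echo the list above, and the lambda bodies are placeholders; a real pipeline would be defined in the configuration format of the chosen CI engine.

```python
from typing import Callable

Step = tuple[str, Callable[[], bool]]


def run_pipeline(steps: list[Step]) -> list[str]:
    """Run steps in order; stop at the first failure and report what ran."""
    log = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later steps, such as deployment, never run
    return log


steps: list[Step] = [
    ("lint OpenAPI document", lambda: True),
    ("build and test service", lambda: False),  # simulated failure
    ("deploy gateway configuration", lambda: True),
]
print(run_pipeline(steps))
# ['lint OpenAPI document: ok', 'build and test service: FAILED']
```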

In large organizations, this pattern can be extended with monorepos (a single repository for multiple services) or multi-repo orchestration via GitOps workflows. But regardless of scale, the principle remains: keeping code and configuration together improves delivery velocity, enforces policy compliance, and strengthens operational integrity.

CI/CD Pipeline Enrollment

Enrolling APIs into a Continuous Integration and Continuous Deployment (CI/CD) pipeline is a pivotal step in realizing a modern, scalable API delivery model. CI/CD enables repeatable, automated, and consistent progression of APIs from design through to deployment, while embedding critical checks at every stage.

To ensure successful pipeline enrollment, enterprise-endorsed DevOps tools and platforms must be clearly documented and integrated into API lifecycle guidance. These tools include:

- CI engines (e.g., GitHub Actions, GitLab CI, Jenkins, CircleCI)

- CD platforms (e.g., Argo CD, Spinnaker)

- Infrastructure-as-Code tools (e.g., Terraform, Pulumi)

- Security scanning and policy enforcement (e.g., Spectral, Snyk, Checkov)

- Observability and alerting platforms (e.g., Prometheus, Grafana, New Relic)

Organizations should provide links to onboarding documentation, reusable pipeline templates, and credentialing policies to make it easier for teams to adopt the approved toolchain.

Environments and Maturity

Pipeline onboarding typically follows a maturity curve:

- Development environments (e.g., sandboxes, testbeds) often support rapid onboarding with fewer compliance gates. Here, the goal is to iterate quickly, get feedback, and validate assumptions.

- Staging and production environments, however, require elevated governance. APIs must meet a defined set of quality, security, and operational readiness criteria. This may include peer reviews, OpenAPI linting, test coverage thresholds, vulnerability scans, and successful deployments in upstream environments.
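The maturity curve can be represented as a set of required gates per environment. In the Python sketch below, the gate names and the exact assignment of gates to environments are illustrative assumptions based on the criteria listed above.

```python
# Hypothetical gate names; each environment requires a superset of the
# gates demanded by the environment before it.
REQUIRED_GATES = {
    "development": {"openapi_lint"},
    "staging": {"openapi_lint", "peer_review", "test_coverage", "vulnerability_scan"},
    "production": {
        "openapi_lint",
        "peer_review",
        "test_coverage",
        "vulnerability_scan",
        "staging_deployment",
    },
}


def missing_gates(environment: str, passed: set[str]) -> set[str]:
    """Return the gates an API still has to pass for this environment."""
    return REQUIRED_GATES[environment] - passed


passed = {"openapi_lint", "peer_review"}
print(sorted(missing_gates("development", passed)))  # []
print(sorted(missing_gates("staging", passed)))      # ['test_coverage', 'vulnerability_scan']
```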

Governance and Autonomy

While automation is the goal, it must be accompanied by clear accountability structures. Autonomous pipelines should be treated as privileged assets. Teams that onboard a fully autonomous API CI/CD pipeline should be responsible for:

- Monitoring API quality metrics

- Responding to security incidents

- Managing versioning and backward compatibility

- Maintaining service-level objectives (SLOs) and service-level agreements (SLAs)

Governance models must balance developer autonomy with enterprise compliance, ensuring that teams can move fast without sacrificing reliability or violating policy. Approval workflows (e.g., for production releases) should be enforced where appropriate, and telemetry from the pipeline should feed back into governance dashboards for auditability.

By formalizing pipeline enrollment as a structured process—supported with templates, tools, and training—organizations can scale their API programs without creating bottlenecks or compromising standards.

Compliance with Security Controls and API Design Standards

In modern enterprise environments, ensuring that APIs are secure, consistent, and compliant from the outset is not just a best practice—it’s a business necessity. With the growing reliance on APIs to power microservices, integrations, and customer-facing applications, security and design standards must be embedded directly into the CI/CD pipelines through Policy-as-Code automation.

Policy-as-Code refers to the practice of defining security, governance, and design policies using declarative configuration languages (such as Rego for Open Policy Agent), which are then enforced by automation tooling. This means that instead of relying on manual reviews or ad hoc documentation, API governance becomes programmable, testable, and repeatable.

Automated Controls in the Pipeline

Once an API specification is committed to source control (often as an OpenAPI document), the CI/CD pipeline will automatically:

- Lint the API spec using tools like Spectral to ensure naming conventions, schema structure, versioning, and description completeness adhere to organizational standards.

- Enforce design rules, such as naming conventions for endpoints and parameters, required response formats, and adherence to standardized pagination or error-handling models.

- Check for security compliance, such as validating the presence and configuration of:

- Authentication schemes (e.g., OAuth 2.0, API keys)

- Authorization scopes

- Secure transport (HTTPS-only enforcement)

- Rate limiting and throttling policies

- Scan for sensitive data exposures in request/response payloads or logging practices.

- Block deployments if any policy violations are detected.
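A few of the security checks above can be sketched as plain Python functions over a parsed OpenAPI document. This is a stand-in for illustration only: it is not Rego, Open Policy Agent, or Spectral, and the function names and messages are hypothetical. It covers the presence of security schemes, HTTPS-only servers, and a security requirement on every operation.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def security_violations(spec: dict) -> list[str]:
    """Return policy violations found in a parsed OpenAPI document."""
    violations = []
    if not spec.get("components", {}).get("securitySchemes"):
        violations.append("no security schemes defined")
    for server in spec.get("servers", []):
        if not server.get("url", "").startswith("https://"):
            violations.append(f"server {server.get('url')!r} is not HTTPS")
    default_security = spec.get("security", [])
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue
            # An operation-level "security" overrides the document default;
            # an empty list means the operation is left unprotected.
            if not operation.get("security", default_security):
                violations.append(f"{method.upper()} {path} has no security requirement")
    return violations


def may_deploy(spec: dict) -> bool:
    """Block the deployment when any violation is present."""
    return not security_violations(spec)


spec = {
    "servers": [{"url": "http://api.example.com"}],
    "paths": {"/applicants": {"get": {"security": [], "responses": {}}}},
}
print(security_violations(spec))
print(may_deploy(spec))  # False
```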

Benefits of Embedding Security and Design in the Lifecycle

Embedding these controls early in the lifecycle ensures that issues are caught before they reach production, dramatically reducing the risk of data breaches, non-compliant releases, and inconsistent developer experiences. Moreover, by eliminating manual gatekeeping in favor of automated checks, teams reduce cycle time and improve reliability.

This also builds confidence among stakeholders. Product managers can trust that new APIs meet enterprise branding and behavior expectations. Security teams know that no API will bypass required controls. Developers benefit from immediate feedback during pull requests, enabling them to fix issues quickly without navigating slow review loops.

Transparency and Auditability

A compliant pipeline also creates a paper trail for audits. Each API release is traceable, and the CI logs show which checks passed or failed—critical for industries with strict regulatory oversight such as finance, healthcare, and government. Versioned policies can evolve as the organization’s needs grow, enabling continuous improvement.

Ultimately, enforcing security and design standards through automation transforms compliance from a barrier into a strategic enabler—fostering speed, safety, and scale in API delivery.

Coordinated, Automated Deployment

Managed APIs are the interface to deployed microservices. DevOps tooling must therefore publish new and updated interfaces and schemas, generated by domain modeling tooling and implemented by a business service, to the relevant API management platforms (and potentially event, security, and distributed graph platforms) at the same time as the business service is deployed. Deployment and testing of APIs must be automated, domain-autonomous, and as frictionless as possible.

When new versions of an API are deployed, automation must be able to detect and deprecate previous versions (no new subscribers allowed).
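A minimal Python sketch of this automation is shown below. The in-memory catalog, keyed by API name and MAJOR version, is a hypothetical stand-in for the catalog of an API management platform.

```python
def publish(catalog: dict[str, dict[int, str]], api: str, major: int) -> None:
    """Publish a MAJOR version and deprecate older published versions."""
    versions = catalog.setdefault(api, {})
    for existing, state in versions.items():
        if existing < major and state == "published":
            versions[existing] = "deprecated"  # no new subscribers allowed
    versions[major] = "published"


catalog = {"membership": {1: "published"}}
publish(catalog, "membership", 2)
print(catalog)  # {'membership': {1: 'deprecated', 2: 'published'}}
```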

Vertically Integrated DevOps is discussed further in the article: Managing API Lifecycles with Model Driven, Integrated DevOps.

Registering API Resources with IAM

As APIs increasingly serve as the front door to business capabilities, data assets, and critical services, proper registration and governance of API resources with Identity and Access Management (IAM) platforms is no longer optional—it’s essential. An effective API lifecycle model must formally include steps to ensure that every newly created or updated API is properly registered with the enterprise’s security infrastructure to enable secure, auditable access control.

This process, often referred to as resource registration, involves formally cataloging the API’s capabilities and associating them with policies in the API Management platform, Security Token Service (STS), and IAM systems. It allows the organization to manage who can access what, under which conditions, and with what level of authorization.

Why Resource Registration Matters

- Fine-Grained Access Control: Without resource registration, it becomes difficult to enforce role-based or attribute-based access controls on individual API endpoints. Registration makes it possible to scope access tokens and API keys precisely to permitted resources and operations.

- Auditability and Compliance: Registered resources can be tracked, monitored, and logged by security infrastructure. This is critical for meeting regulatory and audit requirements (e.g., GDPR, HIPAA, PCI-DSS) where proving least-privileged access and data lineage is required.

- Policy Enforcement: Policies related to data classification (e.g., public, internal, confidential), rate limits, and multi-factor authentication requirements can only be applied if the resource is registered and its classification is known to the enforcement point.

- Service Discovery and Access Management: API gateways, service meshes, and developer portals often use the IAM registry to populate catalogs and enforce client entitlements. Without registration, APIs may be invisible or unprotected.

What Should Be Registered?

The resource registration process should capture and publish metadata such as:

- Resource Path and Method (e.g., POST /accounts, GET /orders/{id})

- Scope or Role Mappings (e.g., finance.write, admin.view)

- Data Sensitivity Classification (e.g., public, confidential, restricted)

- Access Policy Bindings (e.g., OAuth 2.0 scope, client credentials, rate limits)

- Linked Consumer Applications and entitlements

- API Lifecycle Status (e.g., published, deprecated)

This metadata enables IAM systems to dynamically evaluate access policies during request authorization.
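As an illustration only, the metadata listed above could be modeled as a small record that a policy decision point consults at request time. The `RegisteredResource` class, its field names, the `billing-app` client, and the `is_access_allowed` rule are assumptions made for this sketch rather than the schema of any specific IAM product. The sketch also assumes that deprecated APIs remain callable by existing, entitled consumers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisteredResource:
    """Hypothetical registration record for one API operation."""
    method: str
    path: str
    required_scopes: frozenset
    classification: str                      # e.g. public, confidential, restricted
    lifecycle_status: str                    # e.g. published, deprecated
    entitled_clients: frozenset = frozenset()


def is_access_allowed(resource: RegisteredResource, client_id: str, token_scopes) -> bool:
    """Evaluate a request against the registered metadata.

    Assumed policy: the API must still be callable, the client must be an
    entitled consumer, and the token must carry every required scope.
    """
    if resource.lifecycle_status not in {"published", "deprecated"}:
        return False
    if client_id not in resource.entitled_clients:
        return False
    return resource.required_scopes <= set(token_scopes)


create_account = RegisteredResource(
    method="POST",
    path="/accounts",
    required_scopes=frozenset({"finance.write"}),
    classification="confidential",
    lifecycle_status="published",
    entitled_clients=frozenset({"billing-app"}),
)

allowed = is_access_allowed(create_account, "billing-app", ["finance.write", "admin.view"])
denied_scope = is_access_allowed(create_account, "billing-app", ["admin.view"])
denied_client = is_access_allowed(create_account, "other-app", ["finance.write"])
```

Keeping classification and lifecycle status on the same record as the scope mappings lets a single lookup answer both "may this client call this operation" and "which policies apply to it".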

Integration with STS and API Management

Security token services (STS), such as those built on OAuth 2.0, OpenID Connect, or SAML-based SSO, should be integrated with the API Management platform and the IAM registry. This allows access tokens issued by the STS to carry the appropriate scopes, claims, and entitlements aligned with the registered API resource metadata.

API gateways can then validate these tokens and enforce access controls in real time. If a resource is not registered, gateways may default to overly permissive or restrictive behavior—both of which can undermine security and usability.
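One way to avoid ambiguous defaults is to make the gateway's behavior for unregistered resources explicit. The sketch below assumes the token has already been verified upstream (signature, issuer, expiry) and that its scopes are available as a set of strings. The `REGISTRY` mapping, the `authorize` function, and the choice to return 403 for unregistered resources are hypothetical design choices, not the behavior of a specific gateway.

```python
from __future__ import annotations

import re

# Hypothetical registry: (HTTP method, path template) -> scopes the token must carry.
REGISTRY = {
    ("POST", "/accounts"): {"finance.write"},
    ("GET", "/orders/{id}"): {"admin.view"},
}


def _template_to_regex(template: str) -> re.Pattern:
    """Turn a path template such as /orders/{id} into an anchored regex."""
    # Split on {param} placeholders and match one path segment for each.
    parts = re.split(r"\{[^/{}]+\}", template)
    return re.compile("^" + "[^/]+".join(re.escape(p) for p in parts) + "$")


def find_required_scopes(method: str, path: str):
    """Return the required scopes for a registered operation, or None."""
    for (reg_method, template), scopes in REGISTRY.items():
        if reg_method == method and _template_to_regex(template).match(path):
            return scopes
    return None


def authorize(method: str, path: str, token_scopes: set) -> tuple[int, str]:
    """Return an (HTTP status, reason) pair for an already-validated token."""
    required = find_required_scopes(method, path)
    if required is None:
        # Explicit default-deny: unregistered resources are never served.
        return 403, "resource not registered"
    if not required <= set(token_scopes):
        return 403, "insufficient scope"
    return 200, "ok"


ok = authorize("GET", "/orders/42", {"admin.view"})
wrong_scope = authorize("POST", "/accounts", {"admin.view"})
unregistered = authorize("DELETE", "/orders/42", {"admin.view"})
```

A default-deny rule trades some usability for safety: an API that was deployed without registration fails visibly instead of being served without protection.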

Governance and Developer Guidance

An effective API lifecycle model must provide developers with clear guidance on how to register resources as part of the design and publishing workflow. This includes:

- Links to internal documentation and tooling for API resource registration

- Required metadata templates for resource declarations

- Approval workflows for sensitive or regulated data resources

- Integration steps with IAM and STS configurations

Where possible, automation should drive this process. Resource declarations can be embedded within OpenAPI specs or CI/CD pipelines to trigger registration with API gateways and IAM systems programmatically.
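A CI/CD step along these lines might derive resource declarations directly from an OpenAPI document. The sketch assumes the spec has already been parsed into a Python dictionary and that it follows the OpenAPI 3 convention where an operation-level `security` entry overrides the top-level one. The `x-data-classification` extension field, the `unclassified` default, and the `extract_resource_declarations` function are hypothetical, and the final call that submits the declarations to a gateway or IAM system is intentionally left out because it depends on the platform.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def extract_resource_declarations(spec: dict) -> list:
    """Build one declaration per operation found in an OpenAPI 3 document."""
    declarations = []
    default_security = spec.get("security", [])
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            # Operation-level security overrides the document-level default.
            security = operation.get("security", default_security)
            # Simplification: scopes from alternative requirements are merged,
            # which loses the either/or meaning of multiple requirements.
            scopes = sorted({
                scope
                for requirement in security
                for scheme_scopes in requirement.values()
                for scope in scheme_scopes
            })
            declarations.append({
                "method": method.upper(),
                "path": path,
                "scopes": scopes,
                # Hypothetical vendor extension carrying the data classification.
                "classification": operation.get("x-data-classification", "unclassified"),
            })
    return declarations


spec = {
    "openapi": "3.0.3",
    "security": [{"oauth2": ["admin.view"]}],
    "paths": {
        "/accounts": {
            "post": {
                "security": [{"oauth2": ["finance.write"]}],
                "x-data-classification": "confidential",
            }
        },
        "/orders/{id}": {"get": {}},
    },
}

declarations = extract_resource_declarations(spec)
```

Deriving declarations from the spec keeps the registered metadata in step with the published contract, so a pipeline can fail the build when an operation is missing scopes or a classification.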

Registration of API resources is further discussed in Securing APIs with an Integrated Security Framework.

The Lifecycle Is the Strategy

In an increasingly interconnected, API-first world, the ability to manage APIs with structure, discipline, and agility is a defining factor in digital success. A well-articulated API lifecycle model is not simply a procedural checklist—it is a strategic enabler that bridges the divide between innovation velocity and enterprise-grade governance.

By adopting a shared vocabulary and clearly defined lifecycle states, organizations can eliminate ambiguity and foster collaboration between architects, developers, security teams, product owners, and external partners. This common understanding allows for smoother transitions between stages, clearer handoffs, and fewer blockers across teams.

An effective API lifecycle model must be context-aware and adaptable—reflecting the realities of the organization’s technical architecture, security posture, compliance obligations, and speed-to-market goals. While the phases (Design, Build, Test, Release, and Retire) appear linear, the real-world application is iterative, feedback-driven, and continuous. APIs should not only be functional, but also discoverable, secure, versioned, governed, and performant at every stage of their existence.

Equally important is recognizing that API lifecycle management is not just a developer concern. It touches security, legal, DevOps, data governance, customer success, and business strategy. As such, the API lifecycle model should be institutionalized across the enterprise, supported by automation, integrated DevOps tooling, and championed by API governance bodies.

Final Thought

In the race to digitize, APIs are the arteries of innovation. But without a disciplined approach to their lifecycle, complexity mounts, security weakens, and developer experience suffers. An intentional, structured API lifecycle framework transforms chaos into clarity—giving organizations the operational maturity to scale securely, adapt rapidly, and build API ecosystems that endure.

Let the lifecycle model be the blueprint that empowers teams, secures data, and accelerates transformation. Design it with care. Evolve it continuously. And make it the backbone of the enterprise’s digital success.