Enhanced Semantic Cache

An improved semantic cache implementation with persistent storage, adjustable thresholds, and flexible embedders.

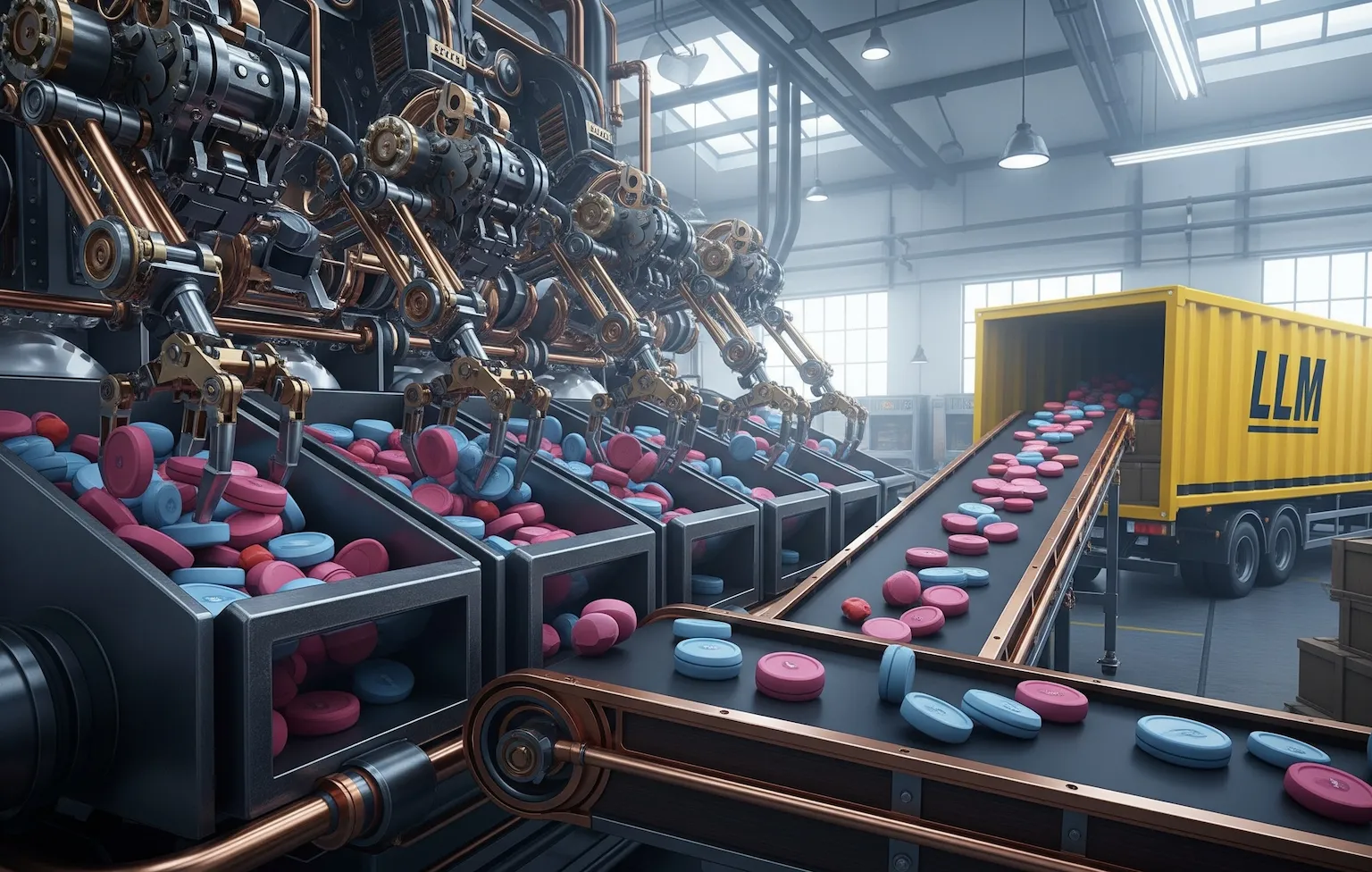

As the use of Large Language Models (LLMs) becomes more integrated into real-world applications—from customer support bots to intelligent retrieval pipelines—efficiency, cost control, and response quality have become critical concerns. One of the most effective ways to address these challenges is through semantic caching.

Following the initial release of my basic semantic cache, which served as an educational foundation and prototyping tool, I decided to introduce a more robust and production-aware version: the Enhanced Semantic Cache.

Why Enhance the Cache?

The basic version of the semantic cache was intentionally lightweight and minimalist—ideal for getting started with the concept of using embeddings to short-circuit repeated LLM calls. However, real-world systems demand more:

- Persistent storage across sessions

- Dynamic similarity thresholds

- Multiple embedding providers

- Rich metadata for insight and debugging

- Compatibility with vector databases

This enhanced version directly addresses those needs, offering greater scalability, flexibility, and observability while maintaining an intuitive and modular architecture.

Key Features

Persistent Storage with LanceDB or FAISS

Move beyond memory-only caches. Store embeddings and responses in a fast, queryable format across sessions, ideal for long-lived apps or team environments.

Configurable Similarity Thresholds

Set a default threshold, or tune it dynamically, to control how close a query must be to a cached entry to count as a match: looser for creative tasks, stricter for factual Q&A.
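To make the threshold idea concrete, here is a minimal sketch, assuming cosine similarity as the match score. The `ThresholdCache` class, its method names, and the 0.85 default are hypothetical and chosen for illustration; they are not the project's actual API.

```python
import math
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class ThresholdCache:
    """Hypothetical cache with a default threshold and a per-lookup override."""

    def __init__(self, default_threshold: float = 0.85) -> None:
        self.default_threshold = default_threshold
        self._entries = []  # list of (embedding, response) pairs

    def add(self, embedding: Sequence[float], response: str) -> None:
        self._entries.append((list(embedding), response))

    def lookup(
        self, embedding: Sequence[float], threshold: Optional[float] = None
    ) -> Optional[str]:
        """Return the best-matching response, or None if it scores below the threshold."""
        limit = self.default_threshold if threshold is None else threshold
        best_score, best_response = -1.0, None
        for stored, response in self._entries:
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= limit else None
```

Passing `threshold=` on a single lookup lets a creative endpoint accept looser matches while a factual endpoint keeps the stricter default.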

Pluggable Embedder System

Supports FastEmbed out of the box, with a simple interface for plugging in OpenAI, Hugging Face, or local sentence-transformer models.
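One way to picture a pluggable embedder is a small structural interface that any provider can satisfy. The sketch below is an assumption about the shape of such an interface, not the project's real one: `Embedder`, `HashingEmbedder`, and `embed_all` are hypothetical names, and the hashing embedder is a toy stand-in that needs no model download.

```python
import hashlib
import math
from typing import List, Protocol


class Embedder(Protocol):
    """Anything with an embed(text) method returning a vector qualifies."""

    def embed(self, text: str) -> List[float]: ...


class HashingEmbedder:
    """Toy embedder: hashes each token into a fixed-size, L2-normalized bag-of-words vector."""

    def __init__(self, dim: int = 64) -> None:
        self.dim = dim

    def embed(self, text: str) -> List[float]:
        vector = [0.0] * self.dim
        for token in text.lower().split():
            digest = hashlib.sha256(token.encode("utf-8")).digest()
            index = int.from_bytes(digest[:4], "big") % self.dim
            vector[index] += 1.0
        norm = math.sqrt(sum(v * v for v in vector))
        # Empty text yields the zero vector, which cannot be normalized.
        return [v / norm for v in vector] if norm else vector


def embed_all(embedder: Embedder, texts: List[str]) -> List[List[float]]:
    """Works with any object that satisfies the Embedder protocol."""
    return [embedder.embed(t) for t in texts]
```

An adapter for a real provider would wrap that provider's client behind the same `embed` method, so the cache never needs to know which model produced the vector.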

Metadata Tracking

Each cache entry stores creation time, usage count, similarity score, and more—paving the way for intelligent cache management and analysis.
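As a rough illustration of what per-entry metadata could look like, here is a sketch using a dataclass. The field names (`created_at`, `hit_count`, `last_similarity`) and the `eviction_candidates` helper are hypothetical; the real schema may differ.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CacheEntry:
    """Hypothetical cache record carrying the metadata described above."""

    query: str
    response: str
    created_at: float = field(default_factory=time.time)  # Unix timestamp
    hit_count: int = 0
    last_similarity: Optional[float] = None  # score of the most recent hit

    def record_hit(self, similarity: float) -> None:
        """Update usage metadata each time this entry serves a request."""
        self.hit_count += 1
        self.last_similarity = similarity


def eviction_candidates(entries: List[CacheEntry], n: int) -> List[CacheEntry]:
    """Pick the n least-used entries, breaking ties by oldest creation time."""
    return sorted(entries, key=lambda e: (e.hit_count, e.created_at))[:n]
```

Metadata like this is what makes policies such as "evict the least-used entries first" possible without touching the embeddings themselves.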

Multiple Storage Backends

Run entirely in memory for development, or choose a persistent backend (e.g., LanceDB, FAISS). This makes it easy to switch between local prototyping and production deployment.
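The backend swap can be sketched as two classes behind one interface. To keep the example dependency-free, the persistent side here is a plain JSON Lines file standing in for LanceDB or FAISS; `StorageBackend`, `InMemoryBackend`, and `JsonFileBackend` are hypothetical names, not the project's classes.

```python
import json
from pathlib import Path
from typing import Dict, List, Protocol


class StorageBackend(Protocol):
    """Minimal contract a cache would need from any backend."""

    def save(self, record: Dict) -> None: ...

    def load_all(self) -> List[Dict]: ...


class InMemoryBackend:
    """Keeps records in a list; everything is lost when the process exits."""

    def __init__(self) -> None:
        self._records = []

    def save(self, record: Dict) -> None:
        self._records.append(record)

    def load_all(self) -> List[Dict]:
        return list(self._records)


class JsonFileBackend:
    """Stand-in for a persistent store: appends one JSON record per line."""

    def __init__(self, path: str) -> None:
        self._path = Path(path)

    def save(self, record: Dict) -> None:
        with self._path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def load_all(self) -> List[Dict]:
        if not self._path.exists():
            return []
        with self._path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]
```

Because both classes expose the same two methods, the cache logic can take either one as a constructor argument, and a second `JsonFileBackend` pointed at the same path sees what an earlier session wrote.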

Async and Sync Operation Modes

Supports both synchronous and asynchronous workflows, so the cache can adapt to your app's architecture and performance model.
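A common way to offer both modes is to keep the synchronous methods as the source of truth and expose thin async wrappers around them. The sketch below assumes that approach and uses exact-match keys for brevity; `DualModeCache`, `aget`, `aset`, and `cached_call` are hypothetical names.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Optional


class DualModeCache:
    """Hypothetical cache exposing the same operations in sync and async form."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value

    async def aget(self, key: str) -> Optional[str]:
        # Run the sync method in a worker thread so a slow backend
        # would not block the event loop.
        return await asyncio.to_thread(self.get, key)

    async def aset(self, key: str, value: str) -> None:
        await asyncio.to_thread(self.set, key, value)


async def cached_call(
    cache: DualModeCache,
    prompt: str,
    llm: Callable[[str], Awaitable[str]],
) -> str:
    """Return the cached answer if present; otherwise call the LLM and store the result."""
    cached = await cache.aget(prompt)
    if cached is not None:
        return cached
    answer = await llm(prompt)
    await cache.aset(prompt, answer)
    return answer
```

Synchronous callers use `get` and `set` directly, while async frameworks await `aget` and `aset`. Note that `asyncio.to_thread` requires Python 3.9 or later.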

Logging & Metrics

Get insight into cache performance with logs and stats, including hit rates, fallback frequency, and LLM invocation counts.
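The stats side can be as simple as a few counters plus the standard `logging` module. This is a sketch under my own assumptions: `CacheStats` is a hypothetical name, and it treats every miss as a fallback to the LLM, which may not match how the project counts fallbacks.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("semantic_cache")


@dataclass
class CacheStats:
    """Hypothetical counters for cache hits, misses, and LLM invocations."""

    hits: int = 0
    misses: int = 0
    llm_calls: int = 0

    def record_hit(self, similarity: float) -> None:
        self.hits += 1
        logger.info("cache hit (similarity=%.3f)", similarity)

    def record_miss(self) -> None:
        # Assumption: each miss falls back to exactly one LLM call.
        self.misses += 1
        self.llm_calls += 1
        logger.info("cache miss, falling back to LLM")

    @property
    def hit_rate(self) -> float:
        """Fraction of lookups served from the cache (0.0 before any lookup)."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Calling `logging.basicConfig(level=logging.INFO)` at startup makes the hit and miss messages visible, and `hit_rate` gives a quick read on whether the current threshold is paying off.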

What Makes This Cache “Enhanced”?

The modular design allows developers to use only the parts they need while leaving room for growth. For example:

- Use it as a plug-in module in your FastAPI app

- Deploy it behind an API gateway as a microservice

- Integrate it directly into agent pipelines and RAG systems

It’s designed for developers, ML engineers, and architects looking for semantic recall without unnecessary compute cost.

Designed for Experimentation & Evolution

This enhanced cache is not a closed, black-box tool—it’s an evolving open-source project with extensibility at its core. It’s deliberately transparent, allowing others to inspect, modify, and build upon the caching logic.

I invite developers and AI practitioners to:

- Clone it

- Modify it

- Extend it

- Contribute ideas and improvements